1. Introduction: A New Era of AI Language Models

Large Language Models (LLMs) are evolving rapidly. From the early GPT-3 to today's GPT-4, Grok-3, and DeepSeek-R1, significant advancements have been made in terms of scale, architecture, and reasoning ability.

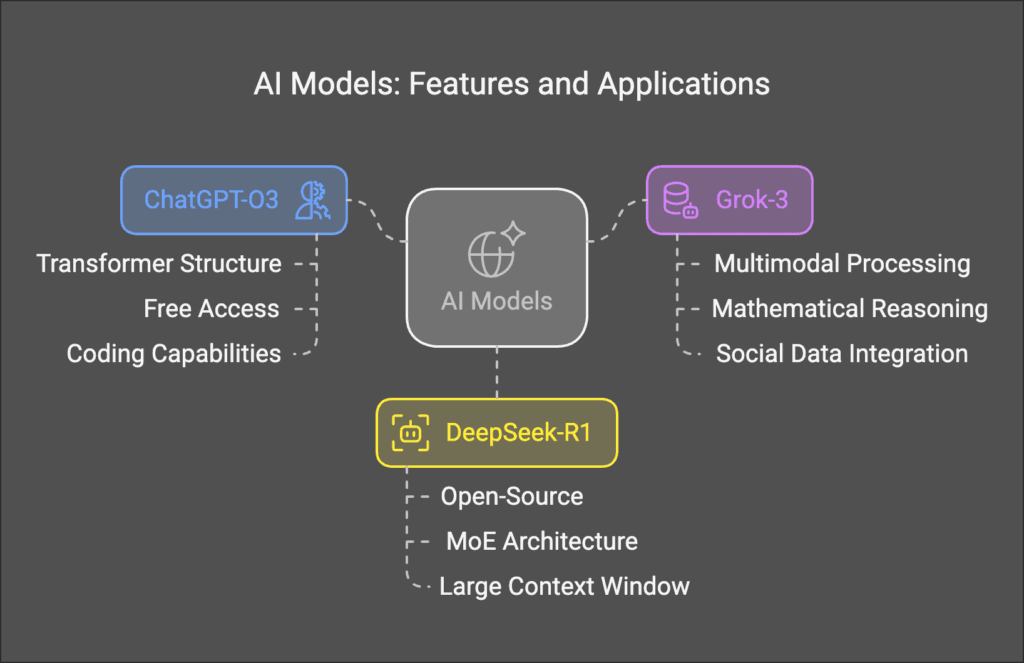

In early 2025, ChatGPT-O3 (OpenAI's o3-mini), Grok-3 (xAI), and DeepSeek-R1 (DeepSeek) emerged as three of the most closely watched AI models. Each represents a different technical approach:

- ChatGPT-O3 (o3-mini): OpenAI's cost-efficient reasoning model, specializing in code generation, STEM reasoning, and low-latency responses; it is available to free-tier ChatGPT users.

- Grok-3: Developed by Elon Musk’s xAI, strong in mathematical reasoning and real-time data processing; xAI reports that it scored highest on the AIME 2025 evaluation among these three models.

- DeepSeek-R1: An open-weight model (MIT license) built on a Mixture-of-Experts (MoE) architecture, excelling in computational efficiency, mathematics, and coding, and suitable for private deployment; smaller distilled variants extend it toward edge AI computing.

This blog aims to analyze these three AI models from a technical perspective, focusing on their core architecture, reasoning ability, training methods, computational efficiency, and application scenarios, helping technical professionals understand their advantages and make informed choices.

2. Overview of the Three Models

Before diving into technical architecture, reasoning ability, and computational efficiency, let's first summarize the key features of these three models.

2.1 ChatGPT-O3 (o3-mini)

Developer: OpenAI

Key Features:

- Optimized for fast, cost-efficient inference, with a selectable reasoning effort (low, medium, or high); OpenAI has not published its architecture details.

- Free access: o3-mini is available to free-tier ChatGPT users, lowering the barrier to trying a reasoning model; API usage is billed per token.

- Strong coding capabilities, with competitive results on code benchmarks such as HumanEval.
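Developers control this speed and cost trade-off through a `reasoning_effort` setting (`low`, `medium`, or `high`) in OpenAI's Chat Completions API. The sketch below only builds the JSON request body, so it runs offline. `build_o3_mini_request` is a hypothetical helper. Check the parameter set against OpenAI's current API reference before relying on it.

```python
import json

VALID_EFFORTS = ("low", "medium", "high")

def build_o3_mini_request(prompt: str, effort: str = "medium",
                          max_completion_tokens: int = 2048) -> dict:
    """Build a Chat Completions request body for o3-mini (hypothetical helper)."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"effort must be one of {VALID_EFFORTS}, got {effort!r}")
    return {
        "model": "o3-mini",
        # Lower effort -> fewer hidden reasoning tokens -> lower latency and cost.
        "reasoning_effort": effort,
        # o-series models use max_completion_tokens, which caps reasoning + visible output.
        "max_completion_tokens": max_completion_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }

if __name__ == "__main__":
    body = build_o3_mini_request("Write a Python function that reverses a linked list.", effort="low")
    print(json.dumps(body, indent=2))
```

You can POST the resulting body to the `/v1/chat/completions` endpoint, or pass its fields as keyword arguments to the official `openai` SDK's `client.chat.completions.create`. A common pattern is `low` for interactive chat and `high` for hard math or debugging tasks.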

Application Scenarios:

✅ Intelligent AI Chat Assistant (optimized for low-latency conversations).

✅ Code Generation & Programming Assistance (Python, JavaScript, C++ code completion).

✅ Enterprise AI Solutions (corporate knowledge management, document analysis).

2.2 Grok-3

Developer: xAI (Elon Musk’s AI company)

Key Features:

- Multimodal processing, capable of handling both images and text.

- Leading in mathematical reasoning: according to xAI's reported results, it achieved the highest AIME 2025 score among the three models.

- Integration with social data, enabling real-time access to X (Twitter) data for up-to-date information retrieval.

Application Scenarios:

✅ Real-Time Market Data Analysis (suitable for financial analysis and stock market prediction).

✅ Social Media AI (strong information retrieval capabilities within the Twitter/X ecosystem).

✅ Scientific Research & Mathematical Reasoning (AI-driven scientific computing tasks).

2.3 DeepSeek-R1

Developer: DeepSeek AI

Key Features:

- Open weights released under the MIT license, supporting private, on-premise deployment.

- MoE (Mixture of Experts) architecture that activates only part of the network for each token, keeping inference efficient; strong in mathematical reasoning and code generation.

- Large context window (128K tokens), well suited to long-text analysis and knowledge-base Q&A.

Application Scenarios:

✅ Mathematical Modeling & Scientific Computing (strong in algebraic computations and problem-solving).

✅ AI Coding Assistant (high HumanEval score for code completion and optimization).

✅ Edge AI Deployment (via the smaller distilled variants, which can run on lower-power hardware such as edge devices).
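When DeepSeek-R1 is self-hosted, the open-weight model writes its chain of thought inside `<think>...</think>` tags before the final answer. Applications therefore usually separate the two before showing a response. Here is a minimal sketch, assuming at most one reasoning block per completion. `split_reasoning` is a hypothetical helper, and some serving stacks already strip or relocate this block for you.

```python
import re

# Non-greedy match so only the reasoning block is captured; DOTALL spans newlines.
_THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)

def split_reasoning(raw: str) -> tuple[str, str]:
    """Return (reasoning, answer) from a raw DeepSeek-R1 completion."""
    match = _THINK_RE.search(raw)
    if match:
        return match.group(1).strip(), raw[match.end():].strip()
    # Some chat templates pre-fill the opening <think> tag in the prompt,
    # so the completion may contain only the closing tag.
    if "</think>" in raw:
        reasoning, _, answer = raw.partition("</think>")
        return reasoning.strip(), answer.strip()
    # No reasoning block found: treat everything as the answer.
    return "", raw.strip()

if __name__ == "__main__":
    raw = "<think>2 + 2 is basic addition.</think>\nThe answer is 4."
    print(split_reasoning(raw))  # ('2 + 2 is basic addition.', 'The answer is 4.')
```

Keeping the reasoning text separate lets a private deployment log it for auditing while showing end users only the final answer.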

3. Technical Parameters and Architecture

The three AI models differ significantly in terms of computational efficiency, training methods, and reasoning capabilities. Below is a comparison of their core technical specifications.

3.1 Model Size and Training Data

| Model | Parameter Size | Context Window | Training Data / Method |
|---|---|---|---|
| ChatGPT-O3 (o3-mini) | Not disclosed by OpenAI | 200K tokens | Not disclosed in detail; reasoning trained with large-scale reinforcement learning |
| Grok-3 | Not disclosed by xAI | Up to 1M tokens (per xAI's announcement) | Open text + social media data (Twitter/X) |
| DeepSeek-R1 | 671B total, 37B active per token (MoE) | 128K tokens | Reinforcement-learning reasoning training on DeepSeek-V3-Base, emphasizing code, mathematics, and science |

ChatGPT-O3 is positioned as a general-purpose reasoning model, suitable for a broad range of NLP and coding tasks.

Grok-3 incorporates Twitter/X data, giving it an advantage in real-time information processing.

DeepSeek-R1 uses its MoE structure to activate only about 5.5% of its parameters for each token, which improves computational efficiency; its training focus makes it especially strong in mathematical and coding tasks.
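The context window determines how much text fits into a single request. When a document is longer than that, a common workaround is to split it into overlapping chunks that each fit a token budget, then process the chunks separately (for example, summarize each one and merge the summaries). Here is a minimal sketch, assuming roughly 4 characters per token for English text. That ratio is only a heuristic, so use the model's own tokenizer for exact counts. `chunk_for_context` is a hypothetical helper.

```python
def chunk_for_context(text: str, context_tokens: int, reserve_tokens: int = 1024,
                      overlap_tokens: int = 200, chars_per_token: float = 4.0) -> list[str]:
    """Split text into overlapping chunks that fit a model's context window.

    reserve_tokens leaves room for the prompt template and the model's reply.
    Token counts are estimated with a chars-per-token heuristic.
    """
    budget_tokens = context_tokens - reserve_tokens
    if budget_tokens <= overlap_tokens:
        raise ValueError("context too small for the requested reserve and overlap")
    chunk_chars = int(budget_tokens * chars_per_token)
    # Each new chunk starts slightly before the previous one ended (the overlap).
    step = chunk_chars - int(overlap_tokens * chars_per_token)
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_chars])
        if start + chunk_chars >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks
```

With the defaults, a 100,000-character document splits into four chunks for an 8,192-token window but fits in a single chunk for a 32,000-token window. This is why larger windows simplify long-document analysis.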

3.2 Architecture Comparison

These three models adopt different architectural designs:

```mermaid
graph TD
    subgraph O3["ChatGPT-O3 (OpenAI)"]
        A1[Standard Transformer]
        A2[Enhanced Fine-Tuning]
        A3[RLHF Training]
    end
    subgraph GROK["Grok-3 (xAI)"]
        B1[Extended Transformer]
        B2[Instruction Optimization]
        B3[Social Media Data Integration]
    end
    subgraph R1["DeepSeek-R1 (DeepSeek)"]
        C1[MoE Architecture]
        C2[Efficient Inference]
        C3[Code + Mathematics Training]
    end
    A1 --> A2 --> A3
    B1 --> B2 --> B3
    C1 --> C2 --> C3
```

Key Architecture Differences:

- ChatGPT-O3 builds on the Transformer architecture (OpenAI has not published further details) and is trained with reinforcement learning, including RLHF, which enhances its reasoning, conversational fluency, and code generation.

- Grok-3 employs instruction optimization, which helps with task comprehension and social data analysis.

- DeepSeek-R1 uses an MoE (Mixture of Experts) architecture that routes each token to a small subset of expert sub-networks, optimizing computational efficiency; combined with its reasoning-focused training, this makes it well suited to mathematical and coding inference tasks.
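MoE's efficiency comes from sparse routing. A small gating function scores every expert for each token, and only the top-k experts are actually evaluated. The toy layer below shows that mechanism in plain Python, using hypothetical scalar-multiplier "experts" and a dot-product gate. It is a conceptual sketch, not DeepSeek's implementation, which uses a more elaborate design with shared experts and load balancing.

```python
import math
from typing import Callable, Sequence

Expert = Callable[[list[float]], list[float]]

def softmax(xs: Sequence[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(token: list[float], gate_weights: list[list[float]],
                experts: list[Expert], k: int = 2) -> tuple[list[float], list[int]]:
    """Route one token vector through its top-k experts.

    gate_weights[i] is the gating vector for expert i; its dot product with
    the token is that expert's routing score.
    """
    scores = [sum(w * x for w, x in zip(g, token)) for g in gate_weights]
    top_k = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Renormalize the gate over the selected experts only.
    weights = softmax([scores[i] for i in top_k])
    out = [0.0] * len(token)
    for w, i in zip(weights, top_k):
        y = experts[i](token)  # only the k selected experts are evaluated
        out = [o + w * v for o, v in zip(out, y)]
    return out, top_k

if __name__ == "__main__":
    experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
    gates = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]
    out, chosen = moe_forward([1.0, 2.0], gates, experts, k=2)
    print(chosen, [round(v, 3) for v in out])  # [2, 1] [2.731, 5.462]
```

With four experts and k = 2, half of the experts are skipped for this token. DeepSeek-R1 applies the same idea at a much larger scale, which is why only about 37B of its 671B parameters are active per token.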

3.3 Computational Cost Comparison

When using AI models, computational resources and inference efficiency are critical considerations. Below is a comparison of ChatGPT-O3, Grok-3, and DeepSeek-R1 in terms of computational consumption:

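| Model | Inference Speed | VRAM Requirement (self-hosting) | Best Deployment Environment |
|---|---|---|---|
| ChatGPT-O3 (o3-mini) | Fast (optimized by OpenAI for low latency) | Not applicable: weights are not released; served only through OpenAI's API and ChatGPT | Cloud (OpenAI-hosted) |
| Grok-3 | Moderate | Not applicable: weights were not publicly released at launch; served through xAI's API and apps | Cloud (xAI-hosted) |
| DeepSeek-R1 | Highly efficient (MoE activates 37B of 671B parameters per token) | Full model needs a multi-GPU server (hundreds of GB); distilled variants (1.5B to 70B) can run on a single GPU when quantized | Private deployment; distilled variants for edge computing |

Computational Efficiency Summary:

- DeepSeek-R1 has the highest per-token computational efficiency thanks to sparse MoE activation, and its open weights allow on-premise inference; edge AI deployments rely on its smaller distilled variants.

- ChatGPT-O3 runs only as a hosted cloud service. As a reasoning model, it generates hidden reasoning tokens before answering, so harder prompts (or a higher reasoning effort) cost more compute and latency.

- Grok-3 is also consumed as a hosted service with relatively high computational cost, making it better suited to enterprise-scale use than to lightweight applications.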
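A quick way to sanity-check VRAM claims is to multiply the parameter count by bytes per parameter. The sketch below counts weights only. It ignores the KV cache, activations, and runtime overhead, so real requirements are higher. It uses DeepSeek-R1's published counts (671B total, 37B active) and one of its distilled sizes (32B). o3-mini and Grok-3 are omitted because their sizes are undisclosed.

```python
# Bytes needed to store one parameter at common inference precisions.
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "int4": 0.5}

def weight_memory_gb(params_billion: float, precision: str) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes).

    Billions of parameters times bytes per parameter gives gigabytes directly.
    KV cache, activations, and runtime overhead are NOT included.
    """
    return params_billion * BYTES_PER_PARAM[precision]

if __name__ == "__main__":
    total_b, active_b = 671, 37  # DeepSeek-R1 total vs. per-token active parameters
    print(f"Active share per token: {active_b / total_b:.1%}")  # 5.5%
    for name, size_b in [("DeepSeek-R1 (full)", total_b), ("R1 distilled 32B", 32)]:
        for precision in BYTES_PER_PARAM:
            print(f"{name:<20} {precision:<5} ~{weight_memory_gb(size_b, precision):,.1f} GB")
```

Two things follow. MoE cuts per-token compute to roughly 5.5% of the weights, but in typical serving setups every expert must stay resident in memory, so the full model needs on the order of 671 GB at FP8. A 32GB card is realistic only for a distilled model, for example the 32B variant at 4-bit precision (about 16 GB of weights, plus room for the KV cache).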

4. Reasoning Ability Comparison: Logic, Mathematics, Science, and Programming

The reasoning ability of AI models is a crucial measure of their performance, especially in logical reasoning, mathematical calculations, scientific analysis, and programming capabilities. Below, we compare ChatGPT-O3 (o3-mini), Grok-3, and DeepSeek-R1 in these core reasoning tasks.

4.1 Logical Reasoning

Logical reasoning ability determines how well a model performs in complex Q&A, causal relationship analysis, and long-text comprehension.

| Model | Logical Reasoning | Complex Problem Analysis | Multi-turn Conversation Coherence |
|---|---|---|---|
| ChatGPT-O3 (o3-mini) | Excellent | Strong (reinforced by RLHF training) | Outstanding (optimized for multi-turn conversations) |
| Grok-3 | Good | Strong (optimized for instruction-following tasks) | Moderate (context retention is average) |
| DeepSeek-R1 | Moderate | Strong | Strong |

Conclusion:

- ChatGPT-O3 excels in logical reasoning tasks, thanks to reinforcement learning (RLHF) fine-tuning, making it ideal for complex text-based Q&A and enterprise knowledge management.

- Grok-3 performs well in task comprehension and causal reasoning due to instruction optimization, but its context retention ability is weaker.

- DeepSeek-R1 is strong in mathematical reasoning but falls short in long-text logical inference compared to ChatGPT-O3.
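Multi-turn coherence also depends on how much conversation history the application resends. Most chat-completion APIs are stateless, so the model only "remembers" the earlier turns that are included in each request and fit in its context window. Here is a minimal sketch, assuming a rough 4-characters-per-token estimate and an OpenAI-style list of `{"role", "content"}` messages. `trim_history` is a hypothetical helper that keeps system messages and drops the oldest turns first.

```python
def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate; use the model's tokenizer for exact counts."""
    return max(1, int(len(text) / chars_per_token))

def trim_history(messages: list[dict], budget_tokens: int) -> list[dict]:
    """Keep system messages plus the most recent turns that fit within budget_tokens."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    used = sum(estimate_tokens(m["content"]) for m in system)
    kept = []
    # Walk backwards so the newest turns are kept first.
    for m in reversed(turns):
        cost = estimate_tokens(m["content"])
        if used + cost > budget_tokens:
            break
        kept.append(m)
        used += cost
    return system + list(reversed(kept))
```

Dropping whole turns from the oldest end is the simplest policy. Summarizing old turns instead of deleting them is a common refinement when long-range context matters.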

4.2 Mathematical Reasoning

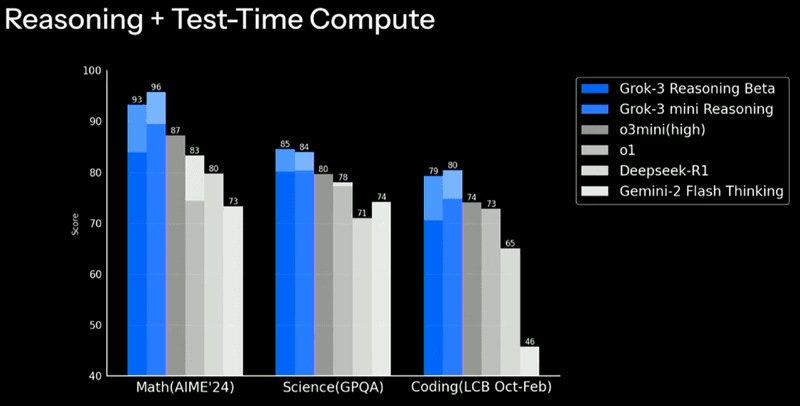

Mathematical reasoning ability determines a model’s performance in numerical calculations, algebraic reasoning, and sequence prediction, which are particularly important in scientific computing, financial modeling, and engineering computations.

| Model | Basic Math Skills | Complex Math Problems | AIME 2025 Score |
|---|---|---|---|
| ChatGPT-O3 (o3-mini) | Good | Average | 70%+ |
| Grok-3 | Moderate | Strong | 93% (highest score) |
| DeepSeek-R1 | Excellent | Strong (optimized for mathematics) | 80%+ |

Conclusion:

- Grok-3 achieved the highest score in the AIME 2025 math evaluation, surpassing both DeepSeek-R1 and ChatGPT-O3.

- DeepSeek-R1, whose reasoning ability comes largely from reinforcement-learning training, performs exceptionally well in advanced mathematics and numerical computations.

- ChatGPT-O3 has moderate mathematical reasoning capabilities, making it suitable for basic calculations and statistical tasks.
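AIME-style scores are easy to reproduce because every AIME answer is an integer from 0 to 999. Grading therefore reduces to an exact match on the final integer. Here is a minimal grader sketch, assuming the model's final answer is the last integer in the 0 to 999 range that appears in its output (some evaluations instead require a `\boxed{}` answer). `extract_answer` and `aime_accuracy` are hypothetical names. Because each AIME exam has 15 problems, a 93% score corresponds to about 14 of 15 problems on a single exam.

```python
import re
from typing import Optional

def extract_answer(output: str) -> Optional[int]:
    """Return the last integer in 0..999 found in the model output, or None."""
    for token in reversed(re.findall(r"-?\d+", output)):
        value = int(token)
        if 0 <= value <= 999:
            return value
    return None

def aime_accuracy(outputs: list[str], answers: list[int]) -> float:
    """Fraction of problems whose extracted answer exactly matches the key."""
    if len(outputs) != len(answers):
        raise ValueError("outputs and answers must have the same length")
    if not answers:
        return 0.0
    correct = sum(extract_answer(o) == a for o, a in zip(outputs, answers))
    return correct / len(answers)
```

Published numbers are often averaged over several sampled runs per problem, so small differences between models can fall within run-to-run noise.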

4.3 Scientific Reasoning

Scientific reasoning ability evaluates how well a model can handle physics, chemistry, biology, and engineering problems. Below is a comparison of the models in terms of scientific knowledge accuracy, inference ability, and experimental simulation.

| Model | Scientific Knowledge Depth | Experimental Simulation Reasoning | Cross-disciplinary Reasoning |
|---|---|---|---|
| ChatGPT-O3 (o3-mini) | Excellent | Average | Strong (rich knowledge base) |
| Grok-3 | Good | Good | Moderate (limited by training data) |
| DeepSeek-R1 | Moderate | Excellent | Average |

Conclusion:

- ChatGPT-O3 has the most comprehensive scientific knowledge, making it ideal for research support and experimental data analysis.

- DeepSeek-R1 excels in physics modeling and mathematical equation solving, making it useful for engineering computations and automated analysis.

- Grok-3 performs well in scientific reasoning and experimental simulation, making it suitable for enterprise R&D support.

4.4 Programming Reasoning

The ability to generate and debug code is a key factor in software engineering, automated development, and code optimization. Below is a comparison of ChatGPT-O3, Grok-3, and DeepSeek-R1 in programming tasks.

| Model | Code Generation Ability | Debugging Ability | Supported Programming Languages |
|---|---|---|---|
| ChatGPT-O3 (o3-mini) | Excellent | Strong (can explain errors) | Python, JavaScript, C++, Java |
| Grok-3 | Good | Moderate | Python, Rust, TypeScript |
| DeepSeek-R1 | Strong (optimized for code completion) | Excellent (supports large project analysis) | Python, C++, Go, Rust |

Conclusion:

- ChatGPT-O3 is best for code generation, explanation, and debugging, with strong Python support.

- DeepSeek-R1 excels in code completion and analyzing large projects, making it well suited for enterprise-level software development.

- Grok-3 has solid support for specific languages like Rust but is slightly weaker in overall programming capabilities compared to ChatGPT-O3 and DeepSeek-R1.
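HumanEval, the programming benchmark cited throughout this article, checks whether generated functions pass hidden unit tests. Scores are commonly reported as pass@1 using the unbiased pass@k estimator from the paper that introduced HumanEval. You generate n samples per problem, count the c that pass, and estimate pass@k = 1 - C(n - c, k) / C(n, k), the probability that at least one of k randomly drawn samples passes. The function names below are ours; the formula is the published estimator.

```python
from math import comb

def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem: n samples, c of them correct."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)

def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, given one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

For example, three problems with 10 samples each and 5, 0, and 10 passing samples give a pass@1 of 0.5. This is why a single HumanEval percentage reflects sampling as well as model quality.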

5. Computational Resources vs. Inference Efficiency

Section 3.3 already compares the three models' inference speed, hardware requirements, and best deployment environments; this section looks at how they perform on standard benchmarks.

5.1 Benchmark Performance Comparison

| Model | MMLU (Knowledge Evaluation) | HumanEval (Programming) | GSM8K (Mathematical Reasoning) |
|---|---|---|---|
| ChatGPT-O3 (o3-mini) | 85% | 82% | 70% |
| Grok-3 | 80% | 75% | 93% (highest score) |
| DeepSeek-R1 | 78% | 88% | 80% |

Benchmark Performance Summary:

- ChatGPT-O3 scores highest on general knowledge (MMLU) and second on programming (HumanEval), making it a strong fit for general-purpose AI applications.

- DeepSeek-R1 scores highest on programming (HumanEval) and second on mathematical reasoning, making it ideal for computation-heavy tasks.

- Grok-3 leads in mathematical reasoning but lags behind in programming and conversational optimization.
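Reading the benchmark table column by column makes these trade-offs explicit. The snippet below encodes only the scores listed in the table in this section and picks the leader for each benchmark. It adds no new data.

```python
# Scores (%) exactly as listed in the benchmark table in this section.
SCORES = {
    "ChatGPT-O3 (o3-mini)": {"MMLU": 85, "HumanEval": 82, "GSM8K": 70},
    "Grok-3": {"MMLU": 80, "HumanEval": 75, "GSM8K": 93},
    "DeepSeek-R1": {"MMLU": 78, "HumanEval": 88, "GSM8K": 80},
}

def leaders(scores: dict[str, dict[str, float]]) -> dict[str, str]:
    """Return the top-scoring model for each benchmark column."""
    benchmarks = next(iter(scores.values())).keys()
    return {b: max(scores, key=lambda model: scores[model][b]) for b in benchmarks}

if __name__ == "__main__":
    for bench, model in leaders(SCORES).items():
        print(f"{bench}: {model}")
```

Each model leads exactly one column, which is why no single model wins outright and the choice depends on the workload.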

6. Multimodal Capabilities Comparison

As AI models continue to evolve, multimodal capabilities (handling text, images, audio, and video) have become an important area of development. The ability to process multiple types of data determines a model’s potential for future applications.

6.1 Multimodal Data Support

| Model | Text Processing | Image Processing | Audio Processing | Video Understanding |
|---|---|---|---|---|
| ChatGPT-O3 (o3-mini) | Strong (optimized for long-text processing) | Not supported in o3-mini (future expansion possible) | Not supported | Not supported |
| Grok-3 | Good | Limited (experimental image processing) | Moderate (basic speech synthesis) | Limited (under development) |
| DeepSeek-R1 | Excellent (focused on text analysis) | Not supported (focused on text and code) | Not supported | Not supported |

Trends and Predictions:

- ChatGPT-O3 is likely to expand into multimodal AI, potentially integrating with OpenAI’s DALL·E 3 (image generation) and Whisper (speech recognition).

- Grok-3 has already experimented with multimodal capabilities, particularly in speech and image processing, but these features are still in early stages.

- DeepSeek-R1 remains focused on text, code, and mathematical computation, with no announced plans for multimodal expansion.

6.2 Future Multimodal Expansions

```mermaid
graph LR
    A[ChatGPT-O3] -->|Possible Expansion| B[Image Processing]
    A -->|Potential Future Development| C[Audio Generation]
    A -->|Under Development| D[Video Understanding]
    E[Grok-3] -->|Experimental Features| B
    E -->|Basic Support| C
    E -->|Initial Testing| D
    F[DeepSeek-R1] -->|Primarily Focused on Text and Code| G[No Multimodal Support]
```

Summary:

- ChatGPT-O3 is expected to expand into image, speech, and video processing in the future, aligning with OpenAI’s broader multimodal AI strategy.

- Grok-3 has already made early attempts at multimodal AI, but these features are still being refined.

- DeepSeek-R1 continues to focus on text, code, and mathematical reasoning, with no immediate plans for multimodal expansion.

7. Application Scenarios Comparison

Different AI models are suited for different application scenarios. Below is a comparison of the best use cases for ChatGPT-O3 (o3-mini), Grok-3, and DeepSeek-R1.

7.1 Primary Application Scenarios

| Application Area | ChatGPT-O3 (o3-mini) | Grok-3 | DeepSeek-R1 |
|---|---|---|---|
| Code Generation | Strong (Python, JS, C++) | Moderate (Good Rust support) | Excellent (Optimized for large-scale code completion) |
| Text Summarization | Excellent (Legal, academic paper summarization) | Strong (Social media data analysis) | Good (Suitable for technical documentation) |
| Financial Analysis | Good (Strong data interpretation skills) | Excellent (Ideal for real-time financial data analysis) | Average (Not optimized for real-time data) |
| Medical AI | Good (Medical literature analysis) | Average | Average |
| Automated Customer Support | Excellent (Optimized for multi-turn conversations) | Good (Suitable for enterprise knowledge base) | Moderate (Best for FAQ-based systems) |
| Scientific Research & Mathematics | Good (General mathematical reasoning) | Average (Less optimized for scientific computing) | Excellent (Best for mathematical modeling and scientific computing) |

Conclusions:

- ChatGPT-O3 is best suited for code generation, text processing, and conversational AI, making it ideal for developers, enterprise AI assistants, and document management.

- Grok-3 is best for financial analysis, social data processing, and market trend predictions, making it suitable for financial institutions and social media data mining.

- DeepSeek-R1 is optimized for mathematics, scientific computing, and coding tasks, making it ideal for mathematical modeling, engineering calculations, and AI programming assistants.

8. Conclusion: How to Choose the Right AI Model?

8.1 Comprehensive Comparison

| Model | Strengths | Weaknesses |
|---|---|---|
| ChatGPT-O3 (o3-mini) | Best overall performance, excellent coding ability, and strong text processing | High computational cost |
| Grok-3 | Best for financial analysis, social data processing, and mathematical reasoning | Slower inference, high resource consumption |
| DeepSeek-R1 | Most computationally efficient, best for mathematics and code generation | No multimodal support (text and code only) |

8.2 Recommended Users

✅ Developers & AI Coding Assistants → ChatGPT-O3 or DeepSeek-R1 (Best for coding tasks)

✅ Financial & Social Data Analysis → Grok-3 (Ideal for market prediction and financial modeling)

✅ Mathematics, Engineering Computation & Private Deployment → DeepSeek-R1 (Best for on-premise AI and edge computing)
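Teams that use more than one model often encode recommendations like these in a small routing table, so each request goes to the model best suited to it. The sketch below is hypothetical. The task labels and the `pick_model` helper are illustrative, and the mapping simply mirrors the recommendations in Section 7 and in the list above.

```python
from enum import Enum

class Task(Enum):
    CODING = "coding"
    FINANCE = "finance"
    SOCIAL_DATA = "social_data"
    MATH = "math"
    PRIVATE_DEPLOYMENT = "private_deployment"
    CUSTOMER_SUPPORT = "customer_support"

# Mirrors this article's recommendations; adjust to your own evaluations.
ROUTES = {
    Task.CODING: ["ChatGPT-O3 (o3-mini)", "DeepSeek-R1"],
    Task.FINANCE: ["Grok-3"],
    Task.SOCIAL_DATA: ["Grok-3"],
    Task.MATH: ["DeepSeek-R1"],
    Task.PRIVATE_DEPLOYMENT: ["DeepSeek-R1"],
    Task.CUSTOMER_SUPPORT: ["ChatGPT-O3 (o3-mini)"],
}

# Per this article, only DeepSeek-R1 supports private, on-premise deployment.
SELF_HOSTABLE = {"DeepSeek-R1"}

def pick_model(task: Task, must_self_host: bool = False) -> str:
    """Return the first recommended model, honoring a self-hosting requirement."""
    candidates = ROUTES[task]
    if must_self_host:
        candidates = [m for m in candidates if m in SELF_HOSTABLE]
        if not candidates:
            raise ValueError(f"no self-hostable model recommended for {task.value}")
    return candidates[0]
```

Keeping the mapping in data rather than in scattered `if` statements makes it easy to update when new benchmark results or model versions change the recommendations.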

8.3 Future Trends

Low-Power AI

- Future AI models will focus on optimizing computational efficiency, reducing GPU requirements, and improving edge AI deployment.

Multimodal AI

- ChatGPT-O3 and Grok-3 are expected to expand into video, audio, and image processing, making AI more versatile.

Adaptive AI

- DeepSeek-R1 may integrate adaptive AI technologies, improving real-time optimization for mathematical and coding tasks.