As smart manufacturing and industrial automation continue to evolve, traditional acoustic detection and single-sensor systems are showing their limitations. That's why multimodal AI, which integrates voice and sound recognition AI, video surveillance, and environmental sensing, is emerging as a more intelligent and robust solution for industrial anomaly detection and fault response.

Why Industry Is Adopting Multimodal AI for Smarter Monitoring

Traditionally, industrial equipment health monitoring and security relied on manual inspection or on single-sensor systems focused on sound, vibration, or temperature. These systems often faced issues such as:

- High false-alarm rate: Environmental noise makes it hard to identify sound events precisely, causing missed detections or false alerts.

- No real-time traceability: Sound alone can't fully reconstruct incidents or support timely review and localization.

- Slow response: Manual verification delays the response and misses the best window for resolution.

- Limited compatibility: Diverse factories and environments require different signal models, making general solutions hard to deploy.

With the spread of the Industrial IoT (IIoT), edge computing, and AI chips, more enterprises are exploring smart surveillance systems that combine audio, video, and environmental data. Solution providers are upgrading from legacy single-signal analysis platforms to AI-powered multimodal perception systems to add higher-value capabilities and strengthen their competitiveness.

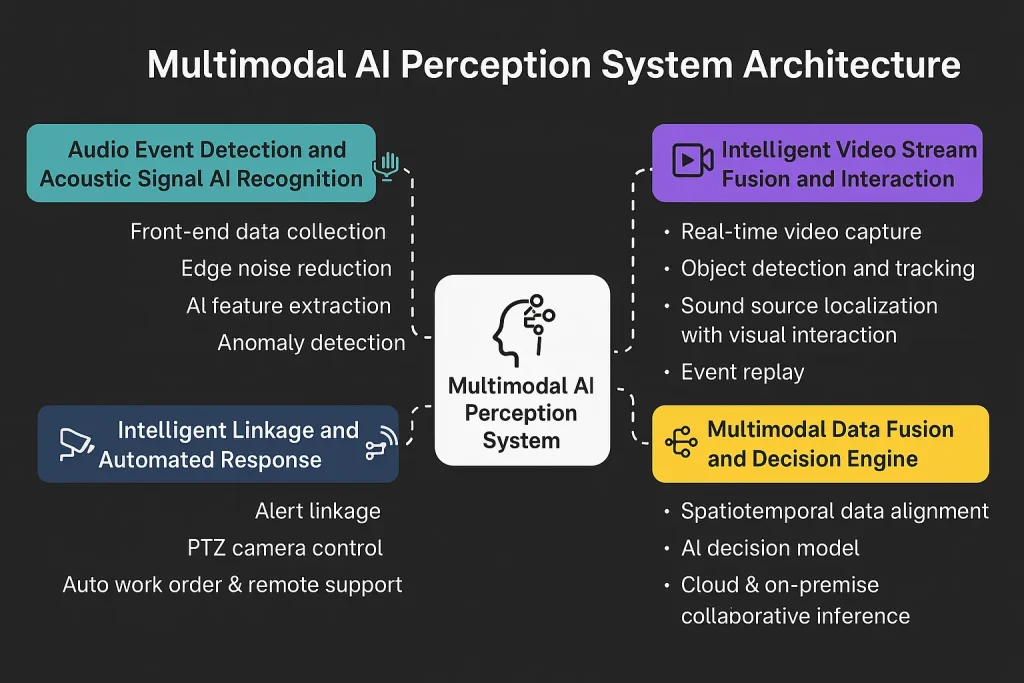

Core Architecture of Multimodal AI Systems for Voice and Video Fusion

AI multimodal sensing systems integrate multiple sensors (microphone arrays, cameras, temperature/humidity sensors, etc.) with AI inference engines, running locally or in the cloud, to deliver these key capabilities:

- Sound event detection and recognition: Deep neural networks (e.g., CNNs, Transformers) distinguish abnormal noise, mechanical faults, alarms, and other sound events.

- Video stream fusion and object detection: Video footage is analyzed in sync with the audio, linking sound sources to visual tracking to improve event reconstruction.

- Multimodal data correlation and decision-making: Build spatial-temporal fusion models from sound, video, and environmental data to reduce false alarms and enable automatic event classification.

- Intelligent linkage and remote response: Automatically trigger alarms, control PTZ cameras, or initiate remote inspection/workflows.

```mermaid
---
title: "AI Multimodal Perception System Architecture"
---
graph TD
    A["Sound Collection (Mic Array)"] --> C["Multimodal AI Processing Engine"]
    B["Video Collection (Camera)"] --> C
    D["Environmental Sensors (Temp/Humidity/Gas)"] --> C
    C --> E["Anomaly Detection & Event Recognition"]
    E --> F["Smart Linkage & Remote Alerts"]
    F --> G["Auto Work Order / Remote Handling"]
```

Technologies Behind Voice Recognition AI and Video-Based Multimodal AI

The core strength of an AI multimodal system lies in multi-source data fusion and an intelligent decision engine. The modules and their technical implementation are broken down below.

1. Sound Event Detection & Acoustic AI Recognition

- Frontend Collection: Industrial-grade microphone arrays capture audio signals; local A/D conversion yields high-resolution raw audio.

- Edge Noise Reduction: Time- and frequency-domain techniques (Wiener filtering, FFT-based spectral filtering, wavelet denoising) suppress background noise and raise the signal-to-noise ratio.

- AI Feature Extraction: Audio is converted into time-frequency representations such as Mel spectrograms, from which CNN and Transformer models learn deep spectral and temporal features to identify key sound events.

- Anomaly Classification: Match known acoustic signatures or use unsupervised learning to discover new anomalies.
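The deep CNN/Transformer classifiers above are beyond a short example, but the unsupervised "discover new anomalies" idea can be sketched with a much simpler stand-in: compute the RMS level of each audio frame and flag frames that sit far above a baseline learned online. This is a minimal sketch, not a production detector; the function names, the 10-frame warm-up, and the z-score threshold of 4.0 are illustrative assumptions.

```python
import math
from typing import List, Sequence


def frame_rms(samples: Sequence[float], frame_len: int) -> List[float]:
    """Split audio into non-overlapping frames and return each frame's RMS level.

    A trailing partial frame is dropped.
    """
    levels = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        levels.append(math.sqrt(sum(x * x for x in frame) / frame_len))
    return levels


def flag_anomalous_frames(levels: Sequence[float], warmup: int = 10,
                          z_threshold: float = 4.0,
                          min_std: float = 1e-6) -> List[int]:
    """Return indices of frames whose level sits far above the running baseline.

    The baseline mean/variance is learned online (Welford's algorithm) from frames
    judged normal only, so a sustained anomaly is not absorbed into the baseline.
    Thresholds here are illustrative assumptions, not tuned values.
    """
    count, mean, m2 = 0, 0.0, 0.0
    flagged = []
    for i, value in enumerate(levels):
        # Need at least two normal frames before a sample variance exists.
        if count >= max(warmup, 2):
            std = max(math.sqrt(m2 / (count - 1)), min_std)
            if (value - mean) / std > z_threshold:
                flagged.append(i)
                continue  # skip the baseline update for anomalous frames
        # Welford update using a frame considered normal
        count += 1
        delta = value - mean
        mean += delta / count
        m2 += delta * (value - mean)
    return flagged
```

In practice the frame levels would come from denoised audio (e.g., `frame_len=1024` at 16 kHz gives 64 ms frames), and a learned embedding would replace raw RMS so that quiet but unusual sounds are caught as well.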

2. Smart Video Fusion & Linkage

- Real-time Video Capture: Use high-definition RTSP/ONVIF-compatible cameras for 24/7 monitoring across the site.

- Object Detection & Tracking: AI models (e.g., YOLOv8, DETR) identify machines, people, and relevant zones in real time.

- Sound-Visual Synchronization: Microphone arrays locate sound sources, and PTZ cameras automatically pan, tilt, and zoom to the suspicious zone, enabling "sound-driven visual tracking."

- Event Review: Synchronize sound and video to auto-record and tag event clips for forensics and diagnostics.
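The sound-localization step can be illustrated with the simplest case: two microphones and the time difference of arrival (TDOA). The sketch below finds the integer-sample lag that maximizes cross-correlation and converts it to a bearing under a far-field assumption, sin(θ) = c·τ / d. It is a brute-force illustration assuming two synchronized channels; real arrays typically use more microphones and more robust estimators such as GCC-PHAT, and mapping the bearing to a PTZ pan angle depends on how the array and camera are installed.

```python
import math
from typing import Sequence

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C (assumed)


def estimate_lag(ref: Sequence[float], other: Sequence[float], max_lag: int) -> int:
    """Return the integer lag (in samples) that maximizes cross-correlation.

    A positive lag means `other` receives the sound later than `ref`.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i in range(len(ref)):
            j = i + lag
            if 0 <= j < len(other):
                score += ref[i] * other[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def physical_max_lag(mic_spacing: float, sample_rate: float) -> int:
    """Largest lag (in samples) physically possible for this microphone spacing."""
    return math.ceil(mic_spacing / SPEED_OF_SOUND * sample_rate)


def bearing_deg(lag: int, sample_rate: float, mic_spacing: float) -> float:
    """Convert a TDOA lag to an angle from broadside, in [-90, 90] degrees.

    Positive angles point toward the reference microphone's side.
    """
    tdoa = lag / sample_rate
    ratio = SPEED_OF_SOUND * tdoa / mic_spacing
    ratio = max(-1.0, min(1.0, ratio))  # clamp noise-induced overshoot
    return math.degrees(math.asin(ratio))
```

Limiting the search to `physical_max_lag` both saves computation and rejects lags that no real source could produce, e.g. 14 samples for a 0.1 m spacing at 48 kHz.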

3. Multimodal Fusion & Decision Engine

- Temporal-Spatial Alignment: Sound, video, and environmental data are synced via timestamps to form an integrated event stream.

- AI Decision Models: Multimodal Transformers, GNNs, and similar models learn cross-modal and inter-event relationships to reduce false positives.

- Cloud + Edge Inference: Edge gateways screen events locally; complex cases are escalated to cloud AI for deeper analysis, balancing speed and accuracy.
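A learned multimodal Transformer or GNN is too large for a short example, so the sketch below swaps in a deliberately simple technique, weighted late fusion: detections from each modality are aligned on a shared clock, the best score per modality inside a time window is combined, and the edge gateway either ignores, alerts, or escalates the uncertain middle band to the cloud. The `Detection` type, modality weights, window size, and the 0.3/0.8 thresholds are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Detection:
    modality: str      # "audio", "video" or "env"
    timestamp: float   # seconds on a shared, synchronized clock (assumed)
    confidence: float  # detector score in [0, 1]


def fuse_window(detections: List[Detection], center: float, window: float,
                weights: Dict[str, float]) -> float:
    """Weighted late fusion of the best detection per modality within +/- window seconds."""
    best: Dict[str, float] = {}
    for d in detections:
        if abs(d.timestamp - center) <= window:
            best[d.modality] = max(best.get(d.modality, 0.0), d.confidence)
    total_weight = sum(weights.values())
    return sum(w * best.get(m, 0.0) for m, w in weights.items()) / total_weight


def route(score: float, low: float = 0.3, high: float = 0.8) -> str:
    """Edge decision: drop clear negatives, alert on clear positives,
    and escalate the uncertain middle band to the cloud for deeper analysis."""
    if score < low:
        return "ignore"
    if score >= high:
        return "alert"
    return "escalate_to_cloud"
```

Because a missing modality contributes zero, an event confirmed by both audio and video scores higher than the same audio event alone, which is the cross-validation effect that reduces false alarms.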

4. Smart Linkage & Auto Response

- Alert Automation: Upon anomaly detection, the system pushes alerts via SMS, app, WeChat/Work WeChat, etc., and can trigger on-site alarms.

- Camera PTZ Control: Automatically adjusts camera angles for multi-angle review and tracking.

- Auto Work Orders & Remote Support: For serious incidents, the system generates work orders and sends them to maintenance teams through an app or business system for remote resolution and tracking.
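The linkage rules above amount to a severity-to-actions mapping. The following minimal sketch assumes three severity levels; the action names are hypothetical placeholders for calls into the notification, camera-control, and work-order subsystems, not any real product's API.

```python
from enum import IntEnum
from typing import List


class Severity(IntEnum):
    INFO = 0
    WARNING = 1
    CRITICAL = 2


def plan_response(severity: Severity, has_ptz_camera: bool) -> List[str]:
    """Return the ordered response actions for a detected anomaly.

    Action names are hypothetical placeholders, not a real API.
    """
    actions = ["archive_event"]                  # every event is kept for review
    if severity >= Severity.WARNING:
        actions.append("push_alert")             # SMS / app / WeChat, etc.
        if has_ptz_camera:
            actions.append("ptz_goto_event_zone")
    if severity >= Severity.CRITICAL:
        actions.append("trigger_onsite_alarm")
        actions.append("create_work_order")      # dispatch to maintenance team
    return actions
```

Keeping the rules as data-driven, ordered actions makes it straightforward to expose them for per-site customization later.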

Real-world Use Cases of Multimodal AI in Smart Surveillance

Smart Factories & Unmanned Production Lines

- Acoustic fault detection of machinery, fused with visual positioning for rapid fault localization.

- Linkage with robotic arms/AGVs for automatic hazard avoidance and production recovery.

Smart Campuses & Building Security

- Recognize breaking glass, screaming, impact sounds; auto-track source with surveillance cameras.

- Enable distributed anomaly detection across floors and zones with centralized remote management.

Energy & Infrastructure Maintenance

- Detect leaks, explosions, or abnormal sounds in substations, pump rooms, gas pipelines; auto-trigger video lockdown for safety.

Remote Unattended Sites

- Combine sound, video, and environmental sensors for 24/7 monitoring of remote or field facilities.

- Auto-report anomalies and dispatch remote work orders without on-site staff.

Multimodal AI vs Traditional Monitoring Systems: Accuracy, Speed & ROI

| Dimension | Traditional Audio/Video | Multimodal AI System |
|---|---|---|
| Accuracy | Prone to noise, high false alarms | Audio + Video + Environment greatly enhances robustness |
| Response Speed | Manual review, delayed action | Automatic detection, smart linkage, real-time alerts |
| Traceability | Separate audio/video storage | Unified event archive, easier review, better reconstruction |
| Scalability | Needs case-by-case adaptation | Fast model iteration, easier deployment to new scenes |
| Deployment | Limited sensors, few interfaces | Unified multi-source collection, cloud/edge ready |
| Maintenance | Labor-intensive | Remote ops, auto work orders, saves manpower |

```mermaid
---
title: "Multimodal AI Anomaly Detection Flow"
---
flowchart TD
    A["On-site Multi-source Collection"] --> B["Local Preprocessing & AI Event Detection"]
    B --> C{"Anomaly Detected?"}
    C -- "No" --> D["Normal Operation"]
    C -- "Yes" --> E["Camera Tracking via Smart Linkage"]
    E --> F["Remote Alert / Work Order Dispatch"]
    F --> G["Cloud Event Archive & Review"]
```

Best Practices for Multimodal AI Deployment in Industrial Environments

Deploying AI multimodal sensing systems requires consideration across hardware, network, software, and maintenance. Based on real-world projects, key tips include:

1. Hardware & Sensor Layout

- Mic Array Selection: Use industrial-grade noise-resistant mics with directional pickup and noise-canceling algorithms.

- HD Camera Setup: Choose cameras with low-light performance, infrared night vision, and PTZ control for all-weather, full-scene coverage.

- Environmental Sensor Integration: Add temp/humidity, gas, vibration sensors for richer event insight.
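One concrete constraint in mic array selection is element spacing: for a uniform linear array, spacing larger than half the shortest wavelength of interest causes spatial aliasing (ambiguous directions), so d ≤ c / (2·f_max). The helper below is a small worked sketch of that rule, assuming sound travels at about 343 m/s.

```python
SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C (assumed)


def max_mic_spacing(f_max_hz: float, c: float = SPEED_OF_SOUND) -> float:
    """Largest element spacing (m) that avoids spatial aliasing up to f_max_hz.

    Uniform linear arrays need d <= lambda_min / 2 = c / (2 * f_max).
    """
    if f_max_hz <= 0:
        raise ValueError("f_max_hz must be positive")
    return c / (2.0 * f_max_hz)
```

For example, localizing machine sounds up to 8 kHz calls for spacing of at most 343 / 16000 ≈ 0.0214 m (about 21 mm); wider spacing improves low-frequency resolution but only if the upper analysis band is reduced accordingly.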

2. Network & Data Architecture

- Edge-first Processing: Edge AI gateways handle local screening to reduce upload load and latency.

- Cloud Collaboration: The cloud handles complex analysis, model training, and OTA updates, keeping the AI models evolving.

- Data Security Compliance: Encrypt all sensitive audio/video, and follow GDPR or other relevant regulations.
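The benefit of edge-first processing is easy to estimate with back-of-the-envelope arithmetic. The sketch below compares streaming one camera to the cloud around the clock against uploading only event clips screened at the edge; the 4 Mbps bitrate, 20 events per day, and 30-second clip length are illustrative assumptions, not measurements.

```python
SECONDS_PER_DAY = 24 * 3600


def upload_gb(bitrate_mbps: float, seconds: float) -> float:
    """Data volume in gigabytes (1 GB = 10**9 bytes) for a stream of the given bitrate."""
    return bitrate_mbps * 1e6 * seconds / 8 / 1e9


# Illustrative assumptions: one camera at 4 Mbps, 20 events per day, 30 s clip per event.
BITRATE_MBPS = 4.0
continuous_gb = upload_gb(BITRATE_MBPS, SECONDS_PER_DAY)  # stream everything to the cloud
event_only_gb = upload_gb(BITRATE_MBPS, 20 * 30)          # edge uploads event clips only

print(f"continuous: {continuous_gb:.1f} GB/day, event-only: {event_only_gb:.1f} GB/day, "
      f"reduction: {continuous_gb / event_only_gb:.0f}x")
```

Under these assumptions the upload drops from about 43.2 GB to 0.3 GB per camera per day, a roughly 144x reduction, before audio and sensor streams are even counted.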

3. Platform & Algorithm Development

- Open APIs & Protocol Support: Ensure support for RESTful, MQTT, WebSocket, etc., for third-party integration.

- Multimodal Algorithm Support: Use frameworks such as PyTorch or TensorFlow for multi-task, heterogeneous-data fusion models, and inference toolkits such as OpenVINO for optimized edge deployment.

- Custom Training & Scene Adaptation: Allow local annotation and fine-tuning for different industries/environments to improve generalization.
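Third-party integration over MQTT or REST mostly comes down to an agreed event schema and addressing scheme. The sketch below shows one possible shape using only the standard library: a hypothetical `AnomalyEvent` record serialized to JSON, plus a hypothetical `<site>/<device>/events/<type>` topic layout. Field names and the topic layout are assumptions; actually publishing would go through an MQTT client library such as paho-mqtt, which is not shown.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class AnomalyEvent:
    # Hypothetical schema for illustration only.
    site_id: str
    device_id: str
    event_type: str          # e.g. "bearing_noise", "glass_break"
    timestamp: str           # ISO 8601, UTC
    confidence: float
    modalities: List[str] = field(default_factory=list)
    clip_url: str = ""       # link to the archived audio/video clip, if any


def mqtt_topic(event: AnomalyEvent) -> str:
    """Hypothetical topic layout: <site>/<device>/events/<type>."""
    return f"{event.site_id}/{event.device_id}/events/{event.event_type}"


def to_payload(event: AnomalyEvent) -> str:
    """Serialize to JSON; the same body could be POSTed to a REST endpoint."""
    return json.dumps(asdict(event), sort_keys=True)
```

Putting site and device in the topic lets subscribers filter with MQTT wildcards (e.g., `plant-a/+/events/#`), while the JSON body stays identical across MQTT, REST, and WebSocket consumers.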

4. Deployment & Maintenance

- Phased Rollout Strategy: Start with pilot zones, then expand gradually for risk control and knowledge reuse.

- Remote Monitoring & Visualization: Backend should offer health monitoring, online alerts, and event review to reduce manual workload.

- Continuous Optimization: Periodically review false positives/negatives and adjust sensor placement or AI parameters.
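Reviewing false positives and negatives can feed directly into threshold tuning. The sketch below assumes each reviewed event is stored as a (detector score, confirmed-real) pair and picks the candidate threshold with the best F1 score. F1 is an assumed objective; a safety-critical site might instead choose the highest threshold that keeps false negatives at zero.

```python
from typing import List, Tuple


def confusion_at(scored: List[Tuple[float, bool]], threshold: float) -> Tuple[int, int, int]:
    """Count (true positives, false positives, false negatives) at a threshold.

    `scored` holds (detector score, reviewed-as-real-anomaly) pairs from event review.
    """
    tp = fp = fn = 0
    for score, is_real in scored:
        predicted = score >= threshold
        if predicted and is_real:
            tp += 1
        elif predicted and not is_real:
            fp += 1
        elif not predicted and is_real:
            fn += 1
    return tp, fp, fn


def best_threshold(scored: List[Tuple[float, bool]]) -> Tuple[float, float]:
    """Try each observed score as a threshold; return (threshold, F1) with the best F1."""
    best = (1.0, 0.0)
    for threshold in sorted({s for s, _ in scored}):
        tp, fp, fn = confusion_at(scored, threshold)
        denom = 2 * tp + fp + fn
        f1 = 2 * tp / denom if denom else 0.0
        if f1 > best[1]:
            best = (threshold, f1)
    return best
```

Re-running this on each review cycle's labeled events gives a traceable, data-driven reason for every threshold change instead of ad hoc adjustments.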

The Future of Video Surveillance and Anomaly Detection Powered by Multimodal AI

Trends & Outlook

AI multimodal perception is reshaping industrial monitoring and smart security with its automation, full-scene coverage, and high accuracy. Looking ahead:

- Edge-cloud synergy & self-evolving AI: Architectures pairing edge devices with cloud AI will become dominant, enabling seamless model updates and adaptation.

- Foundation Models for Perception: Audio-visual foundation models will enable richer event understanding and reasoning.

- Deeper OT/IT Integration: Perception systems will link with MES, SCADA, EAM for full-loop operations and predictive maintenance.

- Fully Automated Ops & Low-code Tools: Non-experts can easily customize rules and responses via low-code interfaces.

- Privacy & Explainability: Privacy-preserving techniques such as federated learning and homomorphic encryption will protect sensitive data, while explainability methods make AI decisions more transparent and trustworthy.

Value Proposition

AI multimodal perception combines sound, video, and environmental data to bring a new level of intelligence to equipment monitoring, campus security, and unattended site management. Compared with traditional methods, it offers higher detection accuracy, end-to-end automation, remote response, and lower labor and error costs.

For solution providers, embracing this AI-driven shift and building automated, intelligent, and scene-adaptive industrial monitoring and safety products is key to staying competitive. As AI, edge computing, and foundation models evolve, smart perception systems will continue to unlock new scenarios and business value.

Frequently Asked Questions

1. What is multimodal AI in industrial monitoring?

Multimodal AI combines data from microphones, cameras, and environmental sensors to provide more accurate anomaly detection and intelligent video surveillance capabilities.

2. How does voice recognition AI improve fault detection?

Voice recognition AI, applied here as acoustic event recognition, detects abnormal sound patterns such as leaks, impacts, or alarms, enabling early fault detection and automated alerts in smart industrial systems.

3. What makes multimodal AI more effective than traditional systems?

Unlike standalone audio or video setups, multimodal AI systems deliver smart surveillance through cross-validation across data sources, reducing false alarms and improving event traceability.

4. Can I integrate video surveillance and voice recognition AI into existing setups?

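Usually, yes. Modular multimodal AI systems are designed to integrate with existing infrastructure through standard interfaces, such as RTSP/ONVIF for cameras and RESTful APIs or MQTT for data, whether you're upgrading a factory, a campus, or a remote infrastructure site.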