A practical guide for developers: learn what AG-UI is, where it fits in, and why it matters.

Why Agent–User Communication Needs a Standard Like AG-UI

AI agents are great at doing tasks behind the scenes. But when it comes to talking with users on the frontend, things often get messy. That’s where AG-UI (Agent–User Interaction Protocol) comes in: it makes communication between agents and UIs consistent and predictable, filling a gap among frontend AI protocols designed for real-time human–agent interaction.

What Is AG-UI? The Agent–User Interaction Protocol Explained

What It Is

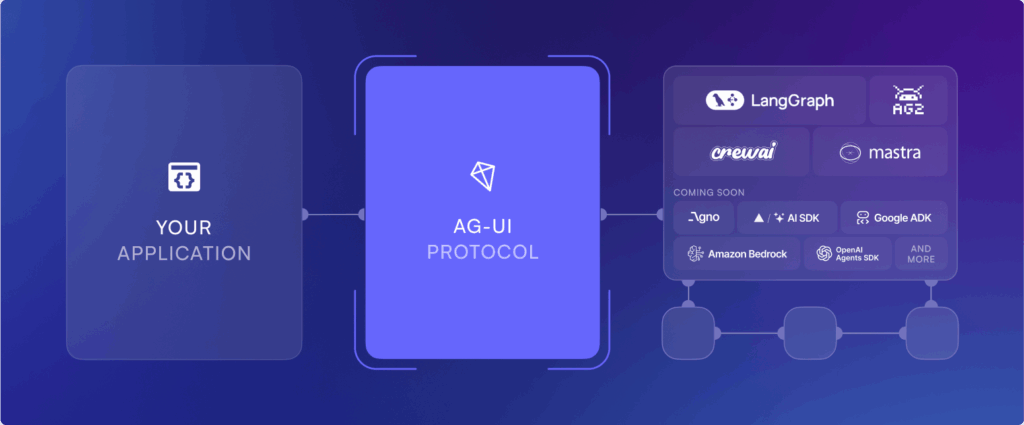

AG-UI (Agent–User Interaction Protocol) is a lightweight AI agent protocol created by CopilotKit. It defines a structured way for agents and frontend interfaces to exchange events, using streaming JSON events over standard HTTP, SSE, or WebSocket to connect AI agents to frontend apps.

It’s designed to keep agent–UI communication fast, clear, and easy to manage, whether you’re building a simple chatbot or a full-featured Copilot interface, and to reduce frontend complexity.

How It Fits Into the Agent Ecosystem

AG-UI is part of a larger stack that includes:

- MCP (Model Context Protocol): Connects agents to tools and APIs.

- A2A (Agent-to-Agent): Manages communication between multiple agents.

- AG-UI: Bridges the gap between the agent and the user interface.

Together, these protocols help build structured, scalable agent systems.

Why Developers Love AG-UI: Simple, Streamed, Structured

Event-Based Architecture

AG-UI is built around a small, clear set of JSON event types:

- Lifecycle events: Start and end of a task

- Text events: Streamed text content (e.g., chat)

- Tool call events: When an agent wants to use a tool

- Tool result events: When a tool sends back data

- State updates: Sync frontend state (like UI modes, active cards)
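The event categories above can be modeled as a tagged union. The sketch below is illustrative only: the type names and the `parseEvent` helper are hypothetical, and the field names follow the simplified JSON examples used in this article rather than the official SDK types.

```typescript
// Event shapes mirror this article's simplified examples (an assumption),
// not the official AG-UI SDK definitions.
type LifecycleEvent = { type: "lifecycle"; status: "started" | "completed" };
type TextDeltaEvent = { type: "text-delta"; value: string };
type ToolCallEvent = { type: "tool-call"; tool: string; input: string };
type ToolResultEvent = { type: "tool-result"; value: string };
type StateUpdateEvent = {
  type: "state-update";
  snapshot?: Record<string, unknown>;
  diff?: Record<string, unknown>;
};

type AgentEvent =
  | LifecycleEvent
  | TextDeltaEvent
  | ToolCallEvent
  | ToolResultEvent
  | StateUpdateEvent;

const KNOWN_TYPES = ["lifecycle", "text-delta", "tool-call", "tool-result", "state-update"];

// Parse one JSON-encoded event. Returns null for malformed JSON or an
// unrecognized `type`; individual fields are not validated in this sketch.
function parseEvent(raw: string): AgentEvent | null {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return null;
  }
  if (typeof data !== "object" || data === null) return null;
  const type = (data as { type?: unknown }).type;
  if (typeof type !== "string" || KNOWN_TYPES.indexOf(type) === -1) return null;
  return data as AgentEvent;
}
```

Because every event carries a `type` tag, a frontend can narrow the union with a plain `switch` and let the compiler flag unhandled cases.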

Works With Any Frontend

AG-UI works with most modern frontend frameworks, including React, Vue, Svelte, and Web Components. It supports both SSE and WebSocket, and ships with reference clients and connectors. To speed up integration, developers can start from the CopilotKit reference implementation, which includes default event handlers and client libraries for major frontend frameworks.
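When events arrive over SSE, the client first has to split the text stream into frames before it can parse any JSON. The following is a minimal sketch of that framing step, assuming the standard SSE wire format (frames separated by a blank line, payload lines prefixed with `data:`). The function name `extractSsePayloads` is hypothetical, and a production client would more likely rely on the browser's `EventSource` or a maintained library.

```typescript
// Minimal SSE framing sketch: frames end with a blank line,
// payload lines start with "data:".
interface SseParseResult {
  payloads: string[]; // complete data payloads, one per frame
  rest: string; // unfinished tail to prepend to the next chunk
}

function extractSsePayloads(buffer: string): SseParseResult {
  const frames = buffer.replace(/\r\n/g, "\n").split("\n\n");
  // The last piece has not been terminated by a blank line yet.
  const rest = frames.pop() ?? "";
  const payloads: string[] = [];
  for (const frame of frames) {
    const dataLines: string[] = [];
    for (const line of frame.split("\n")) {
      if (line.indexOf("data:") !== 0) continue; // skip comments and other fields
      const value = line.slice(5);
      // The SSE format allows one optional space after the colon.
      dataLines.push(value.charAt(0) === " " ? value.slice(1) : value);
    }
    if (dataLines.length > 0) payloads.push(dataLines.join("\n"));
  }
  return { payloads, rest };
}
```

Each returned payload is a JSON string that can be handed to the event parser; the `rest` value carries a partial frame over to the next network chunk.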

How AG-UI Works: Architecture & Event Design

You can think of AG-UI as a bridge that turns backend reasoning into real-time UI actions. It enables an event-driven LLM UI, where the interface reacts instantly to AI decisions and intent.

Here's how the layers work:

    ---
    title: "AG-UI Protocol Architecture"
    ---
    graph TD
      UI[Frontend UI]
      Listener[AG-UI Client]
      Protocol["AG-UI Protocol (JSON/SSE/WebSocket)"]
      Agent[Agent Runtime / LangGraph]
      UI --> Listener
      Listener --> Protocol
      Protocol --> Agent

Breakdown of Layers

- UI layer: Your interface—buttons, forms, components

- Client listener: Listens for AG-UI events, maps them to UI actions

- Protocol layer: Sends JSON messages, syncs state and stream

- Agent runtime: Where reasoning happens (e.g. LangGraph, CopilotKit)
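The client listener layer described above can be pictured as a small registry that routes each incoming event to the UI callbacks registered for its type. This is a sketch under assumptions: `AgentEventClient`, `on`, `dispatch`, and `runDemo` are hypothetical names for illustration and are not the API of the official AG-UI client.

```typescript
// Loose event shape: a `type` tag plus arbitrary fields (illustrative only).
type AgentEvent = { type: string; [key: string]: unknown };
type AgentEventHandler = (event: AgentEvent) => void;

class AgentEventClient {
  private handlers: { [type: string]: AgentEventHandler[] } = {};

  // Register a UI callback for one event type.
  on(type: string, handler: AgentEventHandler): void {
    const list = this.handlers[type] || [];
    list.push(handler);
    this.handlers[type] = list;
  }

  // Route an event to its handlers; returns how many handlers ran.
  dispatch(event: AgentEvent): number {
    const list = this.handlers[event.type] || [];
    for (const handler of list) handler(event);
    return list.length;
  }
}

// Usage: a plain object stands in for real UI components.
function runDemo(): { transcript: string; busy: boolean } {
  const ui = { transcript: "", busy: false };
  const client = new AgentEventClient();
  client.on("lifecycle", (event) => {
    ui.busy = event["status"] === "started";
  });
  client.on("text-delta", (event) => {
    const value = event["value"];
    if (typeof value === "string") ui.transcript += value;
  });
  client.dispatch({ type: "lifecycle", status: "started" });
  client.dispatch({ type: "text-delta", value: "Hello" });
  client.dispatch({ type: "lifecycle", status: "completed" });
  return ui;
}
```

Keeping the routing in one place means UI components only declare which event types they care about and never talk to the agent runtime directly.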

Core Events and How They Drive Your Frontend UI

| Type | Purpose | Example |
|---|---|---|
| lifecycle | Start/end of task | { "type": "lifecycle", "status": "started" } |
| text-delta | Streamed content | { "type": "text-delta", "value": "Hello" } |
| tool-call | Agent asks to use a tool | { "type": "tool-call", "tool": "weather", "input": "NYC" } |
| tool-result | Result from the tool | { "type": "tool-result", "value": "22°C" } |
| state-update | UI state sync | { "type": "state-update", "snapshot": { "mode": "edit" } } |
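One way to see how these event types drive a frontend is to fold a stream of them into a view model, reducer-style. The sketch below assumes the simplified event shapes from the table; `ViewModel`, `applyEvent`, and `foldEvents` are hypothetical names, and state-update events are passed through untouched to keep the example short.

```typescript
// Event shapes follow the table above (simplified; not the official SDK types).
type AgentEvent =
  | { type: "lifecycle"; status: "started" | "completed" }
  | { type: "text-delta"; value: string }
  | { type: "tool-call"; tool: string; input: string }
  | { type: "tool-result"; value: string }
  | { type: "state-update"; snapshot?: Record<string, unknown>; diff?: Record<string, unknown> };

interface ViewModel {
  running: boolean; // true between lifecycle "started" and "completed"
  text: string; // concatenated text deltas
  pendingTool: string | null; // tool currently awaiting a result
  toolResults: string[]; // results received so far
}

const initialView: ViewModel = { running: false, text: "", pendingTool: null, toolResults: [] };

// Pure reducer: returns a new view model for each event.
function applyEvent(view: ViewModel, event: AgentEvent): ViewModel {
  switch (event.type) {
    case "lifecycle":
      return { ...view, running: event.status === "started" };
    case "text-delta":
      return { ...view, text: view.text + event.value };
    case "tool-call":
      return { ...view, pendingTool: event.tool };
    case "tool-result":
      return { ...view, pendingTool: null, toolResults: view.toolResults.concat(event.value) };
    case "state-update":
      return view; // state sync is out of scope for this sketch
  }
}

function foldEvents(events: AgentEvent[]): ViewModel {
  return events.reduce(applyEvent, initialView);
}
```

Because `applyEvent` is pure, the same function can back a React reducer, a Svelte store, or a plain render loop.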

Example: A Copilot Event Stream

[

{ "type": "lifecycle", "status": "started" },

{ "type": "text-delta", "value": "Hi, I’m your assistant." },

{ "type": "tool-call", "tool": "weather", "input": "Beijing" },

{ "type": "tool-result", "value": "Sunny, 25°C" },

{ "type": "state-update", "diff": { "card": "weather-info" } },

{ "type": "lifecycle", "status": "completed" }

]

State Sync: Snapshot + Diffs

AG-UI syncs UI state with a snapshot-and-diff approach, similar in spirit to React state updates or JSON Patch:

- Send a full snapshot at the start

- Then send only diffs to keep things fast and interactive

{ "type": "state-update", "diff": { "inputDisabled": true } }

This keeps the UI responsive without needing full re-renders.
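The snapshot-then-diff flow can be sketched as a small merge function. This example assumes a diff is a shallow key/value merge, as in the JSON shown in this article; the official specification may define deltas differently (for instance as JSON Patch operations), and the names `UiState`, `StateUpdate`, and `applyStateUpdate` are hypothetical.

```typescript
type UiState = Record<string, unknown>;
type StateUpdate = { type: "state-update"; snapshot?: UiState; diff?: UiState };

// A snapshot replaces the whole state; a diff is merged key by key.
// Assumption: diffs are shallow merges, matching this article's examples.
function applyStateUpdate(current: UiState, update: StateUpdate): UiState {
  const base: UiState = update.snapshot !== undefined ? { ...update.snapshot } : { ...current };
  return update.diff !== undefined ? { ...base, ...update.diff } : base;
}
```

The function returns a new object each time, so frameworks that detect changes by reference see every update without the caller mutating the previous state.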

Real-World Use Cases: From Copilots to Multi-Agent UIs

Embedded Copilot UIs

AG-UI is well suited to in-page copilots (similar in style to Notion AI or GitHub Copilot). It updates components directly from agent events, with little glue code.

Example: On a CRM page, the user says “Add client A.”

The agent returns structured data → AG-UI triggers form autofill.

AG-UI acts as an AI copilot interaction standard, allowing frontends to reflect agent behavior without coupling to business logic.
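The CRM autofill example might look like the sketch below on the frontend side. Everything here is an assumption for illustration: the `ClientForm` fields, the `autofillClientForm` helper, and the idea that the agent's structured data arrives as a JSON string are hypothetical and not part of AG-UI itself.

```typescript
// Hypothetical form model for the "Add client" page.
interface ClientForm {
  name: string;
  email: string;
  phone: string;
}

const FORM_FIELDS: Array<keyof ClientForm> = ["name", "email", "phone"];

// Copy only known string fields from the agent's structured payload into the form.
// Unknown keys and non-string values are ignored; bad JSON leaves the form as is.
function autofillClientForm(form: ClientForm, payload: string): ClientForm {
  let data: unknown;
  try {
    data = JSON.parse(payload);
  } catch {
    return form;
  }
  if (typeof data !== "object" || data === null) return form;
  const source = data as Record<string, unknown>;
  const next: ClientForm = { ...form };
  for (const field of FORM_FIELDS) {
    const value = source[field];
    if (typeof value === "string") next[field] = value;
  }
  return next;
}
```

The frontend only whitelists and copies fields; deciding what "Add client A" means stays on the agent side, which is the decoupling described above.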

Multi-Agent Collaborative Systems

With LangGraph UI integration, AG-UI enables structured multi-agent visual workflows. Use it with LangGraph or CrewAI to:

- Handle complex UI state

- Display long task progress

- Suggest actions or next steps

Example: A legal document Copilot that shows questions and a summary block as the agent reasons.

Protocol-First UI (No AI Logic in UI Code)

AG-UI lets you build "AI-powered UIs" without wiring business logic into the frontend.

Just listen to AG-UI events and respond.

Example: A rich Svelte + Tailwind app can respond to AI reasoning without needing extra state management logic.

AG-UI vs MCP vs A2A: Who Does What?

| Protocol | Role | UI-Focused? |
|---|---|---|
| AG-UI | Agent ↔ UI | ✅ Yes |
| MCP | Agent ↔ Tools | ❌ No |
| A2A | Agent ↔ Agent | ❌ No |

Together, they cover all parts of an agent system:

    ---
    title: "Agent Protocol Stack: AG-UI + MCP + A2A"
    ---
    graph TD
      U[User]
      UI[Frontend UI]
      AGUI[AG-UI Protocol]
      AgentA[Agent A]
      AgentB[Agent B]
      MCP["Tool Layer (MCP)"]
      TOOLS[External Tools]
      U --> UI
      UI --> AGUI
      AGUI --> AgentA
      AgentA --> AgentB
      AgentA --> MCP
      AgentB --> MCP
      MCP --> TOOLS

Beyond AG-UI: Building Full-Stack Agent Architectures

AG-UI is one part of a modern agent system. For full-stack workflows, consider combining AG-UI with tools like:

- MCP for agent–tool execution

- A2A for agent-to-agent messaging

- LangGraph for structured reasoning paths

- Dify for workflow and RAG integration

Together, these tools form a solid base for building intelligent, modular, and scalable Copilot apps.

Learn More About AG-UI: Docs, Code, and Examples

| Title | Link |
|---|---|
| GitHub Source | github.com/ag-ui-protocol/ag-ui |
| Official Docs | docs.ag-ui.com |
| Intro Article | DEV.to – Introducing AG-UI |
| Product Launch | ProductHunt: AG-UI |
| Creator’s Blog | Medium – AG-UI is the Future |
| Live Demo | agui-demo.vercel.app |

Frequently Asked Questions (FAQ)

1. What is the AG-UI Protocol?

AG-UI is an open-source, event-stream protocol that connects AI agents to any frontend via JSON-encoded events in real time.

2. Is the AG-UI Protocol tied to a specific framework?

No—AG-UI is framework-agnostic; React, Vue, Svelte or plain HTML/JS only need an event listener.

3. Do I need a dedicated backend to start?

Not necessarily; agent runtimes such as CopilotKit and LangGraph offer AG-UI integrations, so you can start from an existing backend instead of building one.

4. How is AG-UI different from REST or raw WebSockets?

It’s more than transport: it defines intent-rich event types (such as tool-call and state-update) so the UI can react to agent reasoning as it streams.

5. Which transport layers does AG-UI support?

The reference implementation uses Server-Sent Events (SSE), but the spec also permits WebSocket or HTTP/2 streams.

6. Is AG-UI open-source and what’s the license?

Yes—both the spec and SDK are MIT-licensed on GitHub, free for commercial use.

7. Can AG-UI work inside multi-agent systems?

Yes—AG-UI handles agent–UI events, while agent-to-agent coordination can use A2A protocols without conflict.

8. How do I add AG-UI to a React app fast?

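Install the AG-UI client packages (published under the @ag-ui scope on npm, for example @ag-ui/client), or use CopilotKit's React components, then subscribe to the streamed events. Confirm the current package and component names in the official docs before installing.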
