In noisy industrial environments, experienced engineers used to diagnose faults by listening for "squeaks," "clanks," or unusual hums. Today, AI sound recognition offers a more scalable and consistent approach to equipment health monitoring: it detects these abnormal sounds in real time without relying on human ears.

Enter AI: not a replacement for experienced engineers, but a "doctor" for machines.

It's time for algorithms to "understand" what the equipment is saying!

Why Use AI Sound Recognition for Predictive Equipment Health Monitoring?

Traditional predictive maintenance relies on temperature and vibration sensors, but sound monitoring offers unique advantages:

✅ 1. Non-Invasive Installation

There is no need to modify the equipment structure or embed sensors inside it: just place a microphone near the casing or workstation to capture the key sound signals.

✅ 2. Detects More Details

Many early equipment failures, such as loose bearings or impeller imbalance, first show up as subtle sound anomalies, which makes them ideal targets for anomaly detection with AI and machine learning models.

✅ 3. Low Cost, Quick Deployment

A sound capture and AI recognition system often requires only affordable sensors and an edge gateway or industrial PC to start upgrading maintenance.

How Does Sound Recognition Work?

Here's a simple flowchart to explain the "workflow" of equipment sound monitoring:

```mermaid
---
title: "AI Sound Recognition Maintenance Workflow"
---
graph LR
    A[Equipment Operating Sound] --> B["Sensor (Microphone/Accelerometer)"]
    B --> C[Local Capture System]
    C --> D["Preprocessing (Noise Reduction/Clipping/Gain)"]
    D --> E["Spectral Extraction (Mel Spectrogram/MFCC)"]
    E --> F["AI Model Judgment (CNN/Transformer)"]
    F --> G["Output: OK / Anomaly / Anomaly Type"]
    G --> H["Local Display + Report to Platform"]
```

How Does AI Analyze Machine Sounds for Fault Detection?

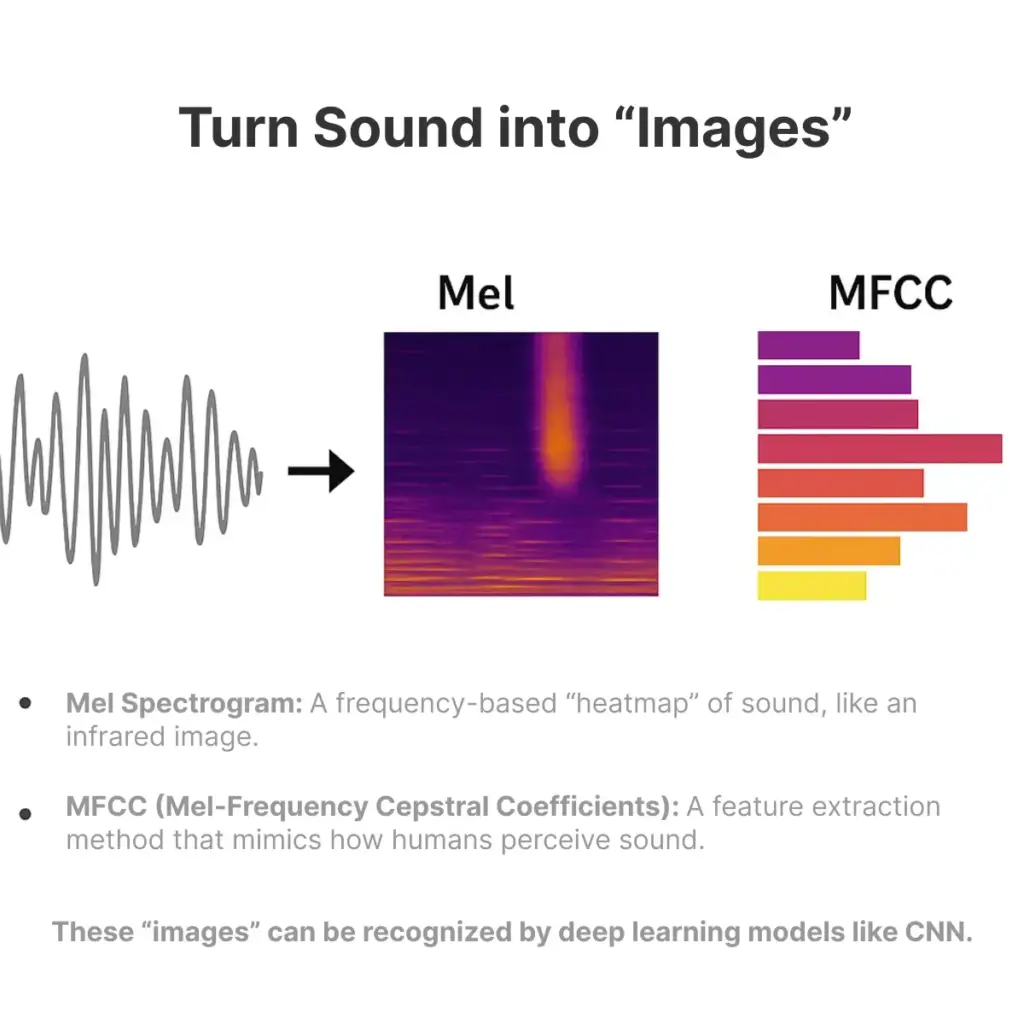

Sound is a "waveform" to humans, but to AI, it's a series of "images."

1. Turning Sound into "Pictures": Mel Spectrogram / MFCC

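• A Mel spectrogram works like a "heat map" of the sound: it shows how energy is distributed across frequency bands (on the perceptually motivated mel scale) over time, much like an infrared image shows heat.

• MFCCs (Mel-Frequency Cepstral Coefficients) compress each frame of the mel spectrum into a small set of coefficients describing its spectral shape, mimicking how human hearing perceives timbre.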

• These "images" can be used by deep models like CNNs for recognition.

AI learns to distinguish "healthy breathing" from "abnormal moans" by recognizing these sound "snapshots."
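As a rough illustration of the "sound to image" step, the NumPy sketch below computes a log-mel spectrogram and MFCCs from scratch. The parameters (16 kHz sample rate, 1024-point FFT, hop of 512 samples, 64 mel bands, 13 coefficients) and the function names are assumptions chosen for illustration, not values from the system described here; in practice a library such as librosa or torchaudio is usually used instead.

```python
import numpy as np

def hz_to_mel(f):
    # HTK-style mel scale: mel = 2595 * log10(1 + f / 700)
    return 2595.0 * np.log10(1.0 + np.asarray(f, dtype=float) / 700.0)

def mel_to_hz(m):
    # Inverse of hz_to_mel
    return 700.0 * (10.0 ** (np.asarray(m, dtype=float) / 2595.0) - 1.0)

def mel_filterbank(sr, n_fft, n_mels):
    """Triangular filters evenly spaced on the mel scale, shape (n_mels, n_fft // 2 + 1)."""
    fft_freqs = np.linspace(0.0, sr / 2.0, n_fft // 2 + 1)
    hz_points = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2))
    fb = np.zeros((n_mels, fft_freqs.size))
    for i in range(n_mels):
        left, center, right = hz_points[i], hz_points[i + 1], hz_points[i + 2]
        rising = (fft_freqs - left) / (center - left)
        falling = (right - fft_freqs) / (right - center)
        fb[i] = np.maximum(0.0, np.minimum(rising, falling))
    return fb

def log_mel_spectrogram(signal, sr=16000, n_fft=1024, hop=512, n_mels=64):
    """Windowed frames -> power spectrum -> mel filters -> dB. Returns (n_mels, n_frames)."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack([signal[t * hop : t * hop + n_fft] * window for t in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2       # (n_frames, n_fft // 2 + 1)
    mel = power @ mel_filterbank(sr, n_fft, n_mels).T      # (n_frames, n_mels)
    return 10.0 * np.log10(mel + 1e-10).T                  # frequency x time "image"

def mfcc(log_mel_db, n_mfcc=13):
    """Orthonormal DCT-II along the mel axis, keeping the first n_mfcc coefficients."""
    n_mels = log_mel_db.shape[0]
    k = np.arange(n_mfcc)[:, None]
    n = np.arange(n_mels)[None, :]
    dct = np.sqrt(2.0 / n_mels) * np.cos(np.pi * k * (2 * n + 1) / (2 * n_mels))
    dct[0] /= np.sqrt(2.0)                                 # orthonormal scaling for k = 0
    return dct @ log_mel_db                                # (n_mfcc, n_frames)

# Usage: 1 s of a synthetic 120 Hz hum (stand-in for a real recording)
sr = 16000
t = np.arange(sr) / sr
hum = 0.5 * np.sin(2 * np.pi * 120 * t)
spec = log_mel_spectrogram(hum, sr=sr)
coeffs = mfcc(spec)
print(spec.shape, coeffs.shape)   # (64, 30) (13, 30)
```

Each column of `spec` is one time frame, so a 1 s clip becomes a 64 × 30 "image" that a CNN can consume, while the MFCCs summarize each frame's mel spectrum in 13 compact coefficients.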

2. Choosing AI Models: CNN, Transformer, or RNN?

These AI models are commonly used in machine listening and sound anomaly detection systems across industrial automation.

| Model Type | Strengths | Suitable Scenarios |
|---|---|---|
| CNN (Convolutional Neural Network) | Efficient, simple structure, fast training | Lightweight deployment, edge inference |
| Transformer (attention-based) | Captures long-range temporal dependencies; typically needs more data and compute | Large equipment, long recordings analyzed across many frequency bands |
| LSTM/GRU (Recurrent Neural Network) | Models sequences step by step; suited to continuous streaming input | Slowly developing anomalies during motor operation |

Recommended Strategy: start with a CNN as the initial model, then consider a Transformer if higher accuracy is needed and enough data and compute are available.

Example: Fan "Clanking," AI Alerts Immediately!

Scenario:

A high-speed fan on a production line occasionally makes a faint "clanking" sound that is intermittent and hard to reproduce during manual inspection.

Implementation Steps:

- Fix a microphone to the fan casing.

- Collect 100 hours of sound samples and manually label them as "normal" or "abnormal."

- Train a model using MFCC features as input to a CNN.

- Deploy the model locally on a PC, with a recognition time under 100 ms.

- Run real-time monitoring with misjudgment feedback and a retraining mechanism.
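The real-time monitoring step can be sketched as a sliding window over the incoming audio stream, with each window classified and timed against the 100 ms target. This is a minimal standard-library sketch under stated assumptions: `StreamingMonitor`, the window and hop sizes, and the `click_detector` stand-in for the trained MFCC+CNN model are all hypothetical.

```python
import time
from collections import deque

class StreamingMonitor:
    """Hypothetical helper: cuts a continuous sample stream into overlapping windows,
    classifies each window, and times every inference against a latency budget."""

    def __init__(self, classify, window=16000, hop=8000, budget_ms=100.0):
        self.classify = classify            # callable: list of samples -> "OK" or "NG"
        self.window = window                # samples per window (1 s at 16 kHz, assumed)
        self.hop = hop                      # samples between window starts (50% overlap, assumed)
        self.budget_ms = budget_ms          # the <100 ms recognition target from the example
        self.buffer = deque(maxlen=window)  # keeps only the most recent `window` samples
        self.pending = 0                    # samples received since the last scored window
        self.windows_scored = 0

    def push(self, chunk):
        """Feed one chunk from the capture device.
        Returns (window_index, label, latency_ms, over_budget) for each window scored."""
        results = []
        for sample in chunk:
            self.buffer.append(sample)
            self.pending += 1
            if len(self.buffer) < self.window or self.pending < self.hop:
                continue
            self.pending = 0
            start = time.perf_counter()
            label = self.classify(list(self.buffer))
            latency_ms = (time.perf_counter() - start) * 1000.0
            results.append((self.windows_scored, label, latency_ms, latency_ms > self.budget_ms))
            self.windows_scored += 1
        return results

def click_detector(window):
    # Toy threshold rule standing in for the trained MFCC+CNN model
    return "NG" if max(window) > 0.5 else "OK"

monitor = StreamingMonitor(click_detector, window=4, hop=2)
stream = [0.0] * 3 + [1.0] + [0.0] * 8       # one short "clank" at sample 3
for chunk in (stream[:5], stream[5:]):       # chunk boundaries do not affect the windows
    for idx, label, ms, over_budget in monitor.push(chunk):
        print(idx, label)                    # 0 NG, 1 NG, 2 OK, 3 OK, 4 OK (one per line)
```

With 50% overlap, any clank shorter than the hop length falls entirely inside at least one window, even if it straddles a window boundary. A production version would typically buffer with NumPy arrays rather than appending sample by sample in Python.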

Results:

• Recognition accuracy: 95.6%

• Detected a loose fan bracket 4 days early, preventing spindle damage

• Reduced manual inspection time by over 90%

Local Deployment vs. Cloud Deployment: How to Choose?

The deployment method of AI models directly affects data security, recognition speed, and system scalability. Here's a comparison:

| Comparison | Local Deployment (Recommended) | Cloud Deployment |
|---|---|---|
| Data Security | ✅ Data stored locally, nothing uploaded | ⚠ Data must be uploaded; privacy risk |
| Recognition Latency | ✅ Millisecond-level response | ❌ Unstable networks can cause delays |
| Training Method | On-site training possible (requires a high-performance PC) | Access to powerful cloud computing resources |
| Cost | Higher upfront hardware cost | High ongoing cloud service fees |
| Network Dependency | None | Depends heavily on network quality |

For industrial automation use cases, especially those requiring fast and secure AI sound detection, we strongly recommend local private deployment to ensure on-site equipment health monitoring and meet data security standards.

Misjudgment Feedback Loop: Building AI's Self-Evolution Cycle

AI recognition models aren't static; environmental noise and equipment model differences can cause misjudgments. A mature system must have "self-correction ability."

Here's an effective "label-inference-misjudgment feedback-retraining" loop mechanism we've validated in multiple projects:

```mermaid
---
title: "Label / Misjudgment Feedback / Retraining Loop Flowchart"
---
flowchart TD
    %% Labeling + Training Stage
    A1["Raw Data Collection (Sound / Vibration)"]
    A2["Upload to Platform and Preprocess"]
    A3["Manual Labeling OK / NG"]
    A4["Build Training Set"]
    A5["Initiate Model Training"]
    A6["Training Complete and Deploy Model"]

    %% Inference Recognition Stage (Encapsulated)
    subgraph INF["Inference Recognition Stage"]
        B1["User Uploads Test Data"]
        B2["Execute Model Inference"]
        B3["Inference Result OK / NG + Confidence"]
        B4["User Review Result"]
    end

    %% Misjudgment Feedback Path
    C1["Misjudged Sample Returned (Misjudgment Feedback)"]
    C2["Re-listen + Re-label Misjudged Samples"]
    C3["Add Labeled Data to Training Set"]
    C4["Trigger Incremental Retraining"]

    %% Main Process Flow
    A1 --> A2 --> A3 --> A4 --> A5 --> A6
    A6 --> B1 --> B2 --> B3 --> B4

    %% Judgment Path
    B4 -->|Confirmed Correct| B1
    B4 -->|Confirmed Misjudgment| C1 --> C2 --> C3 --> C4 --> A5
```

✅ Core Mechanism Explanation:

• All inference results come with confidence scores.

• Confidence thresholds can be set so that uncertain results are flagged as possible misjudgments or routed to "manual review."

• Administrators can merge misjudged samples into the training set with one click.

• The system triggers retraining periodically or when a configured threshold is reached (for example, a set number of new feedback samples).

• Model versions are automatically archived, supporting switching and rollback.

In short: Every time it "mishears," the system gets smarter!
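The core mechanisms listed above (confidence routing, merging misjudged samples, threshold-triggered retraining, and versioned rollback) can be sketched in a few lines of standard-library Python. Everything here is a hypothetical illustration rather than the system's actual code: the class name, the 0.80 review threshold, the retrain-after-N rule, and the `train` callable standing in for the real training engine.

```python
from dataclasses import dataclass, field

@dataclass
class FeedbackLoop:
    """Hypothetical sketch of the label / feedback / retrain loop; defaults are illustrative."""
    review_below: float = 0.80      # results less confident than this go to manual review
    retrain_after: int = 50         # retrain once this many corrected samples are queued
    training_set: list = field(default_factory=list)
    pending: list = field(default_factory=list)     # corrected samples not yet trained on
    versions: list = field(default_factory=list)    # archived model versions, oldest first
    active: int = -1                                # index of the model currently in use

    def route(self, label, confidence):
        """Turn a raw (label, confidence) inference result into OK / NG / REVIEW."""
        return label if confidence >= self.review_below else "REVIEW"

    def report_misjudgment(self, sample_id, corrected_label):
        """A reviewer re-listened and re-labeled a sample; queue it for the next training run.
        Returns True when enough samples have accumulated to trigger retraining."""
        self.pending.append((sample_id, corrected_label))
        return len(self.pending) >= self.retrain_after

    def retrain(self, train_fn):
        """Merge queued samples, train a new model, archive it, and make it active."""
        self.training_set.extend(self.pending)
        self.pending.clear()
        self.versions.append(train_fn(self.training_set))
        self.active = len(self.versions) - 1
        return self.active

    def rollback(self):
        """Switch back to the previous archived version, if there is one."""
        if self.active > 0:
            self.active -= 1
        return self.active

# Usage: the train callable is a stand-in that just describes its input
def train(data):
    return f"model trained on {len(data)} samples"

loop = FeedbackLoop(review_below=0.8, retrain_after=2,
                    training_set=[("s1", "OK"), ("s2", "NG")])
loop.retrain(train)                                   # version 0
print(loop.route("NG", 0.95), loop.route("OK", 0.55))  # NG REVIEW
loop.report_misjudgment("s3", "NG")                   # False: only 1 queued
if loop.report_misjudgment("s4", "OK"):               # True: threshold of 2 reached
    loop.retrain(train)                               # version 1
print(loop.versions[loop.active])                     # model trained on 4 samples
loop.rollback()
print(loop.versions[loop.active])                     # model trained on 2 samples
```

Keeping every trained model in `versions` is what makes rollback cheap: switching versions only moves the `active` index.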

AI System Model Training and Inference Sequence Diagram

```mermaid
---
title: "AI System Model Training and Inference Sequence Diagram"
---
sequenceDiagram
    participant Labeler
    participant Administrator
    participant Tester
    participant WF as Web Frontend
    participant API as Backend API
    participant TE as Training Engine
    participant MS as Model Service
    participant DB as Database

    Labeler->>WF: Upload Sound Data
    WF->>API: Save Raw File
    API->>DB: Store Metadata
    Labeler->>WF: Start Labeling Interface
    WF->>DB: Fetch Audio & Display Waveform
    Labeler->>WF: Tag (OK/NG)
    WF->>DB: Save Labeling Results
    Administrator->>WF: Configure Model Type and Parameters
    WF->>API: Submit Training Request
    API->>TE: Call Training Module (Including Data Preprocessing)
    TE-->>DB: Fetch Data and Labels
    TE-->>TE: Execute Training, Log in Real-Time
    TE->>API: Return Training Complete
    TE->>MS: Save as TorchScript / ONNX
    Tester->>WF: Upload New Sample
    WF->>MS: Call Inference API
    MS-->>MS: Load Current Model + Run Inference
    MS->>WF: Return Result OK / NG
    WF->>DB: Save Inference Record
```

• Covers 8 participants: 3 user roles (Labeler, Administrator, Tester) and 5 system components.

• Clearly marks three flows:

  • Data upload and labeling → results stored in the database.

  • The administrator configures parameters and starts training → the training engine reads the data and returns the result.

  • A tester uploads a sample for inference → the model service runs inference and returns the result → the record is stored in the database.

Real-World Use Cases of AI Sound Recognition

AI sound recognition systems are not only suitable for automotive parts but have also been successfully applied in various industrial fields:

| Industry Equipment | Sound Issue | Recognition Effect | Cost Return |
|---|---|---|---|
| Pumps | Idle/Cavitation/Noise | OK/NG Accuracy > 94% | Saves 120,000 RMB in maintenance costs annually |
| Air Compressors | Valve Knocking, Leakage | Anomaly Recognition Rate Tripled | Reduces Downtime by 30% |
| Fans | Slight Noise Before Bearing Failure | Alerts 4-7 Days Early | Reduces Main Shaft Replacement Frequency |
| Motors | Stator Imbalance, Overheat Whistle | Fault Judgment Accuracy 92% | Replaces Manual Inspection, Saves Labor |

Recommended System Configuration (Local Deployment)

If you want to independently deploy a sound recognition system on-site at a factory, consider the following hardware and software configuration:

| Category | Recommended Configuration |
|---|---|
| Industrial PC | Intel i5/i7 + 16 GB RAM + 512 GB SSD |
| Sound Capture | MEMS Microphone / Accelerometer + USB Capture Card |
| Software Architecture | Vue3 + FastAPI + PyTorch + PostgreSQL |
| Inference Speed | Single-Sample Inference < 200 ms |
| Storage Capacity | Can Accommodate 100,000 Labeled Samples + Multiple Model Versions |
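To sanity-check the storage row, uncompressed PCM size is simply clips × seconds × sample rate × bytes per sample × channels. The sketch below assumes 10-second clips recorded at 16 kHz, 16-bit mono; those figures and the `storage_gb` helper are assumptions for illustration, not part of the configuration above.

```python
def storage_gb(n_clips, seconds_per_clip, sample_rate_hz=16_000, bytes_per_sample=2, channels=1):
    """Uncompressed PCM size in GB (10^9 bytes)."""
    total_bytes = n_clips * seconds_per_clip * sample_rate_hz * bytes_per_sample * channels
    return total_bytes / 1e9

# Assumed: 100,000 labeled clips of 10 s each, 16 kHz, 16-bit mono
print(storage_gb(100_000, 10))      # 32.0
# Assumed: one microphone recording continuously for 24 hours
print(storage_gb(1, 24 * 3600))     # 2.7648
```

Under these assumptions, 100,000 labeled clips need about 32 GB and one continuously recording microphone produces about 2.8 GB per day, so a 512 GB SSD comfortably holds the labeled set and several model versions, while continuous raw capture from many sensors may need a retention policy.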

This system supports "offline training + online inference" mode, completing automatic recognition and continuous learning without relying on the public network.

Project Implementation Flow Suggestions

Taking an AI sound recognition system from concept to deployment is not a single leap; it works best as a staged "quick validation → small-batch pilot → full deployment" strategy:

Three-Phase Implementation Roadmap:

```mermaid
---
title: "AI Sound Recognition Project Implementation Flowchart"
---
graph TD
    A[Project Kickoff] --> B[Data Collection and Manual Labeling]
    B --> C[Prototype System Development]
    C --> D[AI Model Training and Validation]
    D --> E[Small-Scale Pilot Deployment]
    E --> F[Misjudgment Feedback Loop Optimization]
    F --> G["System Productization + Multi-Line Expansion"]
    G --> H[Continuous Monitoring and Retraining]
```

Model Fine-Tuning and Data Augmentation Suggestions

✅ How to Improve Model Performance?

- Fine-Tuning Strategy:

• Start from a pre-trained CNN (e.g., ResNet), freeze the lower layers, and add a custom classification head.

• Use layer-wise learning rates: lower for the base layers, higher for the head.

- What to Do When Anomalous Samples Are Scarce?

• Data Augmentation: Add noise, change speed, simulate anomalies (e.g., knocks, friction).

• SMOTE Resampling: Generate synthetic anomalous samples by interpolating between existing ones in feature space, addressing class imbalance.

- How to Generalize Across Heterogeneous Devices?

• Feed the device ID (or device type) to the model as an additional input.

• Employ multi-task learning mechanisms to enhance model "adaptability."
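The augmentation and resampling ideas above can be sketched with NumPy: white noise mixed in at a target SNR, a naive speed change by resampling, a synthetic decaying "knock" injected into a normal clip, and a basic SMOTE that interpolates between anomalous feature vectors. All function names, the knock's frequency, decay, and amplitude, and the random "NG features" are hypothetical; SMOTE is applied here to feature vectors (for example, per-clip mean MFCCs) rather than raw waveforms.

```python
import numpy as np

rng = np.random.default_rng(0)   # fixed seed so augmentations are reproducible

def add_noise(x, snr_db):
    """Mix in white noise at a target signal-to-noise ratio (dB)."""
    noise_power = np.mean(x ** 2) / (10 ** (snr_db / 10))
    return x + rng.normal(0.0, np.sqrt(noise_power), size=x.shape)

def change_speed(x, factor):
    """Naive speed change by linear-interpolation resampling (also shifts pitch)."""
    n_out = int(round(len(x) / factor))
    return np.interp(np.linspace(0, len(x) - 1, n_out), np.arange(len(x)), x)

def inject_knock(x, sr, at_s, amplitude=0.5, freq_hz=3000.0, decay_s=0.01):
    """Simulated anomaly: an exponentially decaying sine burst starting at `at_s` seconds."""
    y = x.copy()
    start = int(at_s * sr)
    t = np.arange(len(x) - start) / sr
    y[start:] += amplitude * np.exp(-t / decay_s) * np.sin(2 * np.pi * freq_hz * t)
    return y

def smote(minority, n_new, k=5):
    """SMOTE: interpolate between a minority sample and one of its k nearest minority neighbours."""
    minority = np.asarray(minority, dtype=float)
    k = min(k, len(minority) - 1)
    dists = np.linalg.norm(minority[:, None, :] - minority[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)                  # a sample is not its own neighbour
    neighbours = np.argsort(dists, axis=1)[:, :k]
    synthetic = np.empty((n_new, minority.shape[1]))
    for j in range(n_new):
        i = rng.integers(len(minority))
        nb = minority[rng.choice(neighbours[i])]
        synthetic[j] = minority[i] + rng.random() * (nb - minority[i])
    return synthetic

# Usage on a synthetic "normal" clip (stand-in for a real recording)
sr = 16000
t = np.arange(sr) / sr
normal = 0.3 * np.sin(2 * np.pi * 120 * t)
noisy = add_noise(normal, snr_db=10)
faster = change_speed(normal, 1.1)                   # 10% faster, so fewer samples
knocked = inject_knock(normal, sr, at_s=0.5)         # synthetic NG example
ng_features = rng.normal(size=(8, 13))               # fake data: 8 NG clips x 13 mean MFCCs
print(smote(ng_features, n_new=20).shape)            # (20, 13)
```

Augmented and synthetic samples should go into the training split only, so that validation accuracy still reflects real recordings.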

Deployment Recommendations and Team Role Assignment

Recommended Team Configuration:

| Role | Responsibilities |
|---|---|
| Product Manager | Define business scenarios, determine anomaly types and handling mechanisms |
| AI Engineer | Model design and training optimization |
| Backend Engineer | Build inference services, schedule tasks, manage data |
| Frontend Engineer | Implement visualization interface and labeling tools |
| Equipment/Quality Engineer | Participate in misjudgment confirmation and anomalous sound sample labeling |

Recommended System Deployment Plans:

• Single Device Version (e.g., Local Industrial PC): Suitable for local pilot or production line testing.

• LAN Deployment (Edge Server): Supports multi-device data aggregation and unified recognition.

• Private Cloud Deployment: Provides centralized management, remote access, and scheduled training capabilities.

Frequently Asked Questions (FAQ)

Q1: Is the system suitable for complex noise environments?

A: Yes. With noise reduction, suitable feature extraction, and training data recorded under realistic background noise, AI models can effectively distinguish target sounds from background noise.

Q2: Can a model be trained with very few anomalous samples?

A: Yes. Combine plentiful normal samples with augmented anomalies and oversampled anomalous samples (e.g., SMOTE), then tune the decision threshold based on the model's confidence scores.

Q3: Can it recognize multiple anomaly types?

A: Yes. The system supports multi-class classification models and can also combine multiple models.
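For Q2, one common way to set a confidence-adjusted threshold when NG samples are scarce is to calibrate it on held-out normal recordings only: choose the threshold so that at most a target fraction of normal clips would be flagged. The sketch assumes the model outputs an NG score per clip (for example, its NG probability); the function names and the 1% false-alarm target are hypothetical.

```python
def calibrate_threshold(normal_scores, max_false_alarm_rate=0.01):
    """Smallest threshold that flags at most `max_false_alarm_rate` of held-out normal clips,
    using the decision rule "NG if score > threshold"."""
    scores = sorted(normal_scores)
    if not scores:
        raise ValueError("need at least one normal score")
    n = len(scores)
    # Normal clips allowed above the threshold; the epsilon guards float error (e.g. 0.29 * 100).
    allowed = min(int(max_false_alarm_rate * n + 1e-9), n - 1)
    # The (n - allowed)-th smallest score leaves at most `allowed` scores strictly above it.
    return scores[n - allowed - 1]

def decide(ng_score, threshold):
    return "NG" if ng_score > threshold else "OK"

# Usage with stand-in NG scores for 100 normal validation clips: 0.00, 0.01, ..., 0.99
normal_val = [i / 100 for i in range(100)]
thr = calibrate_threshold(normal_val, max_false_alarm_rate=0.01)
print(thr)                                   # 0.98
print(decide(0.99, thr), decide(0.5, thr))   # NG OK
```

The trade-off is explicit: a lower false-alarm target raises the threshold and may let subtle faults through, so the target is worth revisiting as misjudgment feedback accumulates.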

Conclusion: Sound Is the Most Direct "Life Signal" of Industrial Equipment

When AI starts to "understand" the sounds of equipment, it becomes your most loyal inspector, the most sensitive alarm, and the most reliable guardian.

By combining sound perception, AI recognition, and feedback loop optimization, we've built a truly deployable and continuously optimizable equipment health detection system. It not only saves labor costs but also gives equipment an "intelligent check-up" capability.

Start AI Sound Inspection with One Device

You can quickly start a pilot project with these three steps:

- Choose a typical device (e.g., fan, motor, pump).

- Collect 1-2 weeks of its sound samples.

- Build a minimum functional platform: upload + label + inference.

Effective pilot → small-batch rollout → system integration with MES / maintenance platforms, gradually building your "Industrial Sound AI Network."
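A minimum functional platform (upload + label + inference) can start as a single table. The sketch below uses Python's built-in `sqlite3` as a lightweight stand-in for the PostgreSQL database in the recommended stack; the schema, function names, and file paths are hypothetical, and in the recommended stack these functions would sit behind the FastAPI backend.

```python
import sqlite3

def open_store(path=":memory:"):
    """Hypothetical pilot schema: one row per uploaded clip with its label and latest inference."""
    db = sqlite3.connect(path)
    db.execute("""CREATE TABLE IF NOT EXISTS clips (
        id INTEGER PRIMARY KEY,
        device TEXT NOT NULL,
        file_path TEXT NOT NULL,
        label TEXT CHECK (label IN ('OK', 'NG')),   -- NULL until a person labels it
        predicted TEXT,
        confidence REAL)""")
    return db

def upload(db, device, file_path):
    cur = db.execute("INSERT INTO clips (device, file_path) VALUES (?, ?)", (device, file_path))
    db.commit()
    return cur.lastrowid

def set_label(db, clip_id, label):
    db.execute("UPDATE clips SET label = ? WHERE id = ?", (label, clip_id))
    db.commit()

def record_inference(db, clip_id, predicted, confidence):
    db.execute("UPDATE clips SET predicted = ?, confidence = ? WHERE id = ?",
               (predicted, confidence, clip_id))
    db.commit()

def misjudged(db):
    """Clips whose manual label disagrees with the model: candidates for retraining."""
    return db.execute("SELECT id, file_path, label, predicted FROM clips "
                      "WHERE label IS NOT NULL AND predicted IS NOT NULL "
                      "AND label <> predicted ORDER BY id").fetchall()

# Usage
db = open_store()
a = upload(db, "fan-01", "clips/fan-01/0001.wav")
b = upload(db, "fan-01", "clips/fan-01/0002.wav")
set_label(db, a, "OK")
set_label(db, b, "NG")
record_inference(db, a, "OK", 0.97)
record_inference(db, b, "OK", 0.62)      # the model missed the NG clip
print(misjudged(db))                     # [(2, 'clips/fan-01/0002.wav', 'NG', 'OK')]
```

The `misjudged` query is the seed of the feedback loop: those clips are exactly the ones to re-listen to, re-label, and merge into the next training set.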

If you're interested in quickly building an AI sound recognition solution, feel free to leave a comment, message, or contact us for a complete automated equipment monitoring solution and deployment demo.