In an era where data and intelligence fuel cutting-edge innovation, edge computing plays a central role in powering real-time AI applications. Traditional cloud architectures have driven significant advances in data processing and model training over the past decade, yet the exponential growth of devices, sensors, and complex use cases has exposed the limits of centralized computing. High latency, bandwidth constraints, and privacy concerns all restrict the potential of truly real-time AI systems.

Enter edge computing: the practice of placing data processing resources closer to endpoints such as sensors, cameras, and IoT devices. By running workloads on edge compute nodes or local “mini data centers” near where data is collected, organizations can avoid round trips to the cloud and thus reduce overall latency. According to Gartner, more than 75% of enterprise data will be created and processed outside centralized data centers by 2025, underlining the growing significance of edge intelligence.

In this post, we examine how edge computing impacts real-time AI applications, covering the motivations behind edge strategies, essential use cases, architectural design patterns, challenges to consider, and future outlook. Whether you’re part of a startup exploring new hardware solutions or an established enterprise seeking to optimize mission-critical systems, understanding how edge computing enables real-time AI can give you a strategic advantage.

Defining Edge Computing and Real-Time AI

Edge Computing

Edge computing is a paradigm that pushes computation and storage closer to where data is generated. Instead of transmitting all information from local endpoints to a central cloud for processing, edge computing places nodes (e.g., micro data centers, on-prem servers, or specialized gateways) near IoT endpoints. These local nodes perform essential computations, such as AI inference, data filtering, or event management, in near-real time.

Key attributes of edge computing include:

- Proximity to data source: Minimizing physical distance between where data is generated and where it is processed.

- Reduced latency: Data does not need to travel across long network paths to a central server.

- Contextual intelligence: Local systems can customize data processing and decision-making for specific environments.

- Enhanced reliability: Local processing can continue functioning even if cloud connectivity fails or is intermittent.

Real-Time AI

Real-time AI refers to artificial intelligence systems that produce inferences or decisions within tight, bounded time windows, often milliseconds. Unlike batch processing or delayed analysis, it aims to power critical applications in self-driving cars, robotic control, healthcare monitoring, and more.

Real-time AI requires:

- Low-latency data handling: Rapid ingestion, processing, and inference.

- Efficient model deployment: Scalable frameworks and optimized algorithms that operate quickly under constrained compute.

- High throughput: Handling continuous streams of sensor/camera data without bottlenecks.

When these two concepts—edge computing and real-time AI—converge, the result is Edge AI: a framework that processes high-volume, continuous data streams directly on local devices or close-proximity servers, delivering instantaneous (or near-instant) insights with minimal reliance on centralized resources.

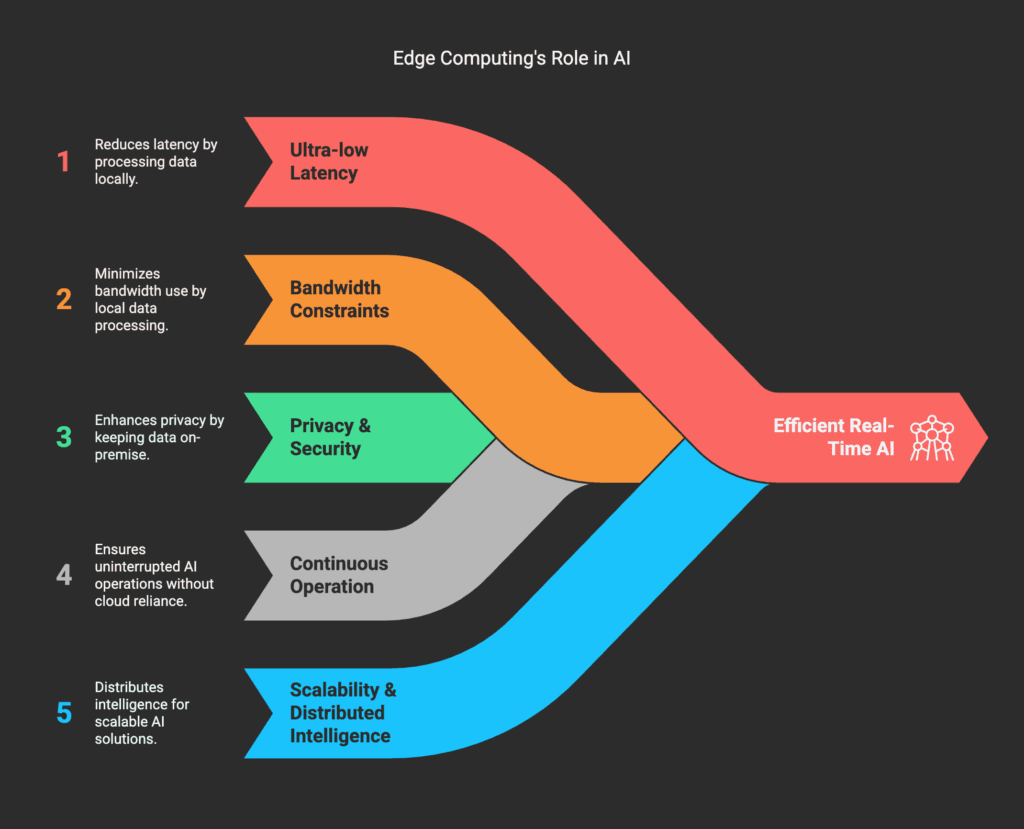

Why Edge Computing Matters for Real-Time AI

- Ultra-low Latency Requirements

Many real-time AI applications cannot tolerate the latency introduced by round-trip communication to a remote data center. For instance, an autonomous vehicle navigating dense traffic may need tasks like obstacle detection and route planning to complete in under 100 ms (or even under 10 ms). By leveraging edge computing, these decisions can be processed locally, significantly reducing end-to-end delays.

- Bandwidth and Network Constraints

When dealing with high-resolution video streams (e.g., 4K or 8K cameras) or massive sensor arrays, continuously uploading raw data to the cloud becomes prohibitively expensive. Edge computing allows for preprocessing or inference at the source, sending only relevant results (e.g., anomaly detections) to the cloud. This avoids straining network bandwidth and can also reduce operational costs.

- Privacy and Security

Regulations such as GDPR and HIPAA, along with various data-localization laws, restrict how sensitive data may be shared and where it may be transferred. Edge computing helps maintain privacy by performing initial or complete AI inference on-premises, reducing the risk of exposing sensitive data over public networks. It also keeps secure enclaves and hardware-based encryption physically under an organization’s control.

- Continuous Operation

Real-time AI systems often run 24/7 under dynamic or mission-critical conditions: think industrial robots on a factory floor, real-time patient monitoring, or street surveillance cameras. If connectivity to the cloud is interrupted, vital functions can be disrupted. With an edge-centric setup, applications can continue to operate autonomously, ensuring business continuity.

- Scalability and Distributed Intelligence

Instead of funneling all computing power into a single remote cluster, edge computing spreads intelligence across multiple micro data centers or edge nodes. This distributed model is especially powerful for large-scale IoT deployments, as it can handle data surges locally and collectively balance workloads across edge and cloud resources.
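To make the latency argument concrete, here is a minimal sketch that adds up an end-to-end budget for a single inference under two placements. Every number in it is an illustrative assumption rather than a measurement; the point is only to show how network hops can outweigh raw compute speed.

```python
from dataclasses import dataclass


@dataclass
class Placement:
    """Latency components in milliseconds; all values used below are illustrative assumptions."""
    name: str
    uplink_ms: float     # moving the input from the sensor to the compute node
    inference_ms: float  # model execution time on that node
    downlink_ms: float   # returning the result to the actuator

    def total_ms(self) -> float:
        return self.uplink_ms + self.inference_ms + self.downlink_ms


def meets_budget(p: Placement, budget_ms: float) -> bool:
    """True if the end-to-end latency fits within the application's deadline."""
    return p.total_ms() <= budget_ms


# Hypothetical: a modest on-vehicle accelerator vs. a faster GPU in a distant cloud region.
edge = Placement("edge", uplink_ms=1.0, inference_ms=15.0, downlink_ms=1.0)
cloud = Placement("cloud", uplink_ms=40.0, inference_ms=5.0, downlink_ms=40.0)

BUDGET_MS = 50.0  # assumed deadline for an obstacle-detection loop
for p in (edge, cloud):
    print(f"{p.name}: {p.total_ms():.1f} ms, within budget: {meets_budget(p, BUDGET_MS)}")
```

Even though the cloud GPU runs the model three times faster in this example, the two network hops push it past the deadline. A real analysis should also consider tail latency and jitter, which this sketch ignores.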

Key Technologies Driving Edge AI

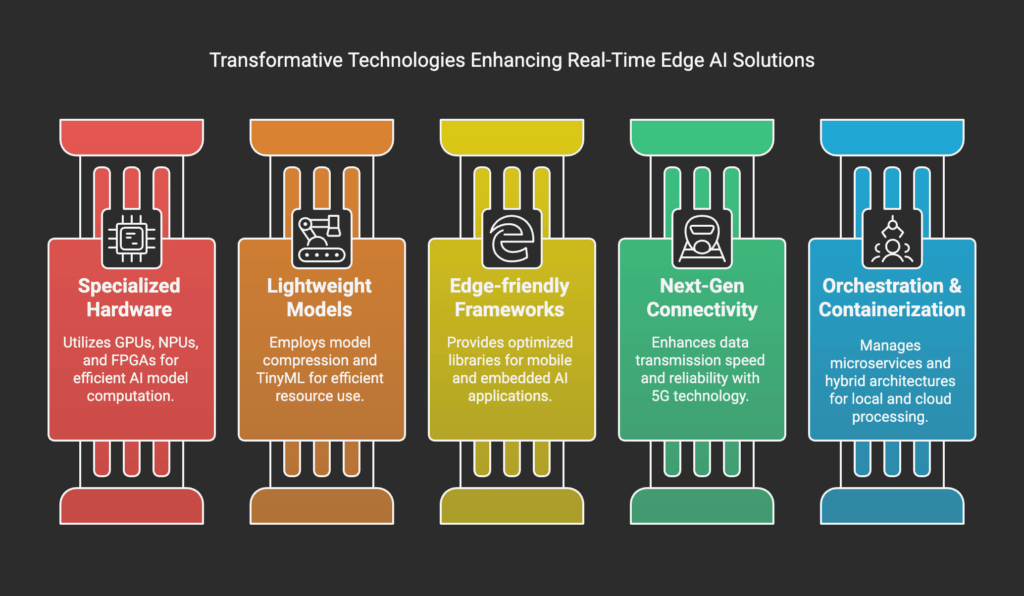

Edge computing wouldn’t have the transformative impact it does on real-time AI without several underlying technologies. Let’s explore some enablers:

- Specialized Edge Hardware

- Graphics Processing Units (GPUs) at the edge for parallel computation of large AI models.

- Neural Processing Units (NPUs) or AI Accelerators specifically designed for deep learning inference (e.g., Google Coral, Intel Movidius).

- Field-Programmable Gate Arrays (FPGAs) offering flexible, low-latency pipelines and power efficiency.

- Lightweight AI Models

- Model Compression: Techniques like pruning, quantization, and knowledge distillation slim down neural networks.

- TinyML: A subset of machine learning dedicated to extremely low-footprint models suitable for microcontrollers.

- Edge-friendly Frameworks

- TensorFlow Lite: Google's library tailored for mobile and embedded devices.

- ONNX Runtime: Supports multiple hardware backends and model formats.

- PyTorch Mobile: Offers optimized runtime for PyTorch models on mobile and embedded platforms.

- 5G and Next-Gen Connectivity

- Faster wireless technologies reduce the time it takes to transmit data from devices to edge nodes.

- Network slicing and ultra-reliable low-latency communication (URLLC) features support mission-critical, real-time AI tasks.

- Orchestration and Containerization

- Using Docker or Kubernetes on the edge to manage microservices, scale horizontally, and integrate seamlessly with the cloud.

- Hybrid architectures ensuring that data is processed locally when needed and aggregated in the cloud for further analytics or model retraining.

Each of these technologies amplifies the real-time capabilities of edge-based AI solutions. They address the constraints of limited on-site compute, connectivity reliability, and latency demands inherent in modern AI-driven tasks.
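As an illustration of the model-compression techniques listed above, here is a minimal sketch of affine 8-bit quantization applied to a list of weights in pure Python. Production toolchains such as TensorFlow Lite apply schemes like this per tensor or per channel and also calibrate activations; this sketch shows only the core arithmetic, and the function names are hypothetical.

```python
from typing import List, Tuple


def quantize_uint8(weights: List[float]) -> Tuple[List[int], float, int]:
    """Map floats to uint8 codes using an affine scale and zero point."""
    # Include 0.0 in the range so that zero maps exactly to an integer code.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    scale = (w_max - w_min) / 255.0 or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(-w_min / scale)
    q = [max(0, min(255, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate float values from the uint8 codes."""
    return [(v - zero_point) * scale for v in q]


weights = [-0.42, 0.0, 0.13, 0.57, -0.08]  # hypothetical layer weights
q, scale, zp = quantize_uint8(weights)
restored = dequantize(q, scale, zp)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.5f}", f"zero_point={zp}", f"max_error={max_err:.5f}")
```

Storing each weight in one byte instead of four is where the roughly 4x size reduction of int8 models comes from; the rounding error is bounded by the scale, but end-to-end accuracy should still be re-validated after quantization.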

Use Cases and Industry Applications

1. Autonomous Vehicles and Transportation

Autonomous cars, delivery drones, and intelligent traffic systems demand split-second decision-making. By processing sensor data (LiDAR, radar, cameras) at the edge—often within the vehicle’s onboard computer—vital safety decisions aren’t hindered by unreliable or high-latency cloud connections. Furthermore, real-time object detection and path planning ensure safer navigation in dynamic environments.

2. Industrial Automation (Industry 4.0)

Factories rely on real-time AI to optimize assembly lines, predict equipment failures (predictive maintenance), and control robotic arms. By deploying edge servers right on the factory floor, manufacturers can detect anomalies in machinery data instantly, preventing costly downtimes. IDC predicts that by 2025, half of all Industry 4.0 solutions will incorporate edge-based AI for reactive and predictive tasks.
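One very simple form of on-site anomaly detection is a rolling z-score over a sensor stream, which flags readings that sit far from the recent mean. The sketch below assumes a vibration signal, a 20-sample window, and a threshold of 3 standard deviations; the class name and all parameters are illustrative, and production predictive-maintenance systems typically use learned models rather than this heuristic.

```python
import math
from collections import deque


class RollingZScoreDetector:
    """Flags readings more than `threshold` standard deviations from a rolling mean."""

    def __init__(self, window: int = 50, threshold: float = 3.0, min_history: int = 10):
        self.values = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history

    def update(self, x: float) -> bool:
        """Return True if x is anomalous relative to the current window, then add it."""
        is_anomaly = False
        if len(self.values) >= self.min_history:  # wait for some history before judging
            mean = sum(self.values) / len(self.values)
            var = sum((v - mean) ** 2 for v in self.values) / len(self.values)
            std = math.sqrt(var)
            if std > 0 and abs(x - mean) / std > self.threshold:
                is_anomaly = True
        self.values.append(x)
        return is_anomaly


# Hypothetical vibration readings (mm/s): a small periodic pattern with one spike at index 30.
readings = [1.0 + 0.05 * ((i % 5) - 2) for i in range(40)]
readings[30] = 4.0
detector = RollingZScoreDetector(window=20)
flags = [i for i, r in enumerate(readings) if detector.update(r)]
print("anomalies at indices:", flags)
```

Note that this version appends anomalous readings to the window, which temporarily inflates the baseline; skipping flagged values is a reasonable alternative when spikes are frequent.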

3. Healthcare and Patient Monitoring

Real-time medical diagnostics—such as analyzing patient vitals in intensive care—requires immediate feedback. Edge computing can analyze ECG streams, vital sign sensors, or medical imaging data in seconds, alerting healthcare professionals to emergencies. This localized approach also helps maintain compliance with privacy regulations, ensuring that only anonymized or crucial data is sent to central cloud servers.

4. Retail and Smart Spaces

Edge-based cameras and sensors can perform instant crowd analytics, queue management, and inventory checks in retail stores without sending raw footage to the cloud. Likewise, smart buildings can leverage local edge servers to control HVAC systems in real time, optimizing energy consumption based on occupant behavior.

5. Public Safety and Smart Cities

Security cameras outfitted with real-time AI can detect suspicious activities, identify accidents, or monitor traffic congestion. Cities with large-scale camera networks or environmental sensor grids benefit from localized analytics, which can rapidly trigger alarms or direct emergency services. This approach eases the burden on cloud infrastructure and preserves bandwidth for only the most critical transmissions.
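A back-of-the-envelope calculation shows why camera networks benefit from local analytics. The figures below (200 cameras, 15 Mbps per compressed 4K stream, and a few 500-byte event messages per minute) are assumptions chosen for illustration; real bitrates depend heavily on codec, frame rate, and scene content.

```python
def daily_gigabytes(bits_per_second: float) -> float:
    """Convert a sustained bitrate into gigabytes per day (1 GB = 1e9 bytes)."""
    return bits_per_second * 86_400 / 8 / 1e9


CAMERAS = 200           # hypothetical city camera network
STREAM_BPS = 15e6       # assumed compressed 4K bitrate per camera
EVENT_BYTES = 500       # assumed size of one detection/alert message
EVENTS_PER_MINUTE = 6   # assumed alert rate per camera

# Cloud-only: every camera uploads its full stream.
raw_gb = CAMERAS * daily_gigabytes(STREAM_BPS)

# Edge: each camera's local node uploads only event messages.
events_bps = EVENT_BYTES * 8 * EVENTS_PER_MINUTE / 60
edge_gb = CAMERAS * daily_gigabytes(events_bps)

print(f"raw upload: {raw_gb:,.0f} GB/day, events only: {edge_gb:.2f} GB/day")
```

Under these assumptions the network would carry about 32,400 GB per day of raw video versus under 1 GB per day of event data.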

6. Farming and Agriculture

Smart farming employs drones and ground-based sensors that monitor crop health, soil moisture, or weather changes. Edge processing enables immediate decisions—like adjusting irrigation or applying fertilizer—without waiting for cloud feedback. This is crucial in remote fields with limited connectivity.
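Local control rules like these can be quite simple. As a sketch, an edge controller might switch irrigation using hysteresis so that a valve does not rapidly toggle around a single threshold. The moisture thresholds and the function name are hypothetical; in practice they would come from agronomic guidance or a learned model.

```python
from typing import Iterable, Iterator


def irrigation_controller(moisture_readings: Iterable[float],
                          on_below: float = 0.20,
                          off_above: float = 0.30) -> Iterator[bool]:
    """Yield valve states (True = open) using hysteresis on volumetric soil moisture.

    The valve opens when moisture drops below `on_below` and stays open until it
    rises above `off_above`, avoiding rapid toggling near a single threshold.
    """
    valve_open = False
    for m in moisture_readings:
        if not valve_open and m < on_below:
            valve_open = True
        elif valve_open and m > off_above:
            valve_open = False
        yield valve_open


readings = [0.25, 0.21, 0.19, 0.22, 0.27, 0.31, 0.28, 0.18]  # hypothetical sensor values
states = list(irrigation_controller(readings))
print(states)
```

Because the decision needs only the latest reading and one bit of state, it keeps working in remote fields even when the uplink is down.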

Each of these examples demonstrates edge computing’s essential role in enabling real-time or near-real-time AI. Without local processing, the latencies and network complexities could severely degrade the effectiveness and safety of these applications.

Technical Architectures and Data Flows

Real-time AI at the edge typically adopts a multi-layer architecture, balancing local inference with optional cloud collaboration for more resource-intensive tasks (like model training or global data aggregation). A common pattern looks like this:

- Data Generation

Sensors, cameras, and IoT devices collect raw information: images, signals, logs.

- Edge Node / Gateway

A local device with compute capabilities (CPU/GPU/NPU) receives data from endpoints. AI inference (e.g., object detection, predictive analytics) is executed immediately.

- Local Action

If an anomaly or critical event is detected (say, a manufacturing defect or a medical emergency), the edge node triggers real-time alerts or physical actuations (e.g., robotic arm stops, alarm system activates).

- Aggregation and Cloud Sync

Summarized results, metadata, or non-urgent data is sent to a central cloud for long-term storage, advanced analytics, or model retraining.

- Model Updates

Periodically, the cloud trains advanced AI models on aggregated global data. Updated models are then deployed back to the edge node, ensuring local intelligence remains current without saturating the edge device’s compute resources during the training phase.
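The five stages above can be sketched as a single edge-node loop. This is a hedged illustration in plain Python: the `EdgeNode` class, the scoring lambdas standing in for models, the alert threshold, and the in-memory "upload" list are all hypothetical stand-ins for a real inference runtime, actuator interface, and cloud client.

```python
from typing import Callable, List, Tuple

Model = Callable[[float], float]  # hypothetical: maps a reading to an anomaly score in [0, 1]


class EdgeNode:
    def __init__(self, model: Model, alert_threshold: float = 0.8, batch_size: int = 3):
        self.model = model
        self.alert_threshold = alert_threshold
        self.batch_size = batch_size
        self.pending: List[Tuple[float, float]] = []         # (reading, score) awaiting sync
        self.uploaded: List[List[Tuple[float, float]]] = []  # stands in for a cloud client
        self.alerts: List[float] = []

    def process(self, reading: float) -> None:
        score = self.model(reading)              # stage 2: local inference
        if score >= self.alert_threshold:
            self.alerts.append(reading)          # stage 3: immediate local action
        self.pending.append((reading, score))    # stage 4: buffer summaries for cloud sync
        if len(self.pending) >= self.batch_size:
            self.uploaded.append(self.pending)
            self.pending = []

    def update_model(self, new_model: Model) -> None:
        self.model = new_model                   # stage 5: swap in a retrained model


node = EdgeNode(model=lambda x: min(x / 100.0, 1.0))
for r in [10, 95, 40, 85]:  # stage 1: readings arriving from sensors
    node.process(r)
print("alerts:", node.alerts, "batches uploaded:", len(node.uploaded))

node.update_model(lambda x: min(x / 50.0, 1.0))  # a more sensitive model pushed from the cloud
node.process(45)
print("alerts after update:", node.alerts)
```

The key design point is that stages 2 and 3 never wait on the network: cloud sync is batched and model updates are applied between readings, so local response time is unaffected by connectivity.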

Mermaid Diagram: A Typical Edge AI Workflow

```mermaid
flowchart LR
    A["Data Sources<br/>(Sensors/Cameras)"] --> B["Edge Node<br/>(Local AI Inference)"]
    B --> C["Immediate Actions<br/>(Alerts/Controls)"]
    B --> D["Relevant Data Sent to Cloud"]
    D --> E["Model Retraining<br/>Big Data Analytics"]
    E -->|"Model updates (deployment to edge)"| B
```

Explanation

- A → B: Raw data is collected from distributed sensors and passed to the local edge node.

- B → C: The edge node runs real-time inference, triggering instant control or alerts.

- B → D: Only the essential data points or aggregated analytics get sent to the cloud.

- D → E: The cloud handles deeper analytics, such as generating insights from large historical datasets or retraining AI models.

- E → B: The improved model is pushed to the edge environment to continuously refine local inference.

Table: Comparing Edge vs. Cloud for Real-Time AI

Below is a concise table demonstrating the key differences between edge computing and cloud computing in real-time AI contexts:

| Aspect | Edge Computing | Cloud Computing |
|---|---|---|
| Latency | Extremely low; data processed locally | Higher; dependent on network round-trip times |
| Bandwidth | Minimal data transfer; only relevant outputs shared | Potentially high; raw data must be uploaded |
| Scalability | Limited by on-site hardware, but can scale via multiple distributed nodes | Virtually unlimited compute in large data centers |
| Reliability | Can operate offline or with intermittent connectivity | Dependent on stable Internet connectivity |
| Data Privacy | Reduced exposure; sensitive data stays on-prem | Risks of storing/processing sensitive data off-site |
| Cost Model | Upfront investment in local infrastructure | Pay-as-you-go for compute and storage |
| Model Training | Less suited for large-scale training | Ideal for big data analytics and deep model training |
| Use Cases | Time-critical applications (e.g., robotics, safety) | Batch analytics, large-scale data mining & archiving |

Challenges and Considerations

While the benefits of edge computing for real-time AI are significant, there are notable challenges:

- Hardware Constraints

Edge devices often have limited CPU/GPU capacity and constrained memory. Engineers must optimize AI models (through quantization or pruning) so that local inference remains efficient.

- Edge Security

Placing compute resources in the field can expose them to tampering or unauthorized access. Physical security, encryption, and secure boot processes are necessary to protect both hardware and data.

- Ecosystem Fragmentation

The edge landscape is diverse, with a variety of hardware vendors, operating systems, and protocols. Achieving interoperability requires standardization, containerization, or open frameworks such as EdgeX Foundry (a project hosted by LF Edge).

- Maintainability and Updates

In large-scale deployments, managing edge nodes scattered across different sites is challenging. Automated over-the-air (OTA) update mechanisms and remote device management are essential to keep model versions and security patches consistent across the fleet.

- Cost-Benefit Analysis

While edge systems can reduce bandwidth costs, they require up-front investment in localized hardware. A thorough ROI analysis should weigh potential latency improvements, security gains, and operational resilience against hardware and maintenance expenses.

- Regulatory Compliance

Local data processing might help with GDPR or HIPAA compliance, but there is still a need to validate that the entire pipeline (including partial cloud integrations) adheres to relevant regulations.
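For the over-the-air updates mentioned under maintainability, one common safeguard is to verify a downloaded model artifact against an expected SHA-256 digest and then swap it into place atomically. The sketch below assumes the expected digest arrives through a trusted channel (for example, a signed manifest, which is not shown); the `install_model` function and file names are hypothetical.

```python
import hashlib
import os
import tempfile


def install_model(payload: bytes, expected_sha256: str, target_path: str) -> bool:
    """Write `payload` to `target_path` only if its SHA-256 digest matches.

    The bytes go to a temporary file in the same directory and are then moved with
    os.replace, which is atomic on POSIX filesystems, so a crash mid-update never
    leaves a half-written model in place.
    """
    if hashlib.sha256(payload).hexdigest() != expected_sha256:
        return False  # reject corrupted or tampered artifacts; keep the current model
    directory = os.path.dirname(os.path.abspath(target_path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(payload)
            f.flush()
            os.fsync(f.fileno())
        os.replace(tmp_path, target_path)
    except BaseException:
        os.unlink(tmp_path)  # clean up the partial file, then re-raise
        raise
    return True


with tempfile.TemporaryDirectory() as d:
    target = os.path.join(d, "model.bin")
    good = b"new model weights"
    ok = install_model(good, hashlib.sha256(good).hexdigest(), target)
    rejected = not install_model(b"tampered", hashlib.sha256(good).hexdigest(), target)
    with open(target, "rb") as f:
        contents = f.read()
print(ok, rejected, contents)
```

A digest check only detects corruption or substitution if the digest itself is trustworthy, which is why it is usually paired with code signing and secure boot.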

Strategies for Implementation

1. Hybrid Model Deployment

Deploy “lightweight” or “distilled” models on edge nodes for real-time inference, while maintaining more computationally heavy training or high-accuracy analysis in the cloud. This approach ensures continuous improvement of models using global data without overwhelming on-site resources.
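Knowledge distillation is one way to produce a “distilled” edge model: a small student is trained to match the temperature-softened output distribution of a larger teacher. The sketch below computes only the soft-target term of the loss for a single example in plain Python (the KL divergence is commonly scaled by T squared); the logits and temperature are illustrative, and a real training loop would use a framework such as PyTorch and usually combine this term with an ordinary hard-label loss.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax; temperature > 1 flattens the distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: List[float], student_logits: List[float],
                      temperature: float = 4.0) -> float:
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [8.0, 2.0, 0.5]        # hypothetical logits from a large cloud-trained model
close_student = [7.5, 2.2, 0.4]  # a student that mostly agrees
far_student = [0.5, 2.0, 8.0]    # a student that disagrees
print(distillation_loss(teacher, close_student), distillation_loss(teacher, far_student))
```

The softened teacher distribution carries information about which wrong classes are "almost right", which is what lets a much smaller student approach the teacher's accuracy.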

2. Containerization

Use Docker or Kubernetes on edge nodes (where feasible) to standardize deployment. Containers encapsulate dependencies, simplifying updates and scaling, and lightweight Kubernetes distributions such as K3s can run on resource-constrained devices.

3. Edge-Cloud Collaboration

Instead of an either-or approach, leverage the cloud for large-scale data aggregation, advanced analytics, or training. Meanwhile, keep only critical inference tasks at the edge, ensuring quick response times.
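One common way to divide inference between edge and cloud is confidence-based escalation: the edge model answers when it is confident, and only ambiguous inputs are forwarded to a larger cloud model. In this sketch, the `classify` function, the stand-in models, and the 0.7 threshold are hypothetical; a real system would also need timeouts on the cloud call.

```python
from typing import Callable, Optional, Tuple

Prediction = Tuple[str, float]  # (label, confidence)


def classify(x: object,
             edge_model: Callable[[object], Prediction],
             cloud_model: Optional[Callable[[object], Prediction]],
             threshold: float = 0.7) -> Tuple[str, str]:
    """Return (label, source), escalating to the cloud only for low-confidence edge results."""
    label, confidence = edge_model(x)
    if confidence >= threshold or cloud_model is None:
        return label, "edge"
    try:
        cloud_label, _ = cloud_model(x)
        return cloud_label, "cloud"
    except ConnectionError:
        return label, "edge-fallback"  # keep operating when the uplink is down


# Hypothetical stand-ins for real models.
def edge_model(x: object) -> Prediction:
    return ("person", 0.95) if x == "clear" else ("person", 0.55)


def cloud_model(x: object) -> Prediction:
    return ("cyclist", 0.9)


def offline_cloud(x: object) -> Prediction:
    raise ConnectionError("uplink unavailable")


print(classify("clear", edge_model, cloud_model))
print(classify("blurry", edge_model, cloud_model))
print(classify("blurry", edge_model, offline_cloud))
```

Falling back to the edge answer when the cloud is unreachable preserves the reliability advantage of local inference while still using cloud capacity when it is available.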

4. Security by Design

Implement end-to-end encryption, secure enclaves (e.g., Intel SGX), or hardware-based root of trust. Regularly audit edge devices for vulnerabilities and maintain strict access control. This is especially critical for remote or public-facing edge nodes.

5. Monitoring and Logging

Use specialized logging frameworks that can run locally while buffering or batching logs to the cloud. Real-time analytics at the edge can detect anomalies and create alerts without flooding your WAN or cloud environment with raw logs.
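The buffering-and-batching idea can be sketched as a small log shipper that accumulates records locally and flushes them when a batch fills up or when the oldest buffered record gets too old. The `send` callback stands in for whatever upload client you use; the class name and defaults are assumptions, and a production version would also persist the buffer to disk, retry failed uploads, and flush on a timer during idle periods.

```python
import time
from typing import Callable, List, Optional


class BatchingLogShipper:
    """Buffers log records and ships them in batches, by count or by age."""

    def __init__(self, send: Callable[[List[str]], None], max_batch: int = 100,
                 max_age_s: float = 30.0, clock: Callable[[], float] = time.monotonic):
        self.send = send
        self.max_batch = max_batch
        self.max_age_s = max_age_s
        self.clock = clock
        self.buffer: List[str] = []
        self.first_ts: Optional[float] = None  # arrival time of the oldest buffered record

    def log(self, record: str) -> None:
        if not self.buffer:
            self.first_ts = self.clock()
        self.buffer.append(record)
        too_many = len(self.buffer) >= self.max_batch
        too_old = self.clock() - self.first_ts >= self.max_age_s
        if too_many or too_old:
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            self.send(self.buffer)
            self.buffer = []
            self.first_ts = None


sent: List[List[str]] = []
fake_now = [0.0]  # controllable clock for the example
shipper = BatchingLogShipper(sent.append, max_batch=3, max_age_s=10.0, clock=lambda: fake_now[0])
for i in range(4):
    shipper.log(f"event {i}")  # the third record fills the batch and triggers a flush
fake_now[0] = 12.0
shipper.log("event 4")         # the oldest buffered record is now older than 10 s
print(sent)
```

Injecting the clock keeps the shipper testable without sleeping, and batching turns many tiny uploads into a few larger ones over the WAN.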

6. AI Model Lifecycle

- Data Capture: Gather real-time data at the edge, store locally if needed.

- Model Training: Typically done in the cloud on large, aggregated datasets.

- Model Optimization: Prune or quantize for edge deployment.

- Continuous Integration/Continuous Deployment (CI/CD): Automate model testing and rollout to edge devices.

- Monitoring: Track inference accuracy and performance at the edge.

A structured lifecycle ensures that real-time AI models remain both high-performing and maintainable across distributed environments.
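In a CI/CD pipeline, the automated testing step often takes the form of a promotion gate: a candidate edge model is rolled out only if it meets accuracy, latency, and size limits measured on representative hardware. The metric names, thresholds, and the `promotion_failures` function below are hypothetical placeholders for whatever your pipeline actually records.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ModelMetrics:
    accuracy: float        # on a held-out validation set
    p95_latency_ms: float  # measured on the target edge device
    size_mb: float         # serialized model size


def promotion_failures(candidate: ModelMetrics, current: ModelMetrics,
                       max_accuracy_drop: float = 0.01,
                       latency_budget_ms: float = 30.0,
                       max_size_mb: float = 20.0) -> List[str]:
    """Return the reasons a candidate must not be rolled out (an empty list means promote)."""
    failures = []
    if candidate.accuracy < current.accuracy - max_accuracy_drop:
        failures.append("accuracy regression")
    if candidate.p95_latency_ms > latency_budget_ms:
        failures.append("latency over budget")
    if candidate.size_mb > max_size_mb:
        failures.append("model too large for device")
    return failures


current = ModelMetrics(accuracy=0.912, p95_latency_ms=24.0, size_mb=12.0)
quantized = ModelMetrics(accuracy=0.905, p95_latency_ms=11.0, size_mb=3.1)
oversized = ModelMetrics(accuracy=0.930, p95_latency_ms=41.0, size_mb=48.0)
print(promotion_failures(quantized, current))
print(promotion_failures(oversized, current))
```

Here the quantized model passes despite a small accuracy drop, while the more accurate but heavier model is blocked because it would not meet the device's latency and size limits.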

Future Trends and Market Outlook

The convergence of edge computing and AI has prompted a range of forecasts from market analysts. Here are some forward-looking insights:

- Wider Adoption of TinyML

TinyML is expected to bring neural network inference to microcontrollers running on milliwatt-scale (or lower) power budgets, opening up real-time AI for battery-operated devices such as wearables and remote sensors.

- 5G and 6G Evolution

As 5G networks roll out globally and 6G research accelerates, ultra-reliable low-latency communication (URLLC) should become more widely available. These networks will further reduce the time it takes to offload or share data between devices and edge nodes, amplifying real-time AI capabilities.

- Expansion into New Verticals

Beyond automotive and industrial automation, edge AI is likely to grow in precision agriculture, telemedicine, space tech, and energy management, all of which involve immediate data-driven actions.

- Edge-Cloud Governance

As the number of edge nodes soars, orchestrating large fleets will demand robust governance platforms. These platforms will unify security policies, update schedules, compliance checks, and model performance tracking across thousands or millions of geographically dispersed endpoints.

- Autonomous Drones and Robotics

Drones used for delivery, inspections, or aerial analytics can rely on onboard edge computing to avoid collisions, recognize landmarks, and adapt to changing conditions in real time. The same goes for industrial robots that must respond quickly to changes in a dynamic environment.

- New Business Models

Edge computing providers may offer edge-as-a-service, where enterprises rent local processing capacity near their facility or region. This pay-as-you-go edge model reduces up-front costs and lowers barriers for real-time AI adoption.

According to IDC, the global edge computing market is projected to exceed $250 billion by 2026, with a significant portion dedicated to AI and IoT-related deployments. As organizations realize the value of immediate analytics and robust data sovereignty, the demand for real-time AI at the edge will only accelerate.

Conclusion

Edge computing is more than just a buzzword: it represents a fundamental shift in how we design, deploy, and scale AI solutions. Placing computational resources closer to data sources makes real-time AI not only feasible but highly efficient for time-critical applications. Whether it’s an autonomous vehicle detecting obstacles at high speed, a manufacturing plant that cannot afford to wait on remote servers for anomaly detection, or a remote telemedicine solution delivering immediate diagnostics, edge computing is unlocking new frontiers in responsiveness, security, and cost-effectiveness.

Key takeaways:

- Low Latency: Edge architectures can drastically reduce end-to-end response times, enabling split-second decisions in real-world environments.

- Efficient Resource Utilization: By offloading tasks to local nodes, organizations conserve bandwidth and optimize cloud usage.

- Improved Privacy and Reliability: Sensitive data can remain on-premises, and local inference can continue even during network outages.

- Evolving Ecosystem: Hardware accelerators, model optimization techniques, and orchestration platforms are rapidly maturing, making edge deployments more accessible.

For businesses aiming to harness the full power of AI in real-time, edge computing is an essential strategy. It bridges the gap between raw data streams and actionable intelligence, shaping how next-generation innovations will operate—quickly, securely, and efficiently. Embracing this architectural mindset today will prepare organizations for the data-driven realities of tomorrow, positioning them at the forefront of technological leadership and market competitiveness.