With the deepening integration of artificial intelligence in sensitive fields such as healthcare, finance, retail, and speech recognition, traditional centralized modeling methods face unprecedented challenges:

- Data cannot be centrally shared: Medical institutions and enterprises are bound by regulations (such as GDPR and HIPAA) that restrict moving sensitive data to the cloud.

- Privacy protection has become a core requirement: Users increasingly expect AI services that do not require exposing their data.

- Rise of intelligent terminal devices: Mobile phones and IoT devices have become significant data sources, so models should follow the data.

Against this backdrop, Federated Learning (FL) has become a widely recognized solution. It keeps data local and trains models collaboratively by exchanging model parameters. This balances model performance with privacy protection and makes FL a foundational architecture for the future of AI.

I. What is Federated Learning?

Federated Learning is a distributed machine learning framework. Its core idea is to distribute model training across multiple local devices or servers, so that participants can collaboratively build a global model without sharing raw data. The approach was first proposed by Google in 2016 to address data silos and privacy protection.

Compared to traditional centralized machine learning, federated learning features:

- Data Localization: Raw data remains on local devices, which avoids the risks of transmitting it over the network.

- Model Sharing: Participants only share model parameters or gradients, not original data.

- Privacy Protection: Privacy can be further strengthened with differential privacy and encrypted computation.

This framework is especially suitable for sectors like healthcare, finance, and mobile devices where data privacy is paramount.

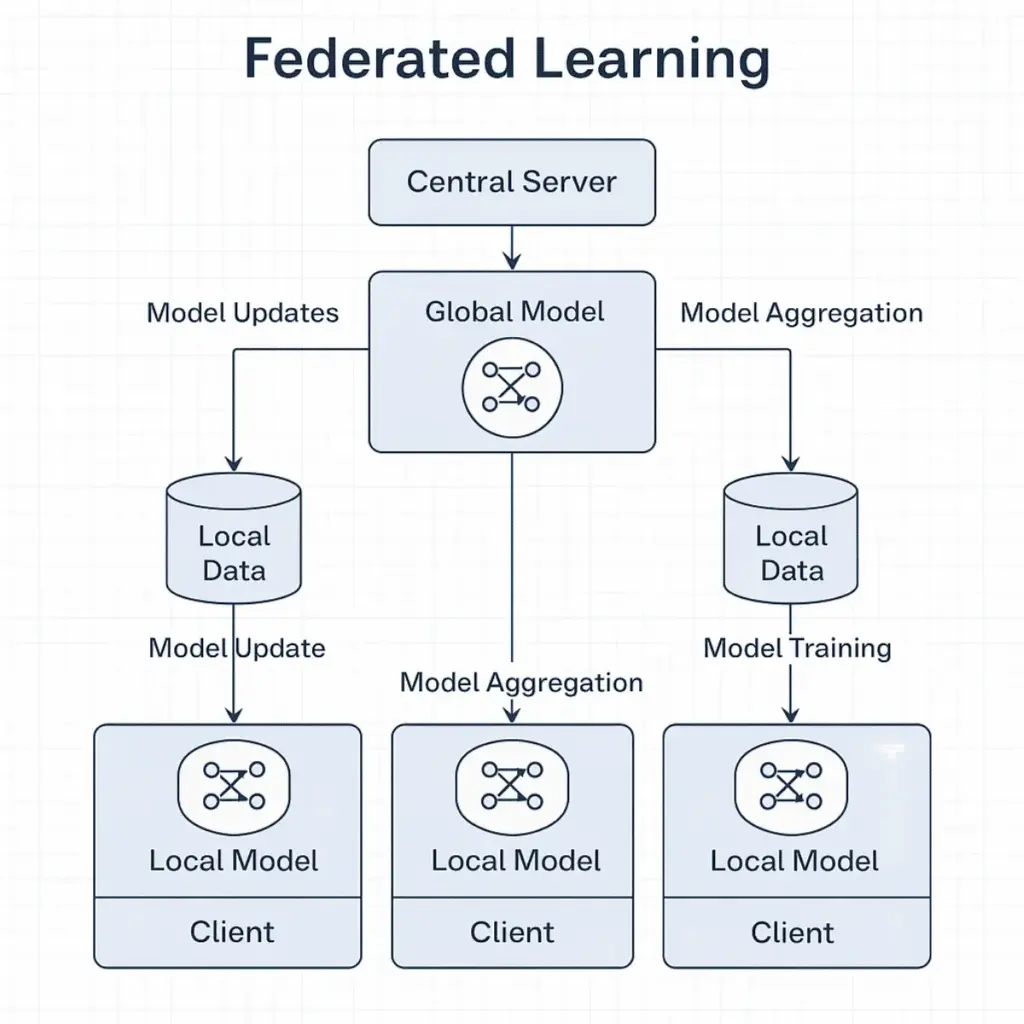

Workflow of Federated Learning

The typical workflow of federated learning includes:

- Initialize Global Model: A central server initializes and distributes the global model to participants.

- Local Model Training: Participants train the received model using local data.

- Upload Model Updates: Participants send their local updates (gradients or weights) back to the server.

- Aggregate Model Updates: The central server aggregates these updates (e.g., weighted averaging) to update the global model.

- Iterative Training: Repeat the process until convergence or a predefined number of rounds.

This process enables collaborative training without centralizing raw data, which substantially reduces privacy exposure.
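The workflow above can be illustrated with a toy, framework-free simulation. This is a minimal sketch under simplifying assumptions, not Flower's or Google's implementation. Each hypothetical client holds a few (x, y) points for a one-parameter model y = w·x and runs a few steps of local gradient descent. The server then averages the returned weights in proportion to each client's sample count (FedAvg-style weighted averaging).

```python
"""Toy FedAvg: server averages locally trained weights, weighted by data size."""


def local_train(w, data, lr=0.05, epochs=5):
    # Plain gradient descent on mean squared error for the model y = w * x
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def fed_avg(updates):
    # updates: list of (weight, num_examples); average weighted by num_examples
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


def run_federated(clients, rounds=20, w_init=0.0):
    w_global = w_init  # 1. server initializes the global model
    for _ in range(rounds):  # 5. repeat for a fixed number of rounds
        updates = []
        for data in clients:  # 2. each client trains on its own data
            w_local = local_train(w_global, data)
            updates.append((w_local, len(data)))  # 3. only weights are uploaded
        w_global = fed_avg(updates)  # 4. server aggregates the updates
    return w_global


# Hypothetical clients whose private data all follow y = 3x
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(0.5, 1.5), (1.5, 4.5), (3.0, 9.0)],
    [(2.5, 7.5)],
]
print(round(run_federated(clients), 3))
```

Weighting by sample count means a client with three examples pulls the average three times harder than a client with one. This mirrors the "weighted averaging" step in the list.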

Federated Learning System Architecture

Federated learning typically comprises three types of nodes:

1️⃣ Central Coordination Node (Server)

- Distributes the initial model

- Collects model updates from clients

- Performs model aggregation algorithms (e.g., weighted averaging)

- Pushes the updated global model

2️⃣ Edge Clients

- Store local data (e.g., smartphones, hospital databases, bank terminals)

- Conduct local training

- Upload model weights or gradients

3️⃣ Secure/Intermediate Proxy (optional)

- Provides parameter encryption, authentication, and anonymization

- Prevents leakage of client identities or model parameter details

One design goal of federated learning is compatibility with heterogeneous devices, which requires the architecture to support:

- Varying network latencies

- Diverse computational capabilities

- Client reconnection mechanisms

Data Privacy Protection Mechanisms

Federated learning isn't inherently secure; it still faces attack risks such as inference attacks from model updates. Thus, it must incorporate privacy-enhancing technologies:
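A toy example shows why shared updates can leak data. It uses a hypothetical linear model with squared loss L = (w·x + b − y)², trained on a single example. The gradients are ∂L/∂w = 2(pred − y)·x and ∂L/∂b = 2(pred − y), so dividing one by the other recovers the private input x exactly. This sketch is only an illustration: attacks on deep networks typically reconstruct inputs by optimization rather than by this closed form.

```python
"""Toy gradient-leakage demo: one example's gradient reveals its input."""


def gradients(w, b, x, y):
    # Squared loss L = (w . x + b - y)^2 for a linear model
    pred = sum(wi * xi for wi, xi in zip(w, x)) + b
    residual = 2 * (pred - y)
    grad_w = [residual * xi for xi in x]  # dL/dw = 2(pred - y) * x
    grad_b = residual                     # dL/db = 2(pred - y)
    return grad_w, grad_b


def reconstruct_input(grad_w, grad_b):
    # x = (dL/dw) / (dL/db), valid whenever the residual is non-zero
    return [g / grad_b for g in grad_w]


w, b = [0.5, -1.0, 2.0], 0.1
private_x, private_y = [4.0, 2.0, 1.0], 1.0  # a client's private record
gw, gb = gradients(w, b, private_x, private_y)
print(reconstruct_input(gw, gb))  # [4.0, 2.0, 1.0], the private input
```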

1. Differential Privacy (DP)

Calibrated noise is added to gradients or parameters so that no single data record noticeably changes the result, which hinders data reconstruction.

| Advantages | Challenges |
|---|---|
| Clear mathematical privacy guarantees | Impacts model convergence accuracy |
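A common way to apply DP to client updates is the Gaussian mechanism, as used in DP-FedAvg-style training. Each update's L2 norm is clipped, and Gaussian noise scaled to the clipping bound is added. The sketch below shows only that mechanism. The `clip_norm` and `noise_multiplier` values are hypothetical. Turning them into an (ε, δ) guarantee requires a privacy accountant, which is omitted here.

```python
"""Clip-and-noise (Gaussian mechanism) applied to a client update vector."""
import math
import random


def clip_update(update, clip_norm):
    # Scale the update down so that its L2 norm is at most clip_norm
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [u * scale for u in update]


def privatize_update(update, clip_norm=1.0, noise_multiplier=1.0, rng=random):
    # Add N(0, (noise_multiplier * clip_norm)^2) noise to every clipped coordinate
    clipped = clip_update(update, clip_norm)
    sigma = noise_multiplier * clip_norm
    return [u + rng.gauss(0.0, sigma) for u in clipped]


rng = random.Random(0)
raw = [3.0, 4.0]  # L2 norm 5.0
print(clip_update(raw, 1.0))  # scaled down to L2 norm 1.0
print(privatize_update(raw, clip_norm=1.0, noise_multiplier=0.5, rng=rng))
```

Clipping bounds how much any single client can move the aggregate, and that bound is what makes the noise scale meaningful. A larger `noise_multiplier` gives stronger privacy but slower, less accurate convergence, which is the trade-off in the table.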

2. Secure Multi-Party Computation (SMPC)

Parties jointly compute a result, such as the sum of model updates, without revealing their private inputs. Shamir secret sharing is a common building block for this kind of aggregation.

| Advantages | Challenges |
|---|---|
| Encrypted model updates prevent interception | High communication overhead; best suited to high-security scenarios |
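The core idea of secret-sharing-based aggregation can be shown with additive secret sharing over a prime field. This is a simplified stand-in for Shamir's scheme, which additionally tolerates dropouts through threshold reconstruction. Each client splits its integer-encoded update into random shares, one per aggregator. No single share reveals anything, yet all the shares together sum to the total. The modulus and fixed-point encoding are assumptions for illustration.

```python
"""Additive secret sharing: aggregators learn only the sum of client updates."""
import random

PRIME = 2**61 - 1   # field modulus (assumed large enough for the encoded sums)
SCALE = 10**6       # fixed-point scale for encoding real-valued updates


def encode(x):
    return round(x * SCALE) % PRIME


def decode(v):
    # Map back from the field, treating values above PRIME // 2 as negative
    if v > PRIME // 2:
        v -= PRIME
    return v / SCALE


def share(value, n_parties, rng):
    # n-1 uniformly random shares plus one share that makes the total equal value
    shares = [rng.randrange(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def secure_sum(client_values, n_aggregators=3, seed=0):
    rng = random.Random(seed)
    # Each aggregator accumulates exactly one share from every client
    totals = [0] * n_aggregators
    for x in client_values:
        for i, s in enumerate(share(encode(x), n_aggregators, rng)):
            totals[i] = (totals[i] + s) % PRIME
    # Combining the aggregators' totals reveals only the overall sum
    return decode(sum(totals) % PRIME)


print(secure_sum([0.25, -1.5, 2.0]))  # 0.75, the sum of the private values
```

The extra messages, one share per client per aggregator, illustrate where the "high communication overhead" in the table comes from.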

3. Homomorphic Encryption

Computations can be performed directly on encrypted model parameters without decrypting them.

| Advantages | Challenges |
|---|---|
| Very high privacy guarantees | Significant computational costs, unsuitable for lightweight devices |
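Additively homomorphic schemes such as Paillier let a server add encrypted updates without decrypting them. The toy implementation below uses tiny, insecure primes purely for illustration. Real deployments use vetted libraries (for example TenSEAL, which implements the CKKS and BFV schemes) and much larger keys. The sketch shows that multiplying two ciphertexts produces a ciphertext that decrypts to the sum of the plaintexts.

```python
"""Toy Paillier cryptosystem (insecure key size) showing additive homomorphism."""
import math
import random


def keygen(p=1009, q=1013):
    # Toy primes; real keys use primes of roughly 1024 bits or more
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    g = n + 1
    # mu = (L(g^lam mod n^2))^-1 mod n, where L(u) = (u - 1) // n
    mu = pow((pow(g, lam, n * n) - 1) // n, -1, n)
    return (n, g), (lam, mu, n)


def encrypt(pub, m, rng=random):
    n, g = pub
    while True:  # random r must be coprime with n
        r = rng.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n * n) * pow(r, n, n * n)) % (n * n)


def decrypt(priv, c):
    lam, mu, n = priv
    return ((pow(c, lam, n * n) - 1) // n * mu) % n


def add_encrypted(pub, c1, c2):
    # Multiplying ciphertexts adds the underlying plaintexts (mod n)
    n, _ = pub
    return (c1 * c2) % (n * n)


pub, priv = keygen()
c_a, c_b = encrypt(pub, 120), encrypt(pub, 305)  # two clients' integer updates
c_sum = add_encrypted(pub, c_a, c_b)             # server never sees 120 or 305
print(decrypt(priv, c_sum))  # 425
```

Even at this toy size, every operation is a modular exponentiation on large integers. This is the source of the computational cost noted in the table.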

Combined solutions (e.g., FedAvg + DP + SMPC) are the mainstream choice in industrial deployments, balancing performance and security.

II. Federation AI: A Privacy-First Approach to Machine Learning

Federation AI refers to artificial intelligence systems built on federated learning principles—where models are trained across distributed data sources without moving the raw data.

Unlike traditional centralized AI systems that collect all data in one place, federation AI uses on-device or on-premise training to ensure data stays secure and private. This makes it ideal for regulated industries like healthcare, finance, and smart IoT environments.

Federation AI is not just a trend—it's a key shift in how we build AI systems that are scalable, compliant, and privacy-aware.

2.1 Federated Learning in Healthcare: Secure AI for Sensitive Data

Federated learning in healthcare is one of the most impactful use cases of this technology. Medical institutions—like hospitals, research labs, and pharmaceutical companies—can collaboratively train AI models using electronic health records (EHRs), medical images, or genomic data without ever sharing patient data.

Here’s how it works:

- Each hospital trains the model on its local data.

- Only model parameters are sent to a central server for aggregation.

- The result: a powerful, privacy-compliant global AI model.

✅ Why Healthcare Needs Federated Learning

- Protects sensitive patient information (HIPAA/GDPR compliant)

- Enables cross-institutional collaboration

- Improves disease prediction and diagnostics

- Reduces legal and ethical risks

Whether used for early cancer detection, MRI image analysis, or COVID-19 patient monitoring, federated learning in healthcare is paving the way for smarter, safer medical AI solutions.

III. Basic Principles and System Architecture of Federated Learning

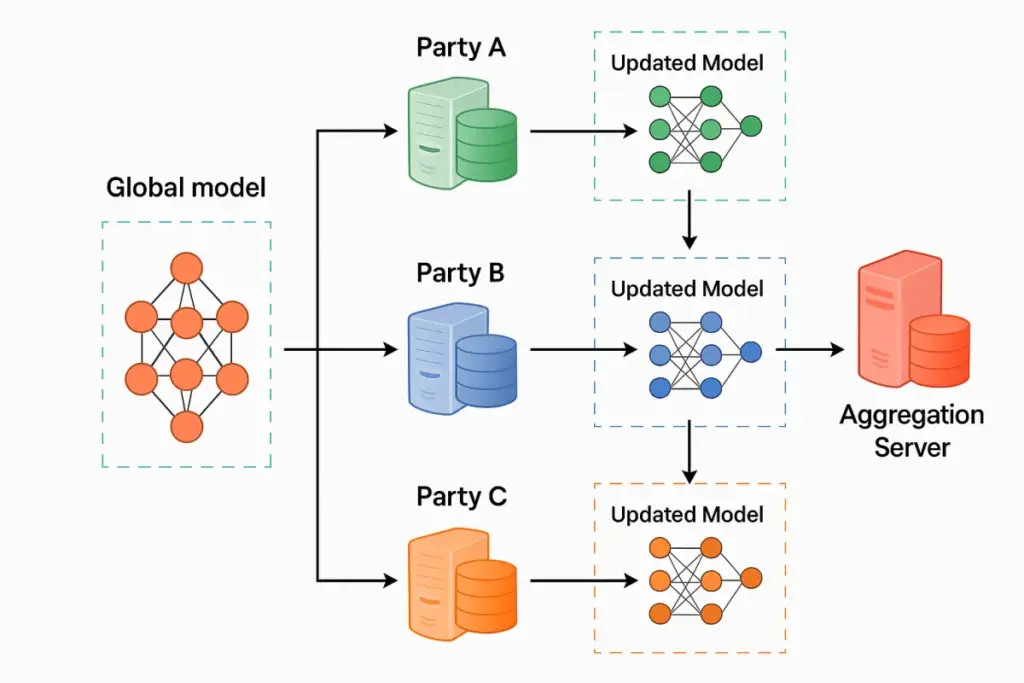

3.1 Definition

Federated learning is a decentralized machine learning framework enabling multiple data holders to collaboratively train a model without sharing raw data. Its core principle is:

Bring the model to the data for training instead of uploading data to the model.

Thus, data remains local, and only model updates (parameters, gradients) are aggregated into a global model.

3.2 Standard Training Workflow

A typical federated learning training process involves:

- Server initializes the global model

- Selects a batch of clients for training

- Distributes the model to clients

- Clients train the model locally

- Clients upload updated model parameters

- Server aggregates client parameters and updates the global model

- Repeat until convergence

Mermaid Sequence Diagram:

```mermaid
sequenceDiagram
    participant Server
    participant Client1
    participant Client2
    participant Client3
    Server->>Client1: Distribute initial model
    Server->>Client2: Distribute initial model
    Server->>Client3: Distribute initial model
    Client1-->>Server: Upload locally trained model
    Client2-->>Server: Upload locally trained model
    Client3-->>Server: Upload locally trained model
    Note over Server: Aggregate updates into a new global model
```

3.3 Advantages Summary

| Advantage | Description |
|---|---|
| Privacy Protection | Raw data is not transmitted, which eases regulatory compliance |
| High Data Usability | Data stays in place while the model moves, so otherwise inaccessible data becomes usable |
| Strong Scalability | Can scale to tens of thousands of devices |
| Supports Heterogeneous Devices | Deployable across mobile, edge, and servers |

3.4 Recommended Federated Learning Frameworks

| Framework | Developer | Features |
|---|---|---|
| TensorFlow Federated | Google | Deep integration with TensorFlow, research-oriented |
| PySyft | OpenMined | Supports DP, SMPC, ideal for labs and education |
| FATE | WeBank | Industrial-grade FL platform supporting horizontal, vertical, and transfer modes |
| Flower | Flower Labs (open source) | Modular design, supports PyTorch/TensorFlow, quick prototyping |

IV. Practical Example: Federated Fine-tuning of Whisper Model with Flower

4.1 Case Background

Whisper, a multilingual automatic speech recognition (ASR) model by OpenAI, excels in transcription and translation. However, deployment challenges include:

- Different organizations hold diverse speech data (dialects, accents, jargon)

- Sensitive data preventing centralized training

Federated learning is well suited to cross-institutional fine-tuning for speech recognition, improving adaptation to each participant's local speech data.

4.2 Flower Framework Overview

Flower is a general, modular federated learning framework compatible with PyTorch, TensorFlow, JAX, etc. Its advantages:

- ✅ Supports horizontal federated learning

- ✅ Customizable training logic and aggregation strategies

- ✅ Supports client simulation and local multi-instance debugging

- ✅ Integrable with differential privacy and encryption mechanisms

Frameworks supported by Flower:

| Deep Learning Framework | Support |
|---|---|
| PyTorch | ✅ Fully supported |
| TensorFlow / Keras | ✅ Supported |
| JAX / NumPy | ✅ Supported |
| Scikit-learn | ✅ Supported (lightweight models) |
| Hugging Face Transformers | ✅ Supported via PyTorch |

4.3 Whisper Federated Training Project Structure

```text
whisper-federated-finetuning/
├── client/
│   ├── client.py    # Flower client logic
│   └── trainer.py   # Model training functions
├── server/
│   └── server.py    # Flower server and strategy config
├── dataset/
│   └── utils.py     # Data handler (audio/labels)
└── requirements.txt
```

4.4 Key Client-side Code Snippets

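```python
import flwr as fl

# Project helpers (assumed to live in client/trainer.py); they are not part of Flower.
from trainer import get_model_weights, set_model_weights, train
from trainer import evaluate as evaluate_model


class WhisperClient(fl.client.NumPyClient):
    def __init__(self, model, train_loader, test_loader):
        self.model = model
        self.train_loader = train_loader
        self.test_loader = test_loader

    def get_parameters(self, config):
        # Current local weights as a list of NumPy arrays
        return get_model_weights(self.model)

    def fit(self, parameters, config):
        # Load global weights, fine-tune on local audio, return weights + sample count
        set_model_weights(self.model, parameters)
        train(self.model, self.train_loader)
        return get_model_weights(self.model), len(self.train_loader.dataset), {}

    def evaluate(self, parameters, config):
        # Evaluate the received global weights on the local test split
        set_model_weights(self.model, parameters)
        loss = evaluate_model(self.model, self.test_loader)
        return float(loss), len(self.test_loader.dataset), {}
```

Explanation:

- `get_parameters()` returns the current model parameters.

- `fit()` trains locally on the client's audio data and returns the updated weights together with the number of training examples, which the server uses for weighted averaging.

- `evaluate()` evaluates the received model on local test data and returns the loss.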

4.5 Server Aggregation Setup

Flower provides flexible aggregation strategies like FedAvg:

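```python
import flwr as fl


def load_training_config(server_round: int):
    # Hypothetical per-round settings, delivered to each client's fit() as `config`
    return {"server_round": server_round, "local_epochs": 1}


strategy = fl.server.strategy.FedAvg(
    fraction_fit=0.5,          # sample 50% of available clients per round
    min_fit_clients=3,         # ...but never fewer than 3
    min_available_clients=5,   # wait until at least 5 clients are connected
    on_fit_config_fn=load_training_config,
)

fl.server.start_server(
    server_address="0.0.0.0:8080",
    config=fl.server.ServerConfig(num_rounds=10),
    strategy=strategy,
)
```

Explanation: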

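- The FedAvg strategy aggregates client updates by weighted averaging.

- `fraction_fit` sets the share of available clients sampled each round, `min_fit_clients` and `min_available_clients` set lower bounds on participation, and `num_rounds` sets the number of training rounds.

- The global model improves over successive aggregation rounds.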
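The interaction between `fraction_fit` and `min_fit_clients` can be sketched as follows. As we understand it, this reproduces the sampling rule in Flower's FedAvg. The server samples `int(available * fraction_fit)` clients but never fewer than `min_fit_clients`, and it waits until `min_available_clients` are connected. Treat this as an illustration, not Flower source code; `sample_clients` is a hypothetical helper.

```python
"""Sketch of per-round client sampling driven by fraction_fit / min_fit_clients."""
import random


def num_fit_clients(num_available, fraction_fit=0.5, min_fit_clients=3):
    # Sample a fraction of available clients, but never fewer than the minimum
    return max(int(num_available * fraction_fit), min_fit_clients)


def sample_clients(client_ids, fraction_fit=0.5, min_fit_clients=3,
                   min_available_clients=5, rng=random):
    if len(client_ids) < min_available_clients:
        return []  # not enough clients connected; the server would keep waiting
    k = num_fit_clients(len(client_ids), fraction_fit, min_fit_clients)
    return rng.sample(client_ids, min(k, len(client_ids)))


print(num_fit_clients(5))    # 3: int(2.5) = 2, raised to the minimum of 3
print(num_fit_clients(100))  # 50
```

With the configuration shown above and exactly five connected clients, the minimum, not the fraction, determines how many clients train each round.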

V. Key Mechanisms and Optimization Strategies: Building Efficient FL Systems

5.1 Deep Dive into Aggregation Algorithms

While FedAvg is the standard aggregation strategy, real-world applications might benefit from enhanced versions:

| Strategy | Principle | Suitable Scenario |
|---|---|---|
| FedAvgM | Adds server-side momentum to improve stability | Unstable or oscillating training under Non-IID data |
| FedProx | Adds a proximal (L2) term toward the global model to limit client drift | Non-IID data with divergent updates |
| FedYogi / FedAdam | Applies adaptive optimizers (Yogi/Adam) to aggregated updates on the server | Models that are hard to optimize with plain averaging |
| FedBN | Keeps BatchNorm layers client-specific | Image tasks with Non-IID data |

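Flower ships built-in strategies for FedAvgM, FedProx, FedAdam, and FedYogi. Other variants can be implemented by subclassing a strategy and overriding `aggregate_fit()`. FedBN mainly requires client-side changes, since BatchNorm parameters are simply not exchanged.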
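To show how a server-side variant differs from plain FedAvg, here is FedAvgM-style server momentum on flat weight vectors. The server treats the difference between the current global model and the averaged client model as a pseudo-gradient and applies SGD with momentum to it. This is a framework-free sketch, and the learning-rate and momentum values are illustrative. With momentum 0 and learning rate 1 it reduces to FedAvg.

```python
"""Server momentum (FedAvgM-style) on flat weight vectors."""


def weighted_average(updates):
    # updates: list of (weights, num_examples)
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


class FedAvgMServer:
    def __init__(self, init_weights, server_lr=1.0, momentum=0.9):
        self.weights = list(init_weights)
        self.velocity = [0.0] * len(init_weights)
        self.server_lr = server_lr
        self.momentum = momentum

    def aggregate(self, updates):
        avg = weighted_average(updates)
        # Pseudo-gradient: how far the global model is from the clients' average
        pseudo_grad = [g - a for g, a in zip(self.weights, avg)]
        self.velocity = [self.momentum * v + d
                         for v, d in zip(self.velocity, pseudo_grad)]
        self.weights = [w - self.server_lr * v
                        for w, v in zip(self.weights, self.velocity)]
        return self.weights


plain = FedAvgMServer([0.0, 0.0], server_lr=1.0, momentum=0.0)
print(plain.aggregate([([1.0, 2.0], 1), ([3.0, 4.0], 3)]))  # [2.5, 3.5], same as FedAvg
```

Momentum accumulates consistent update directions across rounds, which smooths noisy rounds but can overshoot when `momentum` is set too high.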

5.2 Asynchronous Communication and Fault Tolerance

Real-world clients face issues such as:

- ✖️ Offline/instability (IoT devices)

- ✖️ Large latency discrepancies

Solutions include:

- Using FedAsync or Staleness-aware Aggregation strategies

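- Setting round timeouts (e.g., `round_timeout` in `ServerConfig`) and a minimum number of connected clients (`min_available_clients`)

- Down-weighting or deferring slow clients (weighted debiasing)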
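Staleness-aware aggregation can be sketched in the style of FedAsync. Suppose a client update arrives that was computed on a global model `staleness` versions old. The server mixes it in with a weight that shrinks as staleness grows. The polynomial decay `alpha * (staleness + 1) ** -a` follows the polynomial variant described for FedAsync. The `alpha` and `a` values, and the `AsyncServer` class, are illustrative assumptions.

```python
"""Asynchronous, staleness-aware mixing of client models (FedAsync-style)."""


def staleness_weight(staleness, alpha=0.6, a=0.5):
    # Polynomial decay: a fresh update (staleness 0) gets weight alpha
    return alpha * (staleness + 1) ** (-a)


class AsyncServer:
    def __init__(self, init_weights):
        self.weights = list(init_weights)
        self.version = 0  # incremented on every applied update

    def apply_update(self, client_weights, base_version):
        # base_version: global model version the client started training from
        staleness = self.version - base_version
        mix = staleness_weight(staleness)
        self.weights = [(1 - mix) * w + mix * c
                        for w, c in zip(self.weights, client_weights)]
        self.version += 1
        return mix


server = AsyncServer([0.0])
print(server.apply_update([1.0], base_version=0))  # fresh update: weight 0.6
print(server.apply_update([1.0], base_version=0))  # one version stale: about 0.424
```

The server never waits for a full cohort, so slow IoT devices still contribute, but their outdated updates move the model less.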

5.3 Model Personalization

Clients might require partial model customization, e.g.:

- Specific accent adaptation (speech tasks)

- Fine-tuning in finance or healthcare domains

Strategies:

- Freeze shared layers and fine-tune only the final (head) layers

- Personalized FL algorithms (e.g., pFedMe, FedPer)

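Because each client decides which arrays it sends to the server, some layers can stay local. For example, `get_model_weights` can exclude personalized "head" layers. The matching `set_model_weights` must then load the received arrays into the same non-head parameters, in the same order.

```python
def get_model_weights(model):
    # Share only non-head parameters; layers named "head" stay on the client.
    # Flower's NumPyClient exchanges NumPy arrays, so convert each tensor.
    return [
        param.detach().cpu().numpy()
        for name, param in model.named_parameters()
        if "head" not in name
    ]
```

VI. Advanced Features and Strategy Optimizations: Making Federated Learning More Practical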

Federated learning requires flexible strategies to address real-world issues like Non-IID data, varying data sizes, and unstable training.

6.1 Types of Heterogeneity

| Type | Description | Example |
|---|---|---|
| Label Distribution Shift | Label distributions differ across clients | Hospital A (elderly patients), Hospital B (children) |
| Feature Shift | Same labels, different feature distributions per client | Speech recorded with different microphones |
| Imbalanced Samples | Data volume varies widely across clients | Client A (thousands of samples), Client B (hundreds) |
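Experiments often simulate label distribution shift by partitioning a labeled dataset with a Dirichlet distribution. For each class, client proportions are drawn from Dir(β), and a smaller β produces more skewed clients. This is a common benchmarking convention, not something prescribed by the frameworks above. The β value, the toy labels, and the helper names are assumptions.

```python
"""Dirichlet label-skew partitioning for simulating Non-IID clients."""
import random
from collections import defaultdict


def dirichlet(alpha, k, rng):
    # Sample Dir(alpha, ..., alpha) via normalized Gamma(alpha, 1) draws
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(k)]
    total = sum(draws)
    return [d / total for d in draws]


def partition_by_label(labels, num_clients, beta=0.5, seed=0):
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    clients = [[] for _ in range(num_clients)]
    for indices in by_class.values():
        rng.shuffle(indices)
        props = dirichlet(beta, num_clients, rng)
        # Turn the proportions into cut points over this class's shuffled indices
        cuts, acc = [], 0.0
        for p in props[:-1]:
            acc += p
            cuts.append(int(acc * len(indices)))
        bounds = [0] + cuts + [len(indices)]
        for c in range(num_clients):
            clients[c].extend(indices[bounds[c]:bounds[c + 1]])
    return clients


labels = [i % 3 for i in range(300)]  # toy dataset: 3 balanced classes
parts = partition_by_label(labels, num_clients=4, beta=0.3)
for c, idxs in enumerate(parts):
    counts = [sum(1 for i in idxs if labels[i] == y) for y in range(3)]
    print(f"client {c}: class counts {counts}")
```

Every sample is assigned to exactly one client, so the partition differs from the pooled dataset only in how labels are distributed. That isolates label shift from the other heterogeneity types in the table.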

6.2 Technical Strategies

| Strategy | Core Idea | Implementation |
|---|---|---|
| Data Augmentation | Generate synthetic samples | Custom data loaders (Flower) |
| Regularization | Add a proximal (L2) term toward the global model (FedProx) | Flower/FATE |
| Client Weighting | Weight updates by sample count so small clients have less influence | Default aggregation strategy (FedAvg) |
| Personalized Optimization | Per-client models regularized toward a global model (pFedMe) | Custom client logic |
| Layer Customization | Shared backbone, custom top layers | Custom client logic (parameter freezing) |
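FedProx-style regularization adds a proximal term (μ/2)·‖w − w_global‖² to each client's local loss, which pulls local weights back toward the global model. The minimal sketch below applies it to a one-parameter least-squares problem. The μ values, learning rate, and data are illustrative assumptions.

```python
"""FedProx local update: task gradient plus proximal pull toward global weights."""


def fedprox_local_train(w_global, data, mu=0.1, lr=0.05, epochs=20):
    # Local objective: mean (w*x - y)^2 + (mu / 2) * (w - w_global)^2
    w = w_global
    for _ in range(epochs):
        task_grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        prox_grad = mu * (w - w_global)  # gradient of the proximal term
        w -= lr * (task_grad + prox_grad)
    return w


# A client whose local data follow y = 5x, while the global model sits at w = 3
local_data = [(1.0, 5.0), (2.0, 10.0)]
w_plain = fedprox_local_train(3.0, local_data, mu=0.0, epochs=200)
w_prox = fedprox_local_train(3.0, local_data, mu=5.0, epochs=200)
print(round(w_plain, 3), round(w_prox, 3))  # 5.0 4.0
```

Without the proximal term, the client drifts all the way to its local optimum (w = 5). With μ = 5, it settles halfway (w = 4), which limits how far divergent Non-IID clients can drag the aggregate.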

6.3 Advanced Features and Strategies

✅ Federated Personalization

Clients can have "shared + customized" model structures, allowing:

- Common speech recognition capabilities

- Private fine-tuning for specific accents or terminology

In Flower, layer freezing and partial training are implemented inside the client's `fit()` method.
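The "shared + customized" split can also be sketched without any framework. The client uploads only shared parameters. When a new global model arrives, the client overwrites its shared layers but keeps its personalized head. Parameters are modeled here as a plain dict keyed by layer name. The `head` naming convention is an assumption, matching the `"head" not in name` filter used in the personalization example in Section V.

```python
"""Shared-backbone / personal-head split for federated personalization."""


def is_personal(name):
    # Assumed convention: layers whose name contains "head" stay on the client
    return "head" in name


def shared_params(params):
    # What the client uploads: everything except the personalized head
    return {k: v for k, v in params.items() if not is_personal(k)}


def merge_global(local_params, global_shared):
    # Take the new global backbone, keep the client's own head layers
    merged = dict(local_params)
    for name, value in global_shared.items():
        if not is_personal(name):
            merged[name] = value
    return merged


local = {"encoder.w": 0.2, "encoder.b": 0.0, "head.w": 1.7}
global_shared = {"encoder.w": 0.5, "encoder.b": 0.1}
print(shared_params(local))                # {'encoder.w': 0.2, 'encoder.b': 0.0}
print(merge_global(local, global_shared))  # encoder from the server, head.w kept at 1.7
```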

✅ Differential Privacy

Federated learning can still leak information through gradients. Flower clients can add differential privacy to their local training with libraries such as Opacus:

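```python
from opacus import PrivacyEngine

# model, optimizer and train_loader are the client's existing PyTorch objects.
# Opacus 1.x API: make_private() wraps all three for DP-SGD training.
privacy_engine = PrivacyEngine()
model, optimizer, train_loader = privacy_engine.make_private(
    module=model,
    optimizer=optimizer,
    data_loader=train_loader,
    noise_multiplier=1.0,  # Gaussian noise scale relative to max_grad_norm
    max_grad_norm=1.2,     # per-sample gradient clipping bound
)
```

✅ Secure Aggregation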

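For high-security scenarios, Flower supports secure aggregation through the SecAgg+ protocol, which masks updates so that the server learns only their sum. Homomorphic encryption is an alternative:

- Clients encrypt model parameters with homomorphic encryption

- The server aggregates the ciphertexts without decrypting them

This approach requires integrating third-party encryption libraries (TenSEAL, PySyft).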
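The masking idea behind SecAgg-style secure aggregation can be sketched with pairwise masks. Each pair of clients shares a random mask that one client adds and the other subtracts. Individual uploads therefore look random, but the masks cancel when the server sums them. This toy omits key agreement, secret sharing of mask seeds, and dropout recovery, all of which a real protocol needs. Deriving masks from a shared string seed is a stand-in assumption.

```python
"""Pairwise additive masking: masks cancel when the server sums all uploads."""
import random

MOD = 2**32  # updates are assumed to be integer-encoded in this ring


def pair_mask(i, j, round_seed, dim):
    # Stand-in for a mask derived from a key agreed between clients i and j
    rng = random.Random(f"{round_seed}-{min(i, j)}-{max(i, j)}")
    return [rng.randrange(MOD) for _ in range(dim)]


def masked_upload(client_id, update, all_ids, round_seed):
    masked = list(update)
    for other in all_ids:
        if other == client_id:
            continue
        mask = pair_mask(client_id, other, round_seed, len(update))
        sign = 1 if client_id < other else -1  # lower id adds, higher id subtracts
        masked = [(m + sign * k) % MOD for m, k in zip(masked, mask)]
    return masked


def server_sum(uploads):
    # The server only sees masked vectors, yet their sum is the true sum
    return [sum(col) % MOD for col in zip(*uploads)]


updates = {1: [10, 20], 2: [5, 5], 3: [1, 2]}
ids = list(updates)
uploads = [masked_upload(cid, u, ids, round_seed=42) for cid, u in updates.items()]
print(server_sum(uploads))  # [16, 27]
```

If one client drops out after masking, its masks no longer cancel. Handling that case is exactly what the omitted secret-sharing and recovery steps in real protocols are for.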

✅ Supporting Imbalanced Clients (Asynchronous Optimization)

Due to client diversity:

- Clients may participate infrequently

- Devices may have slow training and response

Solutions:

- Asynchronous or staleness-aware strategies (e.g., FedAsync); server momentum (FedAvgM) can further stabilize training

- Control stability with `min_fit_clients` and `min_evaluate_clients`

- Limit per-client resources in simulation with `client_resources`

6.4 Deployment Architecture Recommendations

Recommended architecture:

```mermaid
graph TD
    DevOps[DevOps] --> API[Training Control API]
    API --> FLServer[Flower Server]
    FLServer --> C1[Client: Hospital A]
    FLServer --> C2[Client: Hospital B]
    FLServer --> C3[Client: University C]
    FLServer --> Monitor[Monitoring Module]
```

Engineering Checklist:

- Docker Compose/Kubernetes orchestration

- MLflow/WandB for metric tracking and versioning

- TLS + authentication for client protection

- Per-region and per-institution evaluation queues to check model generalization

VII. Summary and Developer Recommendations

Federated learning is no longer just academic; it's becoming an essential part of industrial and commercial AI deployments.

Practical Recommendations for Developers:

| Scenario | Recommended Practice |
|---|---|
| Rapid Prototyping | Flower local simulation environment |
| Cross-platform Training | PyTorch Lightning + Flower |
| Multilingual Tasks | Whisper + Hugging Face + FL |
| Industrial Deployment | FATE with SMPC/DP modules |
| High Heterogeneity | pFedMe / FedBN / local layer freezing |

Further Reading & Tools:

- Flower Official Docs

- Whisper Federated Fine-tuning: Flower Examples

- PySyft: GitHub - OpenMined/PySyft

- Opacus (PyTorch DP Tool): GitHub - pytorch/opacus
