ESP32-S3 is widely used in embedded AI and voice-enabled devices due to its integrated AI acceleration and low-power design.

This article explores how TensorFlow Lite Micro can be deployed on ESP32-S3 to enable real-world edge AI use cases such as wake word detection, local perception, and on-device inference. These techniques are commonly applied in custom ESP32 hardware development and production-grade embedded systems.

When voice chips are no longer the only choice, how can general-purpose MCUs enable on-device AI perception?

1. Why Run AI on MCUs? ESP32-S3 as an Edge AI Platform

Running TensorFlow Lite Micro on the ESP32-S3 enables wake word detection at the edge: lightweight neural networks run directly on the embedded device.

1.1 The stagnation of traditional wake word systems

From smart speakers and vacuum robots to wearables and home appliances, wake word detection is now a standard feature, not a premium capability.

Most of these systems rely on dedicated voice chips such as ASR5505, BD3751, or XMOS XVF series.

They are optimized for ultra-low power consumption and include built-in keyword engines — developers simply configure a wake command to enable voice control.

However, these solutions come with growing limitations:

- Wake words are fixed and cannot be modified dynamically.

- Firmware and model updates depend on chip vendors.

- No flexibility for personalized or multi-language wake words.

As voice interaction becomes a baseline requirement, this hardware-level inflexibility has become a bottleneck for innovation.

1.2 The rise of MCU + AI frameworks

With advancements in microcontroller design and neural network frameworks, a new trend is emerging: running lightweight AI models directly on general-purpose MCUs for local inference and decision-making.

ESP32-S3 strikes a balance between compute capability, power efficiency, and cost, making it suitable for embedded edge AI workloads where cloud inference is impractical.

A prime example of this shift is the ESP32-S3 running TensorFlow Lite Micro (TFLM).

- ESP32-S3 features dual-mode Wi-Fi + BLE and built-in AI vector instructions.

- TensorFlow Lite Micro is a minimal inference framework for resource-constrained devices.

- Together, they enable on-device AI tasks, from wake word detection and gesture recognition to sound classification, within a few hundred KB of memory.

This means AI no longer depends on the cloud.

Devices can sense, analyze, and respond locally, even in offline or low-power environments.

1.3 Purpose of this article

This article goes beyond a simple “wake word demo.”

It aims to help developers understand:

- TensorFlow Lite Micro is far more than a wake word tool.

- ESP32-S3 extends AI computation to the MCU level.

- Deploying models on general-purpose MCUs is becoming the new mainstream for low-power intelligent devices.

We will analyze this combination from four perspectives: technical principles, implementation path, extended capabilities, and system evolution.

2. Technical Principles with ESP32-S3 TensorFlow Lite Micro: From Wake Word Detection to Edge Perception

2.1 ESP32-S3 Hardware Overview

The ESP32-S3 is Espressif’s new-generation IoT MCU with significant improvements in compute power, AI acceleration, low power consumption, and peripheral expansion compared to previous ESP32 chips. It’s particularly suitable for embedded AI and on-device inference.

| Module | Description |
|---|---|
| CPU | Xtensa LX7 dual-core, up to 240 MHz |
| AI / DSP Acceleration | SIMD vector instruction set for convolution and matrix operations |
| Memory | 512 KB SRAM, expandable with external PSRAM |
| Wireless | Wi-Fi 2.4 GHz + BLE 5.0 |
| Interfaces | I2S, SPI, UART, ADC, PWM, etc. |
| Typical Use Cases | Offline voice recognition, motion detection, sound analysis, vibration monitoring |

The AI instruction set boosts efficiency for CNN and LSTM-style operations, eliminating the need for a separate AI co-processor or voice chip.

With just one ESP32-S3, devices can “hear,” “detect,” and “understand” their environment.

2.2 What is TensorFlow Lite Micro (TFLM)?

TensorFlow Lite Micro (TFLM) is a lightweight inference framework created by Google for running neural networks on MCUs, DSPs, and other embedded devices.

Its core concept:

Even without an OS or dynamic memory allocation, microcontrollers can still run deep learning models.

TFLM uses static memory allocation and operator registration to load and execute models efficiently within very limited resources.

| Feature | Description |
|---|---|
| Small footprint | Runtime library < 100 KB |
| No dependencies | Works without RTOS, malloc, or filesystem |
| Highly portable | Supports ARM, RISC-V, and Xtensa architectures |
| Quantized model support | Runs int8/uint8 neural networks |
| Custom operator support | Allows user-defined operators and lightweight optimizations |

Its minimalist design fits the ESP32-S3 well, delivering AI capability without compromising latency or power efficiency.
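To make the static-allocation idea more concrete, here is a minimal sketch of a bump allocator that carves every buffer out of one fixed array. It only illustrates the general technique: `StaticArena` and its methods are hypothetical names, and TFLM's real allocator is more elaborate (for example, it plans tensor lifetimes so memory can be reused).

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical illustration of arena allocation: all memory comes from one
// fixed buffer, so nothing is requested from malloc at run time.
class StaticArena {
public:
    StaticArena(uint8_t* buffer, size_t size)
        : buffer_(buffer), size_(size), used_(0) {}

    // Returns `bytes` of storage whose offset from the start of the buffer is
    // a multiple of `align` (a power of two), or nullptr if the arena is full.
    // Declare the buffer itself with alignas() to get aligned addresses.
    void* Allocate(size_t bytes, size_t align = 16) {
        size_t start = (used_ + align - 1) & ~(align - 1);
        if (start > size_ || bytes > size_ - start) {
            return nullptr;
        }
        used_ = start + bytes;
        return buffer_ + start;
    }

    size_t used() const { return used_; }

private:
    uint8_t* buffer_;
    size_t size_;
    size_t used_;
};
```

Because the arena never frees individual blocks, its peak usage is fixed once all buffers are allocated, which is why the arena size can be chosen at build time.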

2.3 System workflow

When TFLM runs on ESP32-S3, the full wake word or sound classification process looks like this:

ESP32-S3 + TensorFlow Lite Micro Workflow:

Audio Input (I2S Microphone) → Feature Extraction (MFCC / Mel Spectrogram) → Model Loading (TFLite Micro Model) → Inference Execution (TensorFlow Lite Micro) → Confidence Output → Action Trigger / Local Event Report

This workflow lets developers build custom auditory models without relying on vendor-locked algorithms.

Example applications include:

- Custom wake words for smart home devices.

- Mechanical noise classification in industrial equipment.

- Environmental sound analysis in wearables.

2.4 Why this architecture is sustainable

Dedicated voice chips are static.

MCU + TFLM systems are evolutionary:

- Models can be retrained and updated anytime.

- Different environments can use different models.

- Cloud training + on-device inference create a continuous feedback loop.

This allows devices to remain adaptable long after deployment.

These principles form the foundation of deploying lightweight AI models on ESP32-S3-based embedded devices with strict memory and power constraints.

3. Implementation Path: Building Local Wake Word Detection on ESP32-S3

A complete on-device wake word system involves five core stages:

- Audio capture and preprocessing

- Feature extraction (MFCC)

- Model design and quantization

- Model deployment and inference

- Performance and power evaluation

With ESP32-S3 TensorFlow Lite Micro, wake word detection can be deployed as an always-on edge AI feature with low power consumption.

3.1 Audio Input and Front-End Processing

(1) Hardware Interface

ESP32-S3 natively supports the I2S digital audio interface, compatible with common MEMS microphones such as INMP441, SPH0645, and MSM261S4030.

The digital connection avoids analog noise interference, ideal for small devices.

Recommended Configuration

| Parameter | Value | Description |
|---|---|---|
| Sampling rate | 16 kHz | Covers human voice frequency band |
| Bit depth | 16-bit | Balances accuracy and bandwidth |
| Channel | Mono | Stereo is unnecessary for speech recognition |
| Frame length | 40 ms (640 samples) | Matches MFCC feature window |
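The frame length in the table follows directly from the sampling rate and bit depth. The short sketch below reproduces that arithmetic; the helper names are illustrative and not part of ESP-IDF.

```cpp
#include <cstddef>
#include <cstdint>

// Samples in one analysis frame for a given sample rate and frame duration.
constexpr size_t SamplesPerFrame(uint32_t sample_rate_hz, uint32_t frame_ms) {
    return static_cast<size_t>(sample_rate_hz) * frame_ms / 1000;
}

// Bytes needed to hold one mono frame at the given bit depth.
constexpr size_t BytesPerFrame(uint32_t sample_rate_hz, uint32_t frame_ms,
                               uint32_t bits_per_sample) {
    return SamplesPerFrame(sample_rate_hz, frame_ms) * (bits_per_sample / 8);
}

// 16 kHz * 40 ms = 640 samples; at 16 bits each that is 1280 bytes per frame.
static_assert(SamplesPerFrame(16000, 40) == 640, "frame length in samples");
static_assert(BytesPerFrame(16000, 40, 16) == 1280, "frame size in bytes");
```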

ESP-IDF provides a full I2S driver with DMA-based buffering.

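#include "driver/i2s.h"  // legacy ESP-IDF I2S driver

// Mono, 16-bit, 16 kHz capture from an I2S MEMS microphone.
i2s_config_t i2s_config = {
    .mode = I2S_MODE_MASTER | I2S_MODE_RX,
    .sample_rate = 16000,
    .bits_per_sample = I2S_BITS_PER_SAMPLE_16BIT,
    .channel_format = I2S_CHANNEL_FMT_ONLY_LEFT,
    .communication_format = I2S_COMM_FORMAT_I2S,
    .dma_buf_count = 4,   // number of DMA buffers
    .dma_buf_len = 256,   // length of each DMA buffer
};

(2) Signal Preprocessing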

Before feeding data into the model, apply standard signal conditioning:

- High-pass filtering – removes DC bias

- Pre-emphasis – enhances high-frequency components

- Framing + Hamming window – maintains temporal continuity

- VAD (Voice Activity Detection) – reduces inference frequency during silence

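The ESP-DSP library provides optimized building blocks for these steps on the MCU side, such as window generators (for example dsps_wind_hann_f32()), biquad IIR filters (dsps_biquad_f32()), and FFT routines (dsps_fft2r_fc32()). Pre-emphasis and the Hamming window are short enough to implement directly.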
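The conditioning steps listed above can be sketched in portable C++ as follows. This is a reference sketch rather than an optimized implementation: the function names are hypothetical, the 0.97 pre-emphasis coefficient is a common default and not a value from this article, and the VAD is a plain RMS energy gate.

```cpp
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Pre-emphasis: y[n] = x[n] - alpha * x[n-1]. This first-order high-pass
// also suppresses most of any DC offset.
std::vector<float> PreEmphasis(const std::vector<int16_t>& pcm, float alpha = 0.97f) {
    std::vector<float> out(pcm.size());
    float prev = 0.0f;
    for (size_t i = 0; i < pcm.size(); ++i) {
        float x = static_cast<float>(pcm[i]);
        out[i] = x - alpha * prev;
        prev = x;
    }
    return out;
}

// Multiplies a frame in place by a Hamming window:
// w[n] = 0.54 - 0.46 * cos(2*pi*n / (N - 1)).
void ApplyHamming(std::vector<float>& frame) {
    const size_t n = frame.size();
    if (n < 2) return;
    const float kTwoPi = 6.28318530718f;
    for (size_t i = 0; i < n; ++i) {
        frame[i] *= 0.54f - 0.46f * std::cos(kTwoPi * i / (n - 1));
    }
}

// Energy-based VAD: reports speech when the frame's RMS exceeds a threshold.
// The threshold is application-specific and must be tuned on real recordings.
bool IsSpeech(const std::vector<int16_t>& pcm, float rms_threshold) {
    if (pcm.empty()) return false;
    double sum_sq = 0.0;
    for (int16_t s : pcm) sum_sq += static_cast<double>(s) * s;
    return std::sqrt(sum_sq / pcm.size()) > rms_threshold;
}
```

A typical per-frame order would be: check `IsSpeech` first, and only run pre-emphasis, windowing, and inference on frames that pass the gate.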

3.2 Feature Extraction: MFCC

(1) Why MFCC

MFCC (Mel-Frequency Cepstral Coefficients) is the most widely used feature in speech recognition.

It transforms waveforms into perceptually meaningful frequency features, reducing input dimensionality while preserving accuracy in low-power environments.

(2) MFCC Calculation Flow

- FFT – Compute the spectral energy of each frame.

- Mel filter banks – Map the spectrum to the Mel frequency scale.

- Log transform – Simulate nonlinear human hearing.

- DCT – Extract low-dimensional cepstral coefficients (typically 10–13).

ESP32-S3’s DSP instruction set accelerates FFT and DCT operations, achieving 2–3 ms per-frame processing at 16 kHz sampling.
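Two of the steps in the flow above, the Mel mapping and the final DCT, are compact enough to sketch directly. The code below is a plain reference version under stated assumptions: it uses the common 2595 * log10(1 + f/700) Mel formula, an unnormalized DCT-II, and hypothetical function names. It is not the ESP-DSP implementation.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hz <-> mel conversion using the common 2595 * log10(1 + f/700) formula.
float HzToMel(float hz) { return 2595.0f * std::log10(1.0f + hz / 700.0f); }
float MelToHz(float mel) { return 700.0f * (std::pow(10.0f, mel / 2595.0f) - 1.0f); }

// Center frequencies (Hz) of `num_filters` triangular filters spaced evenly
// on the mel scale between low_hz and high_hz.
std::vector<float> MelCenters(size_t num_filters, float low_hz, float high_hz) {
    std::vector<float> centers(num_filters);
    const float low = HzToMel(low_hz);
    const float step = (HzToMel(high_hz) - low) / (num_filters + 1);
    for (size_t i = 0; i < num_filters; ++i) {
        centers[i] = MelToHz(low + step * (i + 1));
    }
    return centers;
}

// Unnormalized DCT-II of the log mel energies; keeps the first num_coeffs
// coefficients, which become the MFCC vector for the frame.
std::vector<float> Dct2(const std::vector<float>& log_energies, size_t num_coeffs) {
    const size_t n = log_energies.size();
    const float kPi = 3.14159265359f;
    std::vector<float> out(num_coeffs, 0.0f);
    for (size_t k = 0; k < num_coeffs; ++k) {
        for (size_t i = 0; i < n; ++i) {
            out[k] += log_energies[i] * std::cos(kPi * k * (i + 0.5f) / n);
        }
    }
    return out;
}
```

Whatever implementation runs on the device, the training pipeline has to use the same filter count, frequency range, and DCT scaling, otherwise the model sees features it was never trained on.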

3.3 Model Design and Quantization

(1) Model Architecture

Typical TFLM-compatible speech models use compact CNN structures:

| Layer | Purpose | Example Output |
|---|---|---|
| Conv2D + ReLU | Extract time–frequency features | 20×10×16 |
| DepthwiseConv2D | Reduce dimensionality, focus on local features | 10×5×32 |
| Flatten | Flatten tensor to vector | 1600 |
| Dense + Softmax | Output classification probabilities | 2 (yes/no) |

Such models achieve high accuracy with a footprint of 100–300 KB.

(2) Model Training

Developers can use the official TensorFlow Speech Commands Dataset:

speech_commands | TensorFlow Datasets

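To train custom wake words such as “Hey Lamp” or “Hello Board” using TensorFlow, add your own recordings of the phrase; the dataset's existing words and background noise clips can serve as negative examples.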

(3) Model Quantization

To fit MCU resources, convert the model from float32 to int8:

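import tensorflow as tf
converter = tf.lite.TFLiteConverter.from_saved_model("model_path")
converter.optimizations = [tf.lite.Optimize.DEFAULT]
converter.representative_dataset = representative_dataset  # user-supplied generator of sample inputs
converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
converter.inference_input_type = tf.int8
converter.inference_output_type = tf.int8
tflite_quant_model = converter.convert()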

Quantization typically reduces size by 4× with less than 2% accuracy loss.
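The 4× figure comes from storing each weight in one byte (int8) instead of four (float32). The mapping between the two is affine, and a minimal sketch of it looks like this. `QuantParams`, `Quantize`, and `Dequantize` are illustrative names; on the device the scale and zero point are read from each tensor's quantization parameters.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

// Affine int8 quantization as used by TensorFlow Lite:
//   real_value = scale * (quantized_value - zero_point)
struct QuantParams {
    float scale;
    int32_t zero_point;
};

// Maps a real value to int8, saturating at the ends of the int8 range.
int8_t Quantize(float real, const QuantParams& q) {
    int32_t v = static_cast<int32_t>(std::lround(real / q.scale)) + q.zero_point;
    v = std::min<int32_t>(127, std::max<int32_t>(-128, v));
    return static_cast<int8_t>(v);
}

// Maps an int8 value back to the real number it represents.
float Dequantize(int8_t quantized, const QuantParams& q) {
    return q.scale * static_cast<float>(quantized - q.zero_point);
}
```

As a usage example, with a scale of 1/256 and a zero point of -128 (values typical of an int8 softmax output), the raw value 72 dequantizes to 200/256 ≈ 0.78.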

3.4 Model Deployment and Inference

(1) Embedding the Model

TensorFlow Lite Micro loads models as C arrays:

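xxd -i model.tflite > model_data.cc

This generates a file like the following (xxd derives the array name from the input file name, so it is renamed to model_data here):

const unsigned char model_data[] = {0x20, 0x00, 0x00, ...};
const int model_data_len = 123456;

(2) Inference Loop Example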

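#include <cstdio>
#include "tensorflow/lite/micro/all_ops_resolver.h"
#include "tensorflow/lite/micro/micro_interpreter.h"
#include "model_data.h"

#define TENSOR_ARENA_SIZE (80 * 1024)

// All tensors live in this statically allocated arena.
alignas(16) static uint8_t tensor_arena[TENSOR_ARENA_SIZE];

// Application-provided: fills the input tensor with int8 MFCC features.
void GetAudioFeature(int8_t* features);

extern "C" void app_main(void) {
    const tflite::Model* model = tflite::GetModel(model_data);
    static tflite::AllOpsResolver resolver;
    static tflite::MicroInterpreter interpreter(model, resolver,
                                                tensor_arena, TENSOR_ARENA_SIZE);
    if (interpreter.AllocateTensors() != kTfLiteOk) {
        printf("AllocateTensors() failed\n");
        return;
    }
    TfLiteTensor* input = interpreter.input(0);
    TfLiteTensor* output = interpreter.output(0);
    while (true) {
        GetAudioFeature(input->data.int8);
        if (interpreter.Invoke() != kTfLiteOk) {
            continue;
        }
        // The model is int8-quantized, so convert the raw score to a
        // probability before thresholding (0.78 is roughly 200/255).
        float score = (output->data.int8[0] - output->params.zero_point) *
                      output->params.scale;
        if (score > 0.78f) {
            printf("Wake word detected!\n");
        }
    }
}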

This loop achieves real-time inference at 15–20 FPS on a 240 MHz ESP32-S3 core.

3.5 Performance Metrics and Power Consumption

| Metric | Result | Description |
|---|---|---|
| Inference latency | 50–60 ms | Per-frame recognition time |
| Model size | ~240 KB | After int8 quantization |
| Memory usage | ~350 KB | Including tensors and buffers |
| CPU load | 50–60% | Single-core utilization |
| Current draw | 120 mA active / <10 mA standby | Suitable for battery-powered devices |

With low-power listening (periodic sampling + event wake-up), average consumption can drop to 30–40 mA, enabling long-term operation.
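The relationship between these figures is a simple duty-cycle average. As a rough sketch, taking the 120 mA active figure and assuming 10 mA as an upper bound for standby, a 30–40 mA average corresponds to the device being active roughly 18–27% of the time. The helper names below are illustrative.

```cpp
// Average current of a duty-cycled device:
//   I_avg = d * I_active + (1 - d) * I_standby, with d in [0, 1].
constexpr double AverageCurrentMa(double duty, double active_ma, double standby_ma) {
    return duty * active_ma + (1.0 - duty) * standby_ma;
}

// Duty cycle needed to reach a target average current (the inverse of the
// formula above).
constexpr double DutyForTargetMa(double target_ma, double active_ma, double standby_ma) {
    return (target_ma - standby_ma) / (active_ma - standby_ma);
}
```

For example, `DutyForTargetMa(30.0, 120.0, 10.0)` evaluates to 20/110, about 0.18, so the VAD gate and sampling schedule need to keep the chip in standby for more than 80% of the time to reach the lower end of the range.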

3.6 Optimization Tips

- Use fixed input dimensions to prevent memory fragmentation.

- Apply DMA buffering for efficient audio input.

- Simplify post-processing to only output the top confidence label.

- Run dual-core parallelism — one core for inference, the other for sampling and communication.

These optimizations ensure stable, real-time wake word detection without external AI chips.

This implementation approach is commonly used in voice-enabled ESP32 devices requiring always-on listening with minimal power consumption.

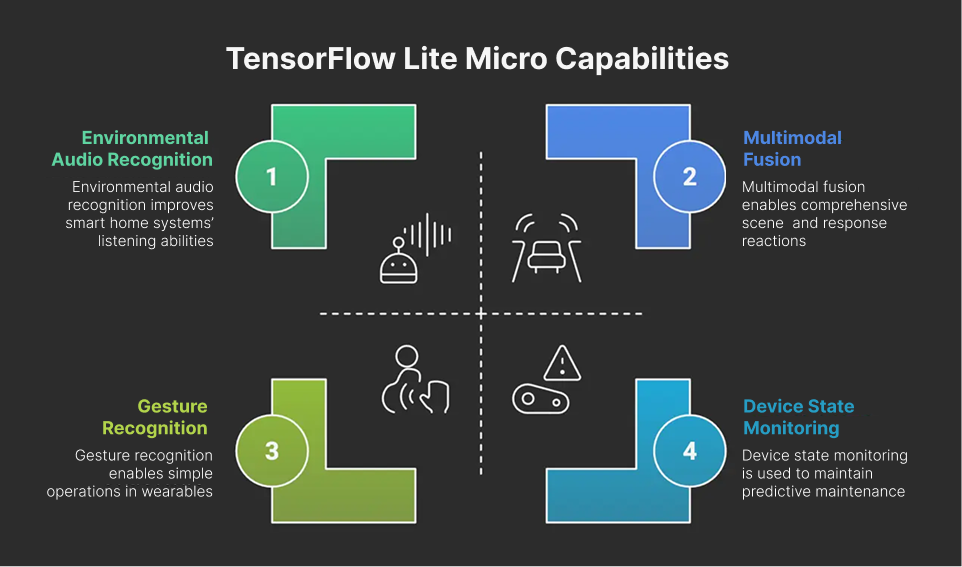

4. Beyond Wake Word: ESP32-S3 Edge AI Use Cases with TensorFlow Lite Micro

Wake word detection is only the entry-level task for TensorFlow Lite Micro.

The real value lies in the fact that the same hardware platform can enable multiple types of intelligent perception simply by changing the model.

This means ESP32-S3 + TensorFlow Lite Micro is not just a voice recognition solution — it is a programmable edge intelligence framework.

Typical ESP32-S3 edge AI applications include:

- Wake word detection for on-device voice interaction

- Sensor-based classification and anomaly detection

- Low-power perception for smart terminals and IoT devices

4.1 Environmental Sound Recognition

In smart home and security applications, environmental sound recognition can greatly enhance a system’s “hearing” capability:

- Detecting events like glass breaking, doorbells, or smoke alarms

- Identifying pet activity or abnormal noises

- Enabling local alarms triggered by acoustic events

These models usually take a one-second MFCC audio sequence as input and output scores for multiple classes, for example: ["dog_bark", "alarm", "speech", "background"].

With its SIMD vector instructions, the ESP32-S3 performs consistently in such tasks, achieving real-time processing speeds of about 8–12 FPS.
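Turning the model's output into a single event can be as small as an argmax over the class scores. The sketch below uses the example labels above; the function names and the `min_score` confidence floor are hypothetical and would be tuned per application.

```cpp
#include <cstddef>
#include <cstdint>

// Labels from the example; the order must match the model's output layer.
static const char* const kLabels[] = {"dog_bark", "alarm", "speech", "background"};
constexpr size_t kNumLabels = sizeof(kLabels) / sizeof(kLabels[0]);

// Returns the index of the highest int8 score. Dequantization is monotonic,
// so the argmax can be taken directly on the quantized values.
size_t TopIndex(const int8_t* scores, size_t count) {
    size_t best = 0;
    for (size_t i = 1; i < count; ++i) {
        if (scores[i] > scores[best]) best = i;
    }
    return best;
}

// Returns the winning label, or nullptr when the best score is below
// `min_score` (a hypothetical confidence floor chosen by the application).
const char* TopLabel(const int8_t* scores, int8_t min_score) {
    size_t best = TopIndex(scores, kNumLabels);
    return scores[best] >= min_score ? kLabels[best] : nullptr;
}
```

Returning `nullptr` for low-confidence frames lets the caller skip reporting entirely, which keeps spurious events off the network.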

4.2 Equipment Status and Vibration Detection

Industrial equipment often cannot stay connected to the internet continuously, yet its sound and vibration data contain valuable diagnostic information.

Using TFLM models, ESP32-S3 can perform on-device pattern recognition to detect whether a motor is worn, a fan is imbalanced, or a pump is running dry.

Advantages include:

- High real-time performance – no need for cloud uploads

- Low power consumption – continuous listening consumes less than 200 mW

- Strong security – only anomaly results are reported, preventing data leaks or wasted bandwidth

This form of edge AI inspection is gradually replacing traditional remote sensing systems, making predictive maintenance feasible even in compact or low-cost devices.

4.3 Gesture and Motion Recognition

If you replace the audio input with an IMU (accelerometer + gyroscope), TensorFlow Lite Micro can also run lightweight motion recognition models.

In wearable devices, this enables:

- Gesture operations (e.g., wrist raise to wake, hand wave control)

- Posture recognition (walking, running, falling)

- User behavior modeling (usage frequency, movement rhythm)

The ESP32-S3’s dual-core design allows one core to handle sensor data while the other performs inference, ensuring low-latency real-time motion recognition.
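Motion models expect a fixed-size block of samples, so the sensor side usually collects IMU readings into overlapping windows before handing them to inference. The following is a small sketch of that buffering, assuming six axes per sample; the window and hop sizes are deliberately tiny placeholder values, and `ImuWindow` is a hypothetical class.

```cpp
#include <array>
#include <cstddef>
#include <cstring>

constexpr size_t kAxes = 6;    // accel x/y/z + gyro x/y/z
constexpr size_t kWindow = 8;  // samples per inference window (placeholder)
constexpr size_t kHop = 4;     // new samples between inferences (placeholder)

// Collects IMU samples into overlapping fixed-size windows.
class ImuWindow {
public:
    // Adds one sample; returns true when a full window is ready in data().
    bool Push(const float (&sample)[kAxes]) {
        if (filled_ == kWindow) {
            // Drop the oldest kHop samples and keep the overlapping tail.
            std::memmove(buffer_.data(), buffer_.data() + kHop * kAxes,
                         (kWindow - kHop) * kAxes * sizeof(float));
            filled_ = kWindow - kHop;
        }
        std::memcpy(buffer_.data() + filled_ * kAxes, sample, sizeof(sample));
        ++filled_;
        return filled_ == kWindow;
    }

    // Window contents, oldest sample first, kAxes values per sample.
    const float* data() const { return buffer_.data(); }

private:
    std::array<float, kWindow * kAxes> buffer_{};
    size_t filled_ = 0;
};
```

In a dual-core setup, the sampling task would call `Push` and notify the inference task each time it returns true.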

4.4 Environmental Semantics and Multimodal Fusion

TFLM also supports lightweight multimodal fusion, combining inputs from multiple sensors such as microphones, light, temperature, humidity, and infrared.

This allows the system to determine environmental states like “occupied,” “noisy,” or “secure.”

In smart home or commercial environments, this can enable:

- Automatic volume adjustment based on ambient noise

- Meeting room occupancy detection

- Intrusion or abnormal activity alerts

At this level, ESP32-S3 is not only able to “hear,” but also begins to understand and react to its environment intelligently.

5. Hybrid Edge and Cloud Architecture for ESP32-S3 Devices

While ESP32-S3 is designed for on-device inference, cloud connectivity can be selectively integrated for model updates, analytics, and fleet management.

One of TensorFlow Lite Micro’s greatest strengths is its ability to form a closed loop between cloud training and device-side inference.

5.1 Roles of Local and Cloud Components

| Stage | Device (ESP32-S3) | Cloud (TensorFlow / Server) |
|---|---|---|
| Data collection | Audio and sensor sampling | n/a |
| Feature extraction | MFCC / FFT processing | Data cleaning and augmentation |
| Model training | n/a | Full TensorFlow model training |
| Model deployment | OTA update of .tflite files | Model management and distribution |
| Inference execution | Real-time TFLM inference | Event analysis and statistics |

This separation allows the ESP32-S3 to handle low-latency local inference, while the cloud manages model training, tuning, and version control.

5.2 OTA Model Update Mechanism

ESP32-S3 supports Over-the-Air (OTA) updates, allowing developers to deliver model files as independent firmware partitions.

When noise profiles, accents, or environmental conditions change, developers can simply retrain and redeploy a new model via the cloud.

This enables continuous on-device intelligence evolution.
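Before switching to a downloaded model, the firmware should at least confirm that the blob is intact. The sketch below shows one possible check, assuming the server publishes a CRC-32 alongside each model: it verifies the TFLite FlatBuffer identifier ("TFL3" at byte offset 4) and the checksum. `IsModelBlobValid` is a hypothetical helper, and how the blob is downloaded and stored is outside the scope of this sketch.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>

// Bitwise CRC-32 (reflected polynomial 0xEDB88320), the variant used by zlib.
uint32_t Crc32(const uint8_t* data, size_t len) {
    uint32_t crc = 0xFFFFFFFFu;
    for (size_t i = 0; i < len; ++i) {
        crc ^= data[i];
        for (int bit = 0; bit < 8; ++bit) {
            crc = (crc >> 1) ^ (0xEDB88320u & (0u - (crc & 1u)));
        }
    }
    return ~crc;
}

// Basic sanity checks before activating a downloaded model:
// 1. the blob carries the TFLite FlatBuffer identifier "TFL3" at offset 4;
// 2. its CRC-32 matches the value published alongside the model.
bool IsModelBlobValid(const uint8_t* blob, size_t len, uint32_t expected_crc) {
    if (blob == nullptr || len < 8) return false;
    if (std::memcmp(blob + 4, "TFL3", 4) != 0) return false;
    return Crc32(blob, len) == expected_crc;
}
```

A CRC only detects accidental corruption. Protecting against tampered models requires a cryptographic signature check on top of it.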

Such OTA-driven adaptability is valuable across smart home, wearable, and industrial IoT devices, balancing real-time response with cloud flexibility.

6. Production Applications of ESP32-S3 Edge AI

| Scenario | Use Case Description |
|---|---|
| Smart Home | Offline voice control, ambient sound detection, local security alerts |
| Wearables | Gesture recognition, fall detection, voice command input |
| Industrial Monitoring | Motor vibration analysis, anomaly sound detection, predictive maintenance |
| Retail Terminals | Voice-controlled ads, customer interaction systems |
| Agriculture & Security | Animal activity monitoring, noise tracking, acoustic event alerts |

These applications share three core traits:

- Real-time response – No cloud delay

- Low power consumption – Always-on sensing

- Data privacy – Only events are transmitted, not raw audio

By combining ESP32-S3 + TensorFlow Lite Micro, developers can achieve an optimal balance between performance, cost, and privacy in edge AI systems.

7. Comparative Insights

| Aspect | Dedicated Voice Chip | ESP32-S3 TensorFlow Lite Micro |
|---|---|---|
| Function Scope | Fixed wake words / commands | Customizable AI models (voice, sound, motion) |
| Flexibility | Firmware locked | Retrainable and replaceable models |
| Algorithm Openness | Proprietary SDK | Open-source, developer-friendly |
| OTA Capability | Usually unsupported | Fully supports model hot-swapping |
| Application Range | Voice control in appliances | Cross-industry edge AI perception (audio / motion / environment) |

This shift represents a paradigm change in embedded AI.

Choosing ESP32-S3 depends on workload complexity, power constraints, and whether on-device AI acceleration is required.

Instead of buying chips that define functions, developers now define capabilities through models.

The same MCU can “listen,” “detect,” and “adapt” — all through software-defined intelligence.

8. Summary and Takeaways

The combination of ESP32-S3 and TensorFlow Lite Micro extends far beyond simple wake word detection.

It defines a new boundary for AI on microcontrollers and edge intelligence, turning low-power devices into intelligent, adaptive systems.

Engineering Perspective

Efficient on-device inference under limited compute and memory.

Quantized, deployable, and reliable — ready for real-world products.

Product Perspective

Updatable models and evolving algorithms enable long product life cycles and post-deployment improvements through OTA learning loops.

Industry Perspective

Edge AI is no longer confined to high-end SoCs.

Now it scales down to MCUs, empowering smart homes, wearables, and industrial systems with affordable intelligence.

Wake word detection is just the beginning.

As every MCU learns to listen, perceive, and reason locally, edge intelligence will become a native capability, not an optional feature.

Ready to Bring Edge Intelligence to Your Devices?

Building production-ready ESP32-S3 AI devices requires more than model deployment. Hardware design, firmware optimization, and system integration all play a critical role in reliability and scalability.