Running YOLOv8 INT8 on RK3566 isn’t just a model conversion task—it’s a system-level alignment challenge. This article explores how quantization, operator compatibility, and detection head design define whether real-time inference is possible on RK3566, and under what strict conditions.

Why YOLOv8 INT8 Can’t Just Run on RK3566

What's the Real Issue with Combining RK3566 and YOLOv8

In many edge AI projects, RK3566 is often seen as a “cost-effective platform that can also handle some AI tasks.”

Its positioning isn’t aggressive: controllable power consumption, full peripheral support, and limited but non-zero compute power. In other words, it was not designed to leave compute headroom for complex models.

YOLOv8 Detection sits in a delicate spot.

It’s no longer a “lightweight, run-anywhere” model but is still far from server-level detection models. In theory, it belongs to the “just about doable” category for RK3566.

In practice, this assumption often fails during deployment.

Many projects run smoothly at the model stage:

ONNX exports fine, PC-side inference is normal, and the structure doesn’t look too complex. But once converted to RKNN and deployed on-device, performance drops—unstable FPS, high CPU usage, and rapid system resource exhaustion.

This isn’t due to a single parameter being off—it’s a deeper issue:

RK3566 is not a platform that brute-forces inference. Usability depends on having a clean execution path.

Why Floating-Point Inference Has Little Value on RK3566

Running YOLOv8 Detection in FP16 or FP32 on RK3566 usually leads to predictable results:

The model runs, but runs poorly.

This isn’t an implementation flaw in RKNN or the NPU; it follows from how the platform is designed.

On RK3566, the NPU isn’t a fully independent compute unit.

If there are unsupported operators in the model, execution falls back to the CPU. Detection models often include such unsupported operations.

With floating point, the issues worsen:

- Low NPU coverage for FP ops

- Constant data movement between CPU and NPU

- Fragmented inference, high scheduling overhead

Result:

Single-digit FPS with near-maxed system load.

In this state, optimizing FP16 further is meaningless.

It’s not under-tuned; it’s the wrong execution path for this hardware.

INT8 Isn’t a “Bonus” — It’s the Entry Point

Switching to INT8 reveals RK3566’s true nature.

INT8 isn’t just about precision—it unlocks the most stable, fully supported execution path on RK3566.

In INT8 mode:

- Operator mapping success rate rises

- Ops can stay in the NPU for longer

- CPU acts more like a scheduler than a compute unit

Now, YOLOv8 Detection starts “really running on the NPU,” not just “partially using the NPU.”

But INT8 isn’t zero-cost.

Detection models are sensitive to quantization, especially in the Head. Poor quantization leads to missed detections or box jitter.

So the real question isn’t “should we use INT8,” but:

How far can YOLOv8 Detection go on RK3566 with INT8—and what are the boundaries?

YOLOv8 Detection’s Structure Determines RK3566 Compatibility

YOLOv8 Detection isn’t structurally complex—but its complexity concentrates in subtle areas.

Backbone is usually fine.

As long as channel counts and input sizes aren’t extreme, RK3566’s NPU handles it stably.

The real issues appear in the later stages:

- Irregular scale changes during feature fusion

- Unpredictable Concat and Upsample combinations

- Unnecessary tensor ops in the Detection Head

These are legal in ONNX but can prevent the RKNN compiler from statically fixing the compute graph.

Most failures aren’t due to model size but structural elements the compiler can’t resolve.

If the graph can’t be fully static, NPU advantages disappear quickly.

If your model fails during RKNN compilation, the issue may lie in unsupported operators. See this ONNX opset compatibility reference for details.

Structure First — It’s a Prerequisite for INT8 Success

On RK3566, if structure doesn’t serve the execution path, quantization only helps partially.

Repeatedly validated practices include:

- Fixed input size is more important than flexibility

- Dynamic shapes cost more than they deliver here

- Simpler Detection Heads yield more stable INT8 results

These aren’t flashy choices—but they aim for one thing:

Keep the entire inference inside the NPU without interruption.

If the path breaks, even aggressive quantization can’t fix the overall performance.

How YOLOv8 INT8 Actually Runs on RK3566

What Happens Between Model and Device

Whether a YOLOv8 Detection model runs well on RK3566 is decided not at export time, but by the in-between steps that are often oversimplified.

From PyTorch to on-device execution, three major changes occur:

- Graph gets compressed into statically analyzable form

- Data precision maps from float to fixed point

- Execution path splits between NPU and CPU

Any “gray area” here nearly guarantees performance issues.

INT8 adds clarity to this path.

RK3566’s INT8 support goes beyond compute—it affects compilation, scheduling, and caching.
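As a rough illustration of where these changes happen, here is a minimal conversion sketch using the RKNN-Toolkit2 Python API on the host PC. The file names are hypothetical, and the mean/std values assume the usual YOLOv8 preprocessing of scaling RGB pixels to [0, 1]; check both against your own model and toolkit version.

```python
def convert_to_int8_rknn(onnx_path, dataset_txt, out_path):
    """Convert an ONNX model to an INT8 RKNN model targeting RK3566.

    Requires rknn-toolkit2 on the host PC. The import lives inside the
    function so this module still loads where the toolkit is absent.
    """
    from rknn.api import RKNN  # provided by rknn-toolkit2

    rknn = RKNN(verbose=False)
    try:
        # Assumption: input normalization is pixel / 255 with no mean shift.
        rknn.config(
            mean_values=[[0, 0, 0]],
            std_values=[[255, 255, 255]],
            target_platform="rk3566",
        )
        # Step 1: the graph is loaded and fixed into a static form.
        if rknn.load_onnx(model=onnx_path) != 0:
            raise RuntimeError("load_onnx failed")
        # Step 2: float -> fixed point, using the calibration images
        # listed one path per line in dataset_txt.
        if rknn.build(do_quantization=True, dataset=dataset_txt) != 0:
            raise RuntimeError("build failed")
        # Step 3: the compiled model is written for on-device execution.
        if rknn.export_rknn(out_path) != 0:
            raise RuntimeError("export_rknn failed")
    finally:
        rknn.release()
    return out_path
```

A call would look like `convert_to_int8_rknn("yolov8n.onnx", "dataset.txt", "yolov8n_int8.rknn")`, where all three paths are placeholders.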

For a full walkthrough of exporting, converting, and deploying YOLOv8 models to RK3566, see our deployment guide.

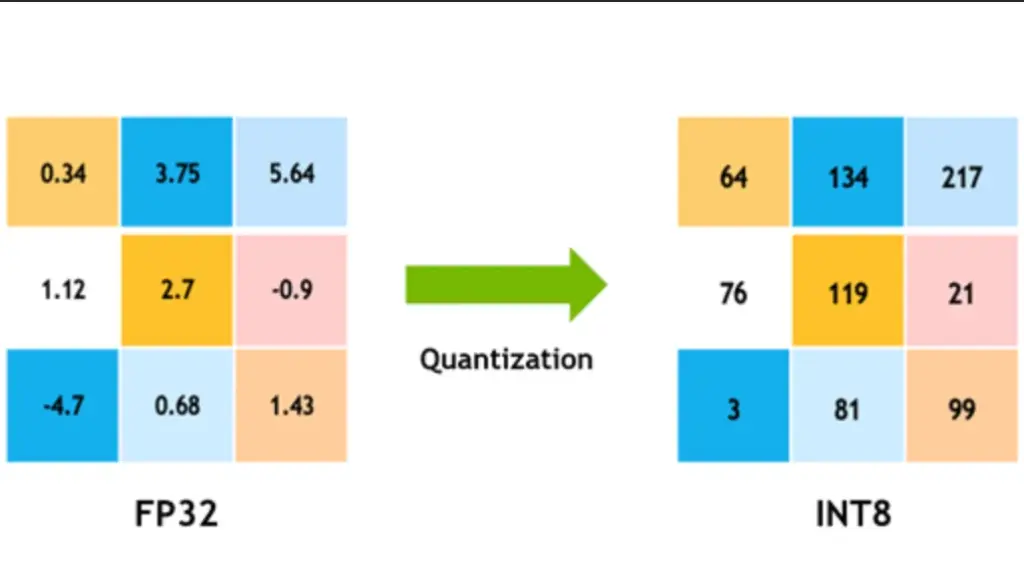

INT8 Quantization Isn’t a One-Click Step

Many first-time users of RKNN INT8 think it’s just a switch:

Enable INT8, feed a few images, done.

But it’s more like a filtering process.

Calibration data doesn’t “train” the model; it constrains value ranges.

Detection models are highly sensitive to feature distribution shifts, especially with large object size variation.
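To make "constrains value ranges" concrete, here is a minimal sketch of affine INT8 quantization in plain Python. It is a generic textbook scheme, not RKNN's internal algorithm, and the activation values are invented for illustration.

```python
def affine_int8_params(lo, hi):
    """Return (scale, zero_point) mapping [lo, hi] onto int8 [-128, 127]."""
    lo, hi = min(lo, 0.0), max(hi, 0.0)  # keep 0.0 exactly representable
    if hi == lo:
        raise ValueError("calibration range is empty")
    scale = (hi - lo) / 255.0
    zero_point = round(-128 - lo / scale)
    return scale, zero_point


def quantize(x, scale, zero_point):
    """Map a float to int8, clipping anything outside the calibrated range."""
    q = round(x / scale) + zero_point
    return max(-128, min(127, q))


def dequantize(q, scale, zero_point):
    """Map an int8 value back to the float it represents."""
    return (q - zero_point) * scale


# Hypothetical activations seen during calibration: the range is [0, 6].
calib_values = [0.0, 0.8, 2.5, 6.0]
scale, zp = affine_int8_params(min(calib_values), max(calib_values))

# A value inside the calibrated range survives with a small rounding error.
in_range = dequantize(quantize(2.5, scale, zp), scale, zp)

# A value the calibration set never showed gets clipped to the range edge.
clipped = dequantize(quantize(9.0, scale, zp), scale, zp)
```

Here `in_range` stays within half a quantization step of 2.5, while `clipped` comes back as roughly 6.0 instead of 9.0. That is the failure mode when real scenes produce activations the calibration images never did.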

If the calibration data poorly matches real scenes, issues like these appear:

- Small object confidence drops

- Box jitter across frames

- Higher false positives in complex backgrounds

These problems usually occur in the Detection Head, not the Backbone.
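One way to reduce this mismatch is to sample calibration images evenly across the scene types you expect in deployment. The sketch below assumes a hypothetical folder layout with one directory per scene type, and writes a plain text list with one image path per line, which is the dataset format RKNN-Toolkit2 takes for quantization.

```python
import random
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def build_calibration_list(scene_dirs, per_scene, out_file, seed=0):
    """Pick up to per_scene images from each scene folder and write them out.

    scene_dirs: folders such as day/, night/, crowded/ (hypothetical names).
    Returns the list of chosen paths.
    """
    rng = random.Random(seed)  # fixed seed keeps the selection reproducible
    chosen = []
    for scene_dir in scene_dirs:
        images = sorted(
            p for p in Path(scene_dir).iterdir()
            if p.suffix.lower() in IMAGE_SUFFIXES
        )
        rng.shuffle(images)
        chosen.extend(images[:per_scene])
    Path(out_file).write_text("".join(f"{p}\n" for p in chosen))
    return chosen
```

Equal counts per scene are a starting point, not a rule. If small or dense objects matter in your deployment, it may be worth weighting those scenes more heavily.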

Detection Head Is the Make-or-Break Point for INT8

On RK3566, bottlenecks rarely lie in the Backbone.

The real gap appears downstream.

The Detection Head has:

- Frequent resolution shifts

- Wide numerical ranges

- Extreme precision demands

This makes it most prone to quantization distortion.

Even if Backbone and Neck quantize well, aggressive Head quantization can degrade detection.

That’s why many RK3566 failures stem not from “large models,” but Head structures misaligned with quantization.
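The "wide numerical ranges" point can be shown with a small numeric sketch. It assumes a Head output that packs pixel-scale box coordinates and 0-to-1 class scores into one tensor with a single per-tensor scale. The values are invented, and the symmetric scheme is a simplification rather than RKNN's exact method.

```python
def per_tensor_scale(values):
    """Symmetric int8 scale covering the largest magnitude in the tensor."""
    return max(abs(v) for v in values) / 127.0


def roundtrip(values, scale):
    """Quantize to int8 with the given scale, then dequantize."""
    out = []
    for v in values:
        q = max(-127, min(127, round(v / scale)))
        out.append(q * scale)
    return out


def max_error(original, restored):
    """Largest absolute difference between two equal-length lists."""
    return max(abs(a - b) for a, b in zip(original, restored))


box_coords = [12.0, 48.0, 310.0, 620.0]  # hypothetical pixel coordinates
class_scores = [0.02, 0.35, 0.60, 0.91]  # hypothetical class probabilities

# One scale for the combined tensor: the box range dominates it.
shared_scale = per_tensor_scale(box_coords + class_scores)
scores_shared = roundtrip(class_scores, shared_scale)

# A separate scale for the score branch alone.
own_scale = per_tensor_scale(class_scores)
scores_split = roundtrip(class_scores, own_scale)
```

With the shared scale, one INT8 step is close to 5 pixels, so every class score rounds to zero. With its own scale, the score branch keeps an error below 0.004. Keeping branches with very different ranges in separate output tensors is one way a simpler Head ends up quantizing more cleanly.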

Execution Continuity Beats Theoretical Compute

Once on-device, another critical issue surfaces:

Can operators stay on the NPU continuously?

Ideally:

- Input goes into NPU

- Multiple layers execute without switching

- Output returns to CPU

But if an op can’t run on NPU, control switches to CPU.

On RK3566, with limited compute/bandwidth, this cost is high.

INT8 greatly increases the odds of uninterrupted NPU execution.

That’s why INT8 often yields better-than-linear performance gains on the same model.
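A toy model of this effect: walk the operator list, group consecutive ops by the device that would run them, and count the handoffs. The supported-op set and both graphs below are made up for illustration and do not reflect the real RKNN operator support list.

```python
def split_into_segments(ops, npu_supported):
    """Group consecutive ops by the device that would execute them."""
    segments = []
    for op in ops:
        device = "NPU" if op in npu_supported else "CPU"
        if segments and segments[-1][0] == device:
            segments[-1][1].append(op)
        else:
            segments.append((device, [op]))
    return segments


def count_handoffs(segments):
    """Each boundary between segments is one NPU<->CPU transfer."""
    return max(0, len(segments) - 1)


# Hypothetical support set, NOT the actual RKNN operator list.
NPU_SUPPORTED = {"Conv", "Relu", "Concat", "Resize", "Add", "MaxPool"}

clean_graph = ["Conv", "Relu", "Conv", "Concat", "Resize", "Conv"]
broken_graph = ["Conv", "Relu", "Gather", "Conv", "Concat", "TopK", "Conv"]

clean_handoffs = count_handoffs(split_into_segments(clean_graph, NPU_SUPPORTED))    # 0
broken_handoffs = count_handoffs(split_into_segments(broken_graph, NPU_SUPPORTED))  # 4
```

Two unsupported ops turn one continuous NPU run into five segments with four transfers. Each transfer costs memory bandwidth and scheduling time regardless of how fast the NPU computes the rest.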

Quantization Failures Don’t Mean the Model Is Bad

Often, models that work fine on PC fail post-quantization on RK3566.

This doesn’t mean model choice was wrong. More likely:

- Inadequate calibration data coverage

- Input size mismatch

- Detection Head too complex

In such cases, simplifying structure—like reducing branches or compressing channels—is more effective than tweaking quantization parameters.

Real-World Limits of YOLOv8 INT8 on RK3566

Test Conditions & Constraints

To avoid misleading results, all tests follow the same setup:

- Hardware: RK3566 (NPU enabled)

- Model: YOLOv8 Detection (no pruning)

- Input Size: 640×640, fixed

- Inference Mode: Single-frame, Batch=1

- Post-Processing: On CPU, not on NPU

We ignore extreme tuning and special trims.

Goal: Evaluate a “reusable in production” YOLOv8 Detection on RK3566.
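Since post-processing stays on the CPU in this setup, its cost counts against the frame budget. For detection models, the core of that step is typically non-maximum suppression. The following is a minimal, unoptimized NMS sketch in plain Python with invented boxes; it is not the code used in these tests.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.45):
    """Return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with a kept one.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Hypothetical detections: the first two overlap heavily, the third is apart.
boxes = [(100, 100, 200, 200), (105, 105, 205, 205), (400, 400, 480, 480)]
scores = [0.90, 0.80, 0.75]
kept = nms(boxes, scores)  # [0, 2]
```

This loop is quadratic in the number of candidate boxes, which is one reason scenes with many small objects put extra pressure on the CPU side.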

FPS: The INT8 vs FP16 Gap Isn’t Linear

The raw numbers tell the story:

| Model Precision | Inference FPS (640×640) | Stability |
|---|---|---|
| FP16 | 3 ~ 5 FPS | Unstable, high CPU load |
| INT8 | 12 ~ 18 FPS | Stable, sustained |

This isn’t just “INT8 is faster”—it’s a different execution mode.

FP16 triggers frequent CPU fallback.

INT8 allows sustained NPU-only execution.

Only in INT8 mode does YOLOv8 Detection become close to real-time on RK3566.

This holds true across projects; differences arise in scale, not in conclusion.
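Converting the FPS ranges from the table into per-frame time makes the gap easier to reason about. This is plain arithmetic on the figures above, with no additional measurements.

```python
def frame_time_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


fp16_fps = (3, 5)    # FP16 range from the table above
int8_fps = (12, 18)  # INT8 range from the table above

fp16_ms = tuple(round(frame_time_ms(f), 1) for f in fp16_fps)  # (333.3, 200.0)
int8_ms = tuple(round(frame_time_ms(f), 1) for f in int8_fps)  # (83.3, 55.6)

# Worst case: slowest INT8 vs fastest FP16. Best case: the reverse.
speedup_min = int8_fps[0] / fp16_fps[1]  # 2.4
speedup_max = int8_fps[1] / fp16_fps[0]  # 6.0
```

A frame drops from roughly 200-333 ms to roughly 56-83 ms, a speedup of about 2.4x to 6x.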

Accuracy Loss: Localized, Not Global

FPS boost is just the start. Detection accuracy defines usability.

INT8 quantization doesn’t degrade uniformly—it shows structural patterns:

| Scene Type | Accuracy Change |
|---|---|
| Medium/Large Objects | Mostly stable |
| Simple Background | Almost unaffected |
| Small/Dense Objects | Much more sensitive |
| Complex Textures | More false positives |

Thus, INT8 doesn’t “weaken everything”—it amplifies pre-existing weak points.

If the Detection Head and calibration data match your real use case, losses are usually acceptable.

No “Sweet Spot” Between FPS & Accuracy

A common RK3566 myth is that some setting exists that delivers ideal FPS with a negligible accuracy drop.

Reality:

- Choose INT8: accept structured accuracy changes

- Or use FP16: sacrifice real-time capability

It’s not a quantization flaw—it’s a hardware limit.

As model complexity nears RK3566’s ceiling, you can’t have both high FPS and full accuracy.

Turning Test Data into Engineering Judgments

Compressing the findings:

- Usable FPS lower bound is ~10 FPS on RK3566

- Below that, load spikes and stability plummets

- INT8 is a must—but not sufficient on its own

- Model structure and Head design define quantized accuracy

These hold across projects—this isn’t anecdotal.

When It’s Worth Using, When to Switch Plans

Combined performance and accuracy limits give us clear lines:

Suitable Scenarios

- Single-class or few-class detection

- Medium+ object sizes

- FPS target of 10–15

- Prioritize response speed over peak accuracy

Not Suitable

- Many small objects

- High precision needed

- Complex post-processing

- Expecting PC-level accuracy on RK3566

In the unsuitable cases, pushing RK3566 further yields little.

You must change model size, hardware, or task design.

YOLOv8 Detection Feasibility on RK3566 (INT8)

| Dimension | Acceptable Range | Typical Issue When Exceeded | Takeaway |
|---|---|---|---|
| Precision Type | INT8 | <5 FPS in FP16/FP32 | INT8 is essential |
| Input Size | ≤640×640 | Larger → nonlinear FPS drop | Fixed input preferred |
| Real FPS | 12–18 FPS | <10 FPS → system overload | 10 FPS = lower bound |
| NPU Utilization | High (continuous) | Frequent CPU fallback | Path continuity > GFLOPs |
| Backbone | Light ~ medium | Rarely a problem | Acceptable |
| Detection Head | Simpler = better | Box jitter / missed detects | Decides success/failure |
| Small Obj. Density | Low ~ medium | High → misdetects increase | Not ideal use case |
| Calibration Data | Scene-aligned | Misaligned → accuracy loss | Critical for INT8 |
| Long Runtime | Stable in INT8 | FP16 fluctuates | INT8 is sustainable |

Final Judgment

If only one takeaway matters, it’s this:

RK3566 can run YOLOv8 Detection—if you accept INT8 and understand its limits.

It’s neither a failed platform nor “AI-ready by default.”

When model, structure, and expectations align, RK3566 delivers stable, predictable results.

Push beyond its limits, and both performance and accuracy collapse.

Quick Decision Guide (Matrix)

| Your Need | RK3566 + YOLOv8 INT8 Recommended? |
|---|---|
| Few-class detection | ✅ Yes |
| Medium object sizes | ✅ Yes |
| Realtime (≥10 FPS) | ✅ Yes |
| Many small objects | ❌ No |
| High-precision localization | ❌ No |
| Complex post-processing | ❌ No |

Key Takeaways

- INT8 is the starting point for YOLOv8 on RK3566

- FPS gain comes from execution path shift, not compute boost

- Accuracy loss centers on Detection Head and select cases

- Once platform boundaries are clear, decisions become simpler

RK3566 can run YOLOv8 INT8—but only when you design within hard boundaries. From quantization to execution path planning, success depends on matching model constraints with RKNN’s capabilities and the NPU’s limited flexibility. Push past those limits, and the system fails predictably.

Looking to deploy object detection in constrained edge environments, such as the RK3566?

ZedIoT builds custom AIoT pipelines designed for real-world constraints—see our Edge AI system capabilities to explore what we deliver on RK3566 and beyond.

FAQ

Q: What is the optimal inference mode for YOLOv8 on RK3566?

A: INT8 quantization is the only viable mode. It ensures maximum NPU utilization, minimizes CPU fallback, and enables 12–18 FPS, compared to 3–5 FPS in FP16.

Q: Why is RK3566 not suited for floating-point inference?

A: Floating-point ops are only partially supported by the RK3566 NPU. Unsupported ops get routed to the CPU, leading to fragmented execution and low performance.

Q: Where does INT8 quantization most affect YOLOv8 accuracy?

A: In the Detection Head, due to frequent resolution changes and high precision needs. It’s the most fragile area post-quantization.

Q: How can I make sure my YOLOv8 model survives INT8 quantization?

A: Use calibration data that mirrors deployment scenarios, fix input resolution, and simplify the Detection Head architecture.

Q: Is there a performance-accuracy sweet spot for YOLOv8 on RK3566?

A: No. You either accept INT8’s structured accuracy loss or fall back to FP16 with unacceptably low FPS. It’s a binary choice dictated by platform limits.